Welcome to ExtremeHW

Posts: 38

Days Won: 4

Feedback: 0%

Posts posted by KyadCK


On 1/23/2021 at 9:49 AM, schuck6566 said:

In order, the 2 screenshots show a simple web search for "national guard parking garage" performed on Google and on Bing. BOTH show quite a bit of detail in the results (enough that you can get the gist of the issue without reading the story). Google had an ad on the main search result page; Bing did not (unless Firefox blocked it without my knowing). ABP was off for both searches, and each was performed in its own tab.

In a way, this is really not that different from the monetized videos on YouTube. You click on one of them, YouTube gets money and the poster gets money. You don't click, no one gets the money from the ad. The story providers are looking to have their links become "monetized" on sites that are ad supported, since they are helping draw people there. At least that's how I am seeing this. It would take work on BOTH sides to actually work: the search engines and the content provider would have to have something on THEIR site detecting if a person was coming from a 3rd-party link and charge that link appropriately.

You seem to be misunderstanding how YouTube ad revenue works.

When you click on a video and an ad plays, both the content and the ad are provided by Google. Google pays the uploader a portion of the ad money for the opportunity to present the ad.

When you click on a news article, the ads are hosted by and presented by the news site. The news site is making money from the Ads hosted on their web page which people found because of Google. Google does not get a cut unless the link you clicked on was in itself an Ad, or unless they use your data in some other way.

Google got them more traffic, and thus, more potential money. Why should Google pay them?

-

The complaints about newspapers struggling make no sense to me. Why should I kill a tree to have a non-interactive article brought to me when I can watch it and get the reactions of others in real time? Of course they are struggling; they are obsolete.

Google paying to link to articles means they will pay for the ones most likely to make them money and hedge their bets like any company would. This is common sense. In no way will this help smaller, less-read publications grow; it will just funnel more people to the larger media outlets and open Google up to lawsuits when they, naturally, pick favorites.

That last bit with France makes sense: Google was providing content without sending the viewer to the site in question, and was thus "claiming" potential revenue from something that was not theirs. I could see that taking effect globally. I cannot see Google negotiating with every news outlet on every article and trying to put a dollar value on each one; that's absurd.

-

Sequentials mean nothing, and MSI didn't feel like sharing any other specs.

Not getting my hopes up.

-

AMD:

https://www.amd.com/system/files/TechDocs/AMD NVMe_SATA RAID Quick Start Guide for Windows OS.pdf

Intel:

It's not much different from how you would make a bootable motherboard SATA RAID array. You'll need to set up the array in the BIOS, add the driver during install, all that fun stuff.

-

It's not going to go as cleanly as you might expect, but you are obviously welcome to try. You're probably going to want to reach out to Level1Techs about it if you plan to go over a few drives.

https://forum.level1techs.com/t/fixing-slow-nvme-raid-performance-on-epyc/151909

There is no benefit to add-in cards because they do not perform any function. It is that simple: there are no RAID cards for NVMe, only software, and at these speeds the file systems themselves break if the CPU doesn't first.

What a time to be alive eh?

-

Software RAID, or use AMD's or Intel's built-in tools. No motherboard is capable of NVMe RAID on its own; they just enable you to use RST/VROC or AMD's RAID tools. Even the HighPoint card is just a PLX chip and software:

https://www.anandtech.com/show/16247/highpoint-updates-nvme-raid-cards-for-pcie-40-up-to-8-m2-ssds

Quote

HighPoint's NVMe RAID is implemented as software RAID bundled with adapter cards featuring Broadcom/PLX PCIe switches. HighPoint provides RAID drivers and management utilities for Windows, macOS and Linux. Competing software NVMe RAID solutions like Intel RST or VROC achieve boot support by bundling a UEFI driver in with the rest of the motherboard's firmware.

NVMe drives are already RAID cards. They have LPDDR4 Cache, they have multi-core controllers, and they have stacks of Flash NAND (SSDs) on data buses. They can already push >4GB/s. There is no bus that will give you a performance benefit over PCI-e 4.0 that you can use on modern desktop hardware, and adding another step in the chain will hurt latency. There is simply no controller short of an actual FPGA, ASIC, or true CPU that can keep up.

What is your end goal? If you are attempting to protect data, then get an automated networked backup solution. Unless you absolutely need higher sequential reads/writes, you will see no benefit from RAID; better to just pony up for next-gen Optane or whatever the fastest PCI-e 4.0 NAND SSD is at the time.
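To illustrate the "it's only software" point, here is a minimal sketch of the address math a software RAID 0 (striping) layer does on the host CPU: each logical block is mapped to a member drive and an offset on that drive. The function name, drive count, and stripe size are hypothetical; real implementations such as RST, VROC, or HighPoint's drivers use their own on-disk layouts and metadata.

```python
# Hypothetical RAID 0 (striping) address mapping, done in software on the host CPU.
def raid0_map(logical_block: int, drives: int, stripe_blocks: int) -> tuple[int, int]:
    """Return (drive_index, block_on_drive) for a logical block in a RAID 0 set."""
    if drives < 1 or stripe_blocks < 1:
        raise ValueError("need at least one drive and a positive stripe size")
    stripe_index, offset_in_stripe = divmod(logical_block, stripe_blocks)
    drive = stripe_index % drives             # stripes rotate round-robin across drives
    stripe_on_drive = stripe_index // drives  # full stripes already placed on that drive
    return drive, stripe_on_drive * stripe_blocks + offset_in_stripe


if __name__ == "__main__":
    # A sequential run of 8 blocks over 2 drives with 2-block stripes.
    for lba in range(8):
        print(lba, raid0_map(lba, drives=2, stripe_blocks=2))
```

With 2 drives and 2-block stripes, blocks 0-1 land on drive 0, blocks 2-3 on drive 1, blocks 4-5 back on drive 0, and so on, so only transfers that span several stripes keep both drives busy at once.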

-

15 hours ago, ENTERPRISE said:

Can you show me what the homepage looks like with you scrolled down a little further ?

I assume the Latest News, Latest Posts and Podcasts are not all in one line ?

It would be something we would make as a switched feature.

Knowing that most users have a 1080p screen, that is a long way to scroll to find information. I understand if site-created content takes priority, but being 2 full screens below what most people will see means it will likely not be noticed at all.

-

Mmm... Yes and no?

I am OK with it being on just the homepage, but the issue is that even on a 1440p screen I can only see "Latest Activity", and not the things under it, due to the top bar, the "Welcome to..." banner, etc.

The Forum page has less on top, so a more condensed version of the news up top there would be nice, maybe with the carousel shrunk a bit vertically. But if I'm going to the forum specifically, I'm probably not looking for news anymore.

If the home page lost or minimized the "Welcome to" bar, changed the grey bar into a full-on carousel, moved the four items below it into the carousel, and shortened the carousel but had it show 3-4 things horizontally, most of the news/latest would be visible without scrolling on a 1440p screen.

Then again, I'm that guy with small font size and a compacted user interface in a ticketing system, so I may be biased when it comes to information density and a dislike of scrolling.

-

New chipset (500-series).

+4 PCI-e lanes for an x16/x4 config to match X570.

PCI-e 4.0 to match X570.

DDR4-3200 to match X570.

DMI width doubled to match X570.

Up to 8 cores.

Estimated +50% iGPU.

PL1 still 125 W, same as 10900K.

PL2 still 250 W, same as 10900K.

Estimated >9% IPC increase.

Intel made a 5800X and X570, but with more power draw and AVX-512.

-

Quote

During a time of increased competitor activity, Intel has decided to disclose some of the high level details surrounding its next generation consumer processor, known as Rocket Lake or Intel’s 11th Gen Core.

-

On 10/23/2020 at 2:29 PM, UltraMega said:

Digital vs analog definitely matters though. You will never get as clean a sound from a PC with a sound card because of the electrical interference, and then you have to worry about the wires going from the PC to the receiver being thick enough/shielded from any additional interference. All of that goes away with HDMI, and the only time you have analog is from the receiver to the speakers. Not to mention the DAC in a receiver is going to be better than the one in a sound card 99% of the time.

All agreed until the last sentence. Name any receiver model you please and I can nearly guarantee that my AE-9 has a better DAC, because the DAC in the AE-9 goes beyond the 24/192 HDMI is capable of: 32/384 from an ES9038PRO, to be precise, while my AV7703 has an AKM AK4458VN, which can do 32/192.

To be clear, unless I have a source audio file that is higher than 32/192, that doesn't actually matter, and the AV7703 can obviously do more than 5.1-channel output (being an 11.2 preamp and all), but the point stands. The AE-9's DAC does have better THD ratings, and this holds up in testing. It certainly won't have a better speaker line amp than most receivers, but it does have a much better headphone amp, beatable only by (expensive) dedicated headphone DAC/Amp combos.

These things are measurable by the way;

https://reference-audio-analyzer.pro/en/report/dac/creative-ae-9-foobar-96.php#gsc.tab=0

With a total Line Out (RCA) THD of 0.000587% (expressed as a fraction, that is 1/170357th of a change, so a 20 kHz signal would be accurate down to 0.12 Hz, and the response curve is flat as a board), it is not the sound card's problem; it is simply the reality of an external analog solution.
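The conversion in the paragraph above can be reproduced in a few lines. This is only the post's own arithmetic: percent to plain ratio, then that ratio scaled onto a 20 kHz tone (the scaling onto a frequency is the post's framing; THD itself is defined as a ratio of harmonic content to the fundamental).

```python
# Reproduces the post's arithmetic: THD percent -> ratio -> "1 in N" -> scaled onto 20 kHz.
THD_PERCENT = 0.000587  # AE-9 Line Out (RCA) THD quoted in the post


def thd_breakdown(thd_percent: float, signal_hz: float) -> tuple[float, float]:
    """Return (N for '1/N', ratio scaled onto signal_hz) for a THD given in percent."""
    ratio = thd_percent / 100  # 0.000587 % -> 5.87e-6
    return 1 / ratio, signal_hz * ratio


one_in_n, scaled_hz = thd_breakdown(THD_PERCENT, 20_000)
print(f"about 1/{int(one_in_n)}")
print(f"{scaled_hz:.2f} Hz at 20 kHz")
```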

Here are the rear channels of the AE-9's speaker Line Out (3.5mm);

https://reference-audio-analyzer.pro/en/report/dac/creative-ae-9-rear.php#gsc.tab=0

Interference from the case itself may be an issue with some sound cards, but not all. If I were to get an RCA-in speaker amplifier, without any of the extra circuitry that a receiver would have in it, there is a fair chance it would sound comparable in quality to a receiver of the same caliber plugged in with HDMI.

Granted this isn't "fair" as I'm comparing to one of the best sound cards on the planet, but it cost a quarter of what my AV7703 did, and the AV7703 can't operate until you buy amplifiers or speakers with them built in, so...

On 10/23/2020 at 6:28 PM, Damon said:

Exactly this^^^

Not the quality of the cables. It's analog vs digital to the receiver. The sound quality is good using the RCA cables, but the HDMI audio sounded a little bit cleaner.

I ask again;

- Are you using more than a 2.1 speaker setup?

- What specific features of the sound card do you need when using your speakers?

- Are you certain your receiver model does not have channel duplication built in?

It is entirely possible you could use S/PDIF TOSLINK and still use your sound card's features on your speakers without needing to go analog. That is how I run my RX-V375 off my AE-9.

-

36 minutes ago, UltraMega said:

Tldr.

HDCP is not the right term, you're right, but still, what I am referring to is 4K HDMI-level audio quality, which is different from standard HDMI.

Writing an essay about this won't change what one can hear clearly with their own ears. Using a 4K HDMI audio setup is just about the best option available in terms of signal quality; that is my only point. There are lots of reasons for this.

The communication medium does not describe the quality of the source file, only the maximum rate at which data can be moved.

"4K HDMI" is not a thing; no such standard exists. Every HDMI standard back to HDMI 1.4 is capable of producing a 4K signal, albeit at 30 Hz. HDMI 2.0 and 2.1 are still just 24/192 PCM per channel. That is no different from HDMI 1.4, which is also 24/192 per channel, or S/PDIF, which is also 24/192 PCM per channel. The only difference is that HDMI 2.0 bumped the total channel count from 8 to 32.

Your testing environment is flawed, or you are suffering from placebo. If you feel there are "reasons" that are not listed in the actual specification of the medium you are choosing to back, then list them, along with a source on the info.

While you are at it, you should link the models of equipment you conducted your testing with, because I suspect that the receiver you used is of significantly higher quality than the SBZ you have listed.

-

16 hours ago, UltraMega said:

Yea, that didn't really need to be stated. Obviously I know you can't plug HDMI into a speaker. Not sure how you could have been led to believe otherwise by anything I said.

My point is that if you can get your audio setup to be fully HDCP 2.2 compatible, which requires 4K HDMI, the sound will be better vs that which you would get from a sound card, assuming the receiver being used is the same either way.

I have tried this myself before, and it was really nice, but ultimately I preferred having the settings/control that my sound card gives me... well, that and the receiver I used was a little glitchy with a PC/Windows at the time. The difference when listening to a super high quality audio file like a FLAC or watching a very high quality surround sound movie file with HDCP 2.2 vs a DAC style PCIe device is just leagues away; the difference is very apparent, to me at least.

The argument I am making is that HDMI itself is irrelevant, and for whatever reason you think HDCP is somehow involved even with plain lossless music.

HDCP isn't even audio-related; it's an anti-piracy measure: High-bandwidth Digital Content Protection.

Quote

The system is meant to stop HDCP-encrypted content from being played on unauthorized devices or devices which have been modified to copy HDCP content.[2][3] Before sending data, a transmitting device checks that the receiver is authorized to receive it. If so, the transmitter encrypts the data to prevent eavesdropping as it flows to the receiver.[4]

The majority of lossless music is in 24/48 or 24/96 stereo, i.e., 2.3 Mbps or 4.6 Mbps.

- S/PDIF TOSLINK is capable of up to 125 Mbps, though my AE-9 can "only" output (and my RX-V375 accept input of) 24/192, or 9.2 Mbps, over this interface.

- USB can obviously do >400 Mbps.

- Bluetooth 5 can do 6 Mbps.

- Networking, obviously, is capable of 10/100/1000 Mbps.

- HDMI 1.4 can do 8 channels at 24/192 PCM, or ~37 Mbps.

- HDMI 2.1 can do 32 channels at 24/192 PCM, or ~147.5 Mbps.
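The PCM bitrates in the list above all follow from bit depth x sample rate x channel count. A minimal sketch that reproduces them, counting raw PCM payload only (no framing, clocking, or protocol overhead, which each interface adds on top):

```python
# Uncompressed PCM payload = bit depth x sample rate x channels.
def pcm_mbps(bits: int, sample_hz: int, channels: int) -> float:
    """Raw PCM payload in megabits per second (ignores interface overhead)."""
    return bits * sample_hz * channels / 1_000_000


FORMATS = {
    "24/48 stereo": (24, 48_000, 2),
    "24/96 stereo": (24, 96_000, 2),
    "24/192 stereo": (24, 192_000, 2),
    "HDMI 1.4, 8 ch 24/192": (24, 192_000, 8),
    "HDMI 2.x, 32 ch 24/192": (24, 192_000, 32),
}

for name, fmt in FORMATS.items():
    print(f"{name}: {pcm_mbps(*fmt):.1f} Mbps")
```

This prints 2.3, 4.6, 9.2, 36.9, and 147.5 Mbps respectively, matching the figures above.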

Regardless of how you get your data to the receiver, provided you have enough bandwidth, are using the same formats, and the mediums by which they get to the receiver are digital (and thus use the built-in DAC), the end sound, once it is run through the DAC/amps and kicked out to the speakers, is the same. HDMI, and especially HDCP, are in themselves irrelevant. The argument that you are actually making is either that your receiver's analog inputs are sub-optimal (which they commonly are), or that the DAC in the receiver is better than the one in your sound card (highly possible). That is all. You would get the same music quality if you used S/PDIF off the sound card.

Now, if HDCP is handicapping your movie audio experience and not letting you run lossless on your sound card because the media companies fear piracy more than the heat death of the universe... That is a whole separate problem, and certainly a software one, not a fault of your sound card.

Also, there is no "DAC style PCI-e device"; DAC literally stands for Digital-to-Analog Converter. Every device that takes a digital signal and makes an analog one for a speaker to play has a DAC, be it a Schiit stack, a Sound Blaster card, or a top-of-the-line receiver. If anything, that would be a "PCI-e style DAC" as opposed to a "USB style DAC" or "multi-input DAC".

16 hours ago, Damon said:

It did sound like the HDMI audio was better than the RCA cables. But like you said, it's not worth giving up all the control the sound card gives, which is why I want an HDMI sound card (don't know why anyone would assume you want to plug an HDMI cable into your speakers).

If the sound was better over HDMI than RCA, then one of the following assumptions can be made;

- The DAC in the receiver is better than the one in your sound card.

- The sound produced by your sound card's amps is less than optimal to be re-amp'd by the receiver.

- The RCA input of your receiver is not properly isolated from the rest of the system and you are getting interference.

- The sound card is not properly isolated from the rest of the system and you are getting interference.

And all of the above are why a digital interface is preferred when using an external audio solution.

But that raises the question again: what specific feature(s) from the SB suite are you looking to use?

Crystalizer, Bass, and Dialog+ are all just equalizer settings. Smart Vol is mostly irrelevant to speakers, so the last option is Surround, which... again, most receivers have channel duplication, and Creative's "Surround" is really a fake surround designed for headphones. Yamaha calls theirs "5/7 channel stereo", Marantz calls it "Multi Channel Stereo", etc, and the subwoofer crossover is usually set on the receiver unless you use a dedicated LFE channel.

Are you certain your model receiver doesn't have channel duplication built in?

-

4 hours ago, UltraMega said:

HDMI over HDCP 2.2 (4K HDMI) is going to give you clearer sound than any sound card will.

HDMI will provide no sound at all as it is not an analog signal that you can plug into a speaker.

3 hours ago, UltraMega said:

Would be nice, but for several reasons none exist. I tried HDMI audio over HDCP 2.2 once and it sounded EXTREMELY clean, but since most music is just two channels and I have 5.1 surround, it left most of my speakers idle, so I switched back. Using a sound card gives me the option to listen to music with all my speakers.

http://manuals.marantz.com/SR7012/EU/en/DRDZSYyrtgycpw.php

Original sound mode > Multi Ch Stereo > This mode is for enjoying stereo sound from all speakers.

Original sound mode > Virtual > This mode lets you experience an expansive surround sound effect when playing back through just the front (L/R) speakers only, and when listening with stereo headphones.

Standard feature on nicer receivers and preamps; I use it on my AV7703 all the time.

3 hours ago, Damon said:

It can't be that hard to replace the S/PDIF port with an HDMI one. I want all the options I'd normally have with a sound card, using the HDMI interface. And yes, technically the receiver acts as the DAC for the sound card. That's fantastic. Not the point.
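For a rough picture of what a "Multi Ch Stereo" style mode does, here is a hypothetical sketch that fans one stereo sample out to 5.1 by plain duplication. The function name and the center/LFE handling are assumptions for illustration only; actual receivers apply their own mixing, delays, and bass management.

```python
# Hypothetical "all speakers" stereo mode: duplicate L/R into the surrounds.
def stereo_to_5_1(left: float, right: float) -> dict[str, float]:
    """Map one stereo sample pair to six 5.1 channel samples."""
    mono = 0.5 * (left + right)  # simple mono sum (an assumption, not a receiver's real mix)
    return {
        "FL": left, "FR": right,  # fronts keep the original channels
        "SL": left, "SR": right,  # surrounds simply duplicate the fronts
        "C": mono,                # center fed the mono sum
        "LFE": mono,              # sub fed the mono sum; a real unit would low-pass this
    }


frame = stereo_to_5_1(0.25, -0.5)
print(frame)
```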

HDMI requires a video signal be sent, so yes it can be that hard. HDMI also requires certification, charges a royalty per port, and to meet spec requires that the soundcard be able to pump out several gigabits of data per second.

In comparison, S/PDIF in two channel 24/96 is 4.6mbps.

2 hours ago, Damon said:

I had basically the same experience, which is keeping me on 5.1 analog via sound card. I know that Creative has a software suite you can buy to use with onboard sound, but I don't think it can do what I want.

Onboard sound does not include the HDMI port because that is off the iGPU, so you are correct, it will not do what you want.

However, you can also use software to set up a virtual audio device such as VoiceMeeter.

-

10 hours ago, UltraMega said:

Also HDMI is generally superior to any sound card though there are some features you may lose by going full hdmi audio that a sound card would provide.

HDMI does not equal sound card, they are not comparable. Receivers or DACs equate to sound cards, and there are plenty of junk ones, just as there are junk sound cards.

HDMI is a digital communication medium that is capable of 8 channels at 24/192k PCM for 1.4 or 32 channels for 2.0/2.1, but nothing about HDMI itself has anything to do with the quality of the sound. If you want to compare in raw, useless numbers, my AE-9 does 32/384k, which is technically 512x more detailed than HDMI 1.4, but that doesn't make it sound better, given most lossless is recorded in 24/48k.
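The "512x" figure is just 2^(32 - 24) times more amplitude levels multiplied by 384/192 times more samples per second. A one-function sketch of that arithmetic (the function name is illustrative):

```python
# Each extra bit doubles the number of amplitude levels;
# the sample-rate ratio multiplies the number of samples per second.
def resolution_ratio(bits_a: int, rate_a: int, bits_b: int, rate_b: int) -> float:
    """How many times more 'levels x samples' format A has than format B."""
    return 2 ** (bits_a - bits_b) * (rate_a / rate_b)


print(resolution_ratio(32, 384_000, 24, 192_000))  # 512.0
```

As the post says, this is a raw number on paper, not a measure of audible quality.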

The one benefit HDMI has over S/PDIF is "more channels". You could also choose to use the sound card's DAC, if you prefer it over the receiver's, and run analog outputs from the card to the receiver's inputs, provided it supports the number of analog inputs you need, and use the receiver as an amp.

TL;DR: HDMI is comparable to USB, S/PDIF, or PCI-e, not to a sound card. Thus, it cannot be superior to a sound card.

10 hours ago, Damon said:

Yes, it would be similar to how Creative's features all work over S/PDIF and analog with their cards. The dummy monitor workaround with the current HDMI audio and going through a receiver is less than ideal.

Bingo.

If you really want Creative's software suite (emulated surround, Crystalizer, bass boost, smart volume, etc.) applied to speakers, which frankly makes no sense as those features are designed for headphones, then there's always the X7.

https://www.amazon.com/Creative-X7-High-Resolution-Headphone-Connectivity/dp/B00Q3XLGLU

Or you could use S/PDIF Optical to the receiver from any other sound card as long as you don't plan to go above 5.1 and don't mind DTS/Dolby compression.

That said, there is nothing in the Creative software suite that would work well on speakers except maybe emulated surround, and I can speak from experience that it doesn't accomplish much.

-

Right, this thread will let me get a bit more wild than the Headphone club. So...

Front room;

Onkyo TX-8050 and a pair of Cerwin Vega S1s

Computer room;

Yamaha RX-V375 and a pair of Cerwin Vega E-312s

... and then the actual theater;

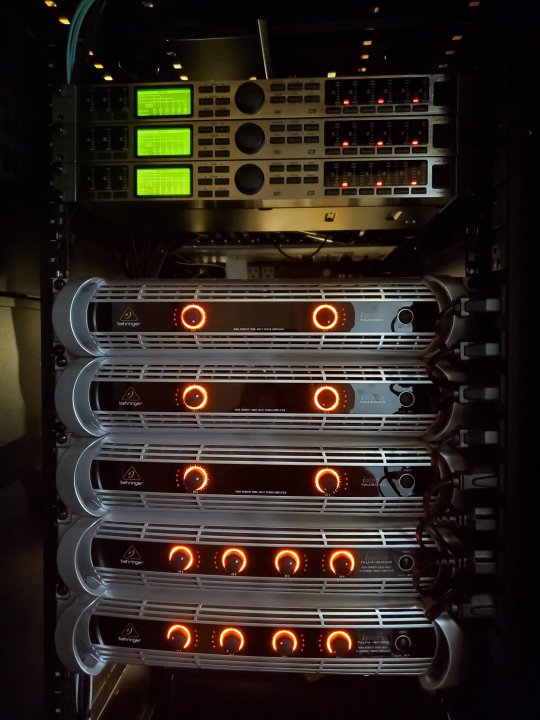

Marantz AV7703

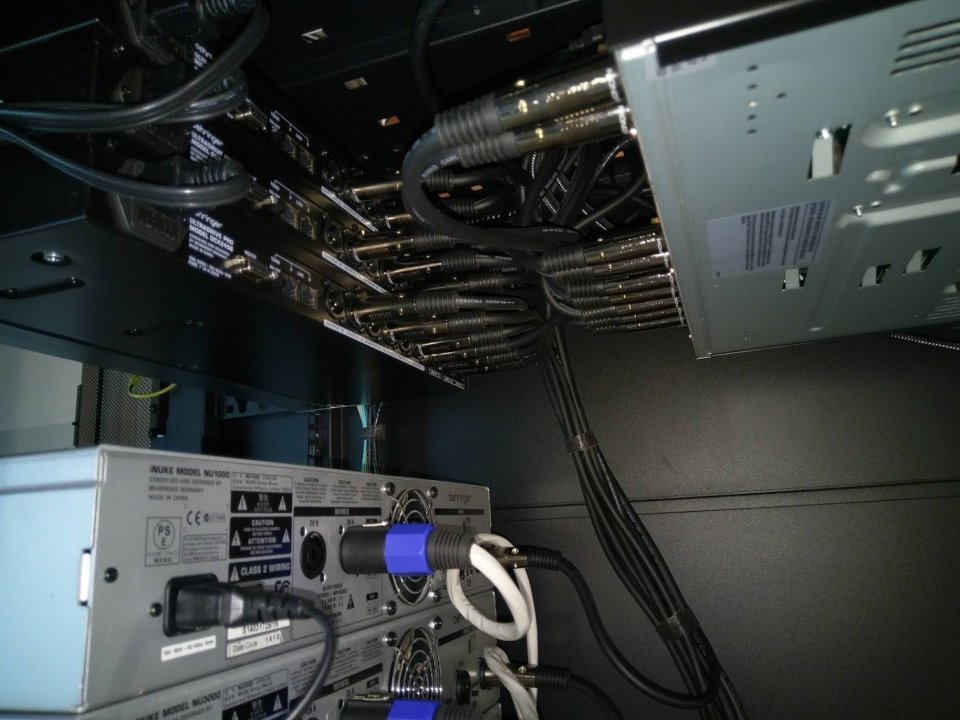

- 3x DCX2490
  - NU3000
    - (FL) Cerwin Vega S2
  - NU3000
    - (FR) Cerwin Vega S2
  - NU1000
    - (C) Cerwin Vega SL-45C
  - NU4-6000
    - (S1) 2x JBL 4645Cs
    - (S2) Cerwin Vega CLS-15S (in passive)
  - NU4-6000
    - (L/BL/R/BR) 2x Cerwin Vega D7s

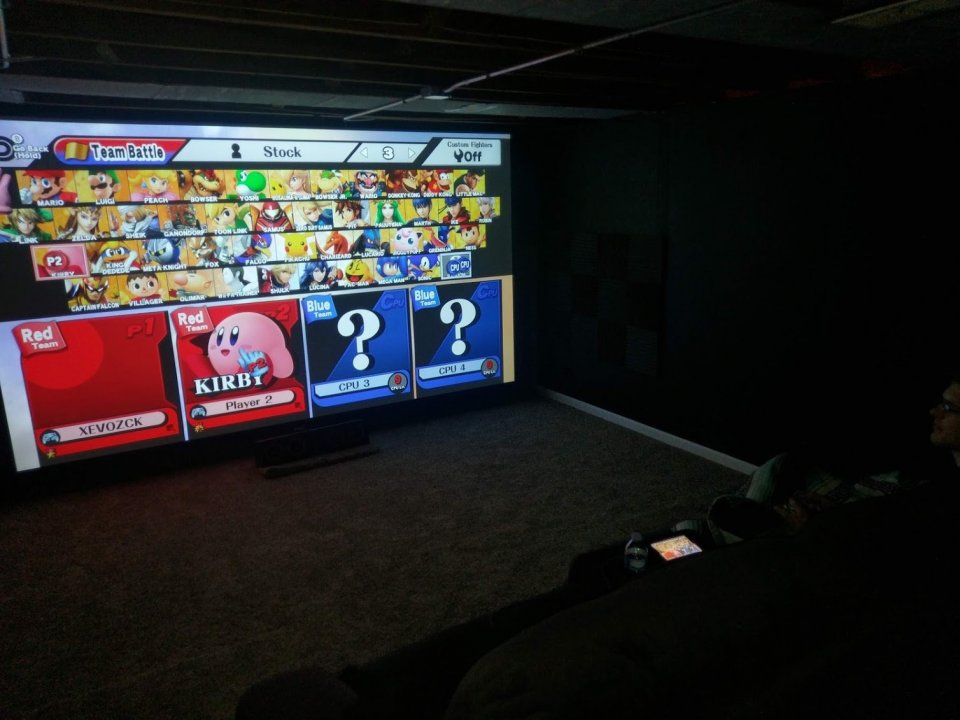

Pretty lights...

I don't really have a way to give context to the speaker size, other than that none of them has a woofer under 12", while the CLS-15S and S2s are 15" and the JBLs are 18". I suppose I could make the argument that I have about 15.2 square feet, or 1.4 square meters, of woofer cone.

... And then the lowest-sensitivity speakers are the E312s at 94 dB @ 1 W/1 m, with the S2s reaching up to 103 dB. So obviously I throw 19 kW at them, not that I ever max the amps much; it's not really required.
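Sensitivity ratings like 94 dB @ 1 W/1 m scale with power logarithmically: under the usual idealized rule, every 10x increase in power adds 10 dB. A minimal sketch under that assumption; it ignores distance, room gain, power compression, and driver limits, and the wattages are hypothetical, not the actual per-channel output of these amps.

```python
import math


def spl_db(sensitivity_db_1w_1m: float, watts: float) -> float:
    """Idealized SPL at 1 m: sensitivity + 10 * log10(power in watts)."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return sensitivity_db_1w_1m + 10 * math.log10(watts)


# Hypothetical drive levels, just to show the shape of the curve.
for watts in (1, 10, 100, 1000):
    print(f"{watts} W: {spl_db(94, watts):.1f} dB vs {spl_db(103, watts):.1f} dB")
```

By the same rule, the 9 dB gap between 94 and 103 dB means the less sensitive speaker needs roughly 8x the power (10^0.9 is about 7.9) to reach the same level.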

Music is meant to be felt, not heard, yea? I got my HD700s if I want detail, and I got these to make sure the foundation is settled every now and then.

-

HDMI is fully digital, and thus any card with only HDMI would just be, at best, a hardware decoder/re-encoder for the various audio codecs to PCM, DTS, or Dolby, whatever you have on the other end.

An actual sound card is significantly more than that, including a two-channel DAC at minimum, and usually a lot more, including 5.1/7.1 outputs, line in, mic, DACs, ADCs, and usually a headphone amp, plus some software for various features.

When you are using HDMI, the receiver is your sound card, and it provides the headphone jack, speaker jacks, and DAC to process your audio. HDMI off of your sound card would be no different than HDMI off your GPU or motherboard, and those are both commonplace, so including it on your sound card at the cost of other features makes little sense.

-

Just so we're clear: he is basing this entirely off of one Twitter post, and ignores that the 3080 has 10-40% more active transistors than a theoretical doubled 5700XT on a lesser node?

And then for some reason doubles the Fan/Other power for AMD over nVidia because... reasons... and states plainly that they're making assumptions about the VRM lineup.

I'm not calling him a liar, but that's... not really convincing.

Not to mention that the Big Navi card teased thus far has dual 8-pin connectors, which would put PCI-e connector power at 300 W (375 W including the slot). Not that AMD hasn't bypassed PCI-e power spec before, but just because one guy on Twitter used an incorrect term does not mean the reported number is off by 50%.
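For reference, the PCI Express CEM specification allows 75 W from an x16 slot, 75 W per 6-pin and 150 W per 8-pin auxiliary connector. A small sketch of the resulting in-spec board power ceiling (the function name is hypothetical, and, as the post notes, cards can and do exceed spec):

```python
# In-spec power ceilings per the PCI Express CEM specification.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def board_power_limit(connectors: list[str], include_slot: bool = True) -> int:
    """Sum the spec'd ceilings of a card's auxiliary connectors, optionally plus the slot."""
    total = sum(CONNECTOR_W[c] for c in connectors)
    return total + (SLOT_W if include_slot else 0)


print(board_power_limit(["8-pin", "8-pin"], include_slot=False))  # 300
print(board_power_limit(["8-pin", "8-pin"]))                      # 375
```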

-

5 hours ago, Andrew said:

New smaller model, all of them have OLED screens now, all of them have a higher screen to body ratio, the magsafe wireless charger to get optimum wireless charging, even the cheaper model has 5G, the Pro models have LiDAR, the Pro Max model has sensor stabilisation instead of lens stabilisation and the A14 CPU is meant to be more powerful than any CPU available in any of the current MacBook Pros.

On the surface, it doesn't look like much has changed, but quite a bit has. Apple have been doing "tick tock" updates with their phones since the 3G.

3GS is the 3G with slight improvements. 4 was entirely new, 4S was the 4 with slight improvements. 5 was entirely new, 5S was the 5 with slight improvements. 6 was entirely new, 6S was the 6 with slight improvements. 7 was entirely new, 8 was the 7 with slight improvements. XR was entirely new, 11 was the XR with slight improvements. 12 is entirely new, 12S/13 or whatever naming scheme they go for is probably going to be the 12 with slight improvements.

Heck of a claim saying their 2+4 CPU is going to be faster than a 2.4 GHz+ 8c/16t, but I guess that's what an asterisk and fine print are for.

I guess we'll find out when it's actually available in a product that can be benchmarked more easily than a phone, but makers of ARM CPUs have been trying (and failing abysmally) to claim the performance crown from x86 for a while. Is it really winning when you intentionally handicap your competition so heavily?

That said it makes perfect sense for Apple to unify their hardware given their walled garden. There is a lot to say about efficiency of code when you're writing for one platform, and the ability to include dedicated accelerators when you are not at the whims of anyone else.

-

Our hospital system bought a few thousand laptops when we kicked everyone we could out to work from home, so yet another vote on that.

That said, people have certainly been buying PCs to spend their time on at home as well given the shortages over the last few months.

-

They have the BIOS to run it now. So provided the site you order from has had its stock roll over between now and then, yes.

If no one ordered anything and you're getting stock from July, then no.

Worst case you can always email your vendor and ask when they got the latest shipment of whatever board you want to buy, or you can email AMD and they'll send you a cheap APU to upgrade BIOS with and return.

-

Pretty dead on, yea. Depending on cost I might swap the 4x8 for 2x16 sticks to lessen the load on the IMC (and it just happens to be a bit cheaper).

3600X is the right choice, not because it performs any better than a 3600, but because you get a better cooler out of the deal. As noted, you'll also be able to step up to Ryzen 5000 if you need the extra grunt.

That said, there is a case for Intel in this bracket, since its iGPU will stop you from needing to buy a GPU at all, and their hex cores are price competitive with AMD's.

AMD example; https://uk.pcpartpicker.com/list/FKzkLP

Intel Example; https://uk.pcpartpicker.com/list/CVMwPV

Can reduce to 16GB to get your budget back, or step down the MB a bit. Didn't include a case, but you shouldn't need anything special as neither of these CPUs are very power heavy. Honestly the PSU is massive overkill too.

For Minecraft especially, single-thread performance is king. I imagine a hex core should be able to run all of those at the same time just fine, so the question comes down to RAM capacity needs.

-

6 minutes ago, rares495 said:

TDP is fake news. Power consumption is much higher.

TDP is a measure of the cooling required to maintain a specific temperature at a given power level: a reference point for the minimum cooling needed to avoid runaway thermals.

TDP has never been representative of power consumption, it just happened to be close enough to not matter. At least until modern turbo/boost standards.
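AMD has publicly described its Ryzen TDP rating in these terms: TDP (W) = (tCase - tAmbient) / θca, where θca is the cooler's thermal resistance in °C/W. Actual power draw does not appear in the formula. A minimal sketch of that relation; the inputs are illustrative values chosen to land on a 105 W rating, not official figures for any specific part.

```python
def tdp_watts(t_case_c: float, t_ambient_c: float, theta_ca_c_per_w: float) -> float:
    """Thermal rating as (case temp - ambient temp) / cooler thermal resistance."""
    if theta_ca_c_per_w <= 0:
        raise ValueError("thermal resistance must be positive")
    return (t_case_c - t_ambient_c) / theta_ca_c_per_w


# Illustrative inputs: 61.8 °C case, 42 °C ambient, 0.189 °C/W cooler.
print(round(tdp_watts(61.8, 42.0, 0.189)))  # 105
```

Nothing in this calculation measures how many watts the chip pulls from the wall, which is the point being made about TDP and power consumption.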

-

Google threatens to withdraw search engine from Australia

in Journalism & Entertainment

That is related to the French case spoken about at the end of the article, which is different from what Australia is trying to push.

France said "Yo, you're hosting some of our data and it's costing us views. Pay us for the data you're hosting that came from our sites".

Australia is saying "You're making money by people using you to find us. Debate and agree with us about the value of the article, per article, and then give us some of that in order to have the right to link to our article."

You are making the same argument France did. The French are right and the Australians are dumb in this case.

This is also dumb;

Laws should apply to all or none, or at least to every company above a given size, measured in clicks or searches or something, not to one or two companies specifically.