You're thinking of the reticle limit, which (tl;dr) sets the maximum physical size of a single die. There are ways around it, though, and to be honest, it hasn't been the limiting factor in monolithic chip design.

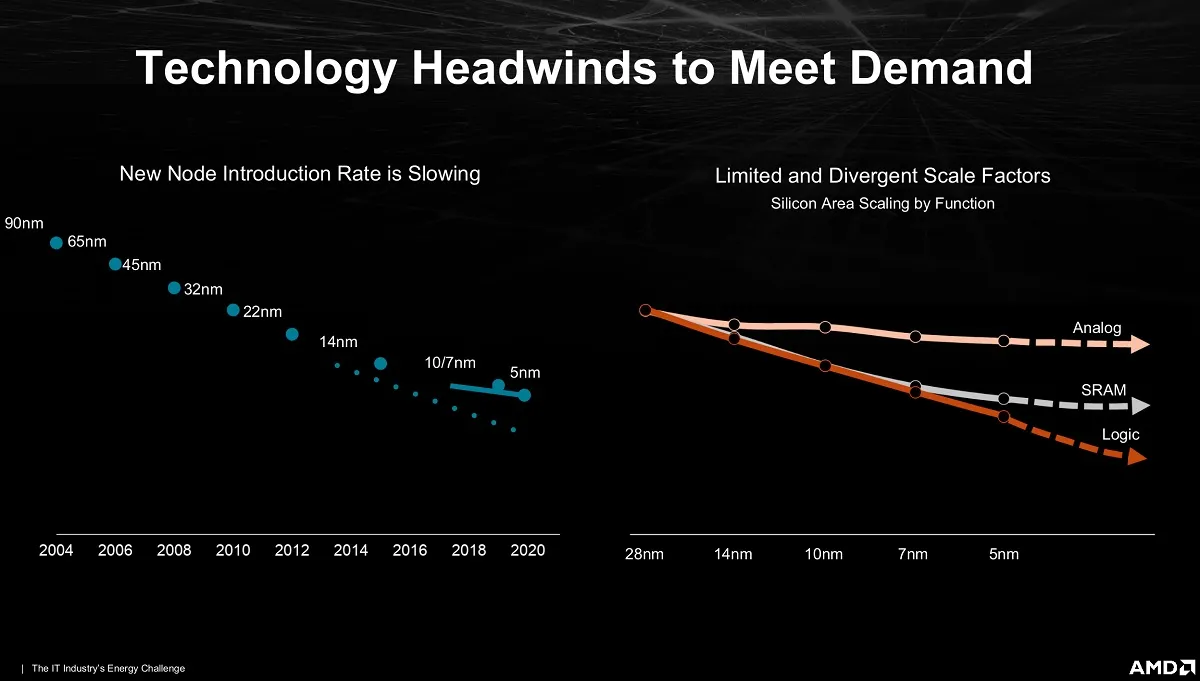

The real limit is yield. The bigger the die, the fewer chips fit on a wafer, and the higher the probability that any given die contains a defect, so you lose good dies per wafer on both counts. This becomes a big problem as we hit 3nm, 2nm, and beyond, where TSMC functionally holds a monopoly (or at least technological dominance) in the fab market and can price wafers however it wants. A TSMC N2 wafer is set to cost 2x as much as an N5 wafer and still significantly more than an N3 wafer (Source). So the real problem Intel, AMD, and Nvidia are going to face is designing chips that minimize the potential for defects and maximize good dies per wafer, so they can get the maximum profit out of each wafer, while still delivering on performance expectations.
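To put rough numbers on the yield argument, here is a minimal Python sketch. It assumes a 300 mm wafer, the common first-order dies-per-wafer approximation (wafer area divided by die area, minus an edge-loss term), and a simple Poisson yield model, Y = exp(-A × D0). The wafer price (`WAFER_COST_USD`) and defect density (`D0`) are hypothetical placeholders, not real TSMC figures, and real fabs use more elaborate yield models.

```python
import math

# Hypothetical inputs for illustration only; not real TSMC pricing or defect data.
WAFER_COST_USD = 20_000.0
D0 = 0.1  # defects per cm^2 (assumed)


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """First-order estimate: wafer area / die area, minus partial dies lost at the edge."""
    radius = wafer_diameter_mm / 2
    dies = (math.pi * radius**2) / die_area_mm2 - (math.pi * wafer_diameter_mm) / math.sqrt(2 * die_area_mm2)
    return max(int(dies), 0)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Probability a die has zero defects: Y = exp(-A * D0), with A converted to cm^2."""
    return math.exp(-(die_area_mm2 / 100.0) * defects_per_cm2)


def cost_per_good_die(die_area_mm2: float, wafer_cost_usd: float, defects_per_cm2: float) -> float:
    """Spread the full wafer cost over only the dies expected to work."""
    good_dies = gross_dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2)
    return wafer_cost_usd / good_dies if good_dies > 0 else math.inf


if __name__ == "__main__":
    # Die areas taken from the examples in this comment: Raptor Lake vs TU102.
    for area in (257.0, 754.0):
        print(
            f"{area:.0f} mm^2: {gross_dies_per_wafer(area)} gross dies, "
            f"yield {poisson_yield(area, D0):.0%}, "
            f"${cost_per_good_die(area, WAFER_COST_USD, D0):,.0f} per good die"
        )
```

With these made-up inputs, the 754 mm² die gets about 3.4x fewer gross dies (69 vs 233) and a lower yield (about 47% vs 77%), so each good die costs roughly 5.5x as much (about $616 vs $111), even though it is only about 2.9x the area.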

We have not seen any consumer monolithic dies from Nvidia, AMD, or Intel anywhere close to the reticle limit. The closest was probably Turing, with TU102 at a die size of 754 mm², but the dies since then have dropped back to just over 600 mm² for the biggest ones, with the step-down dies significantly smaller than that. Shifting back to Intel, the Raptor Lake die in the 14900K is 257 mm², again a far cry from the physical reticle limit.
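For scale, a standard lithography scanner exposure field is 26 mm × 33 mm, or about 858 mm². Assuming that field as the reticle limit, this short Python snippet expresses the die sizes quoted above as a fraction of it.

```python
# Assumes the standard 26 mm x 33 mm scanner exposure field as the reticle limit.
RETICLE_LIMIT_MM2 = 26.0 * 33.0  # 858 mm^2

# Die areas quoted in this comment.
DIE_AREAS_MM2 = {
    "TU102 (Turing)": 754.0,
    "Raptor Lake (i9-14900K)": 257.0,
}


def fraction_of_reticle(die_area_mm2: float) -> float:
    """Share of the reticle field that a single monolithic die occupies."""
    return die_area_mm2 / RETICLE_LIMIT_MM2


for name, area in DIE_AREAS_MM2.items():
    print(f"{name}: {area:.0f} mm^2 = {fraction_of_reticle(area):.1%} of the reticle limit")
```

This prints 87.9% for TU102 and 30.0% for the Raptor Lake die.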

So in the end, I don't think the reticle limit is really a factor here currently. It's more likely down to the cost per wafer of the more advanced nodes, and to the increasing complexity of architectures, which forces a rethink of how chips are manufactured and packaged: hence chiplets and tiles.
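One way to see why chiplets and tiles help with wafer cost: if each chiplet is tested before packaging (known-good-die testing), a defect only scraps that small chiplet instead of the whole design. The sketch below assumes the same simple Poisson yield model and a hypothetical defect density, and it deliberately ignores edge loss, die-to-die interface area, and packaging cost, all of which eat into the chiplet advantage in practice.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Probability a die of the given area has zero defects: exp(-A * D0), A in cm^2."""
    return math.exp(-(area_mm2 / 100.0) * defects_per_cm2)


def silicon_per_good_product(total_area_mm2: float, n_chiplets: int, defects_per_cm2: float) -> float:
    """Wafer area (mm^2) consumed per working product when the design is split
    into n equal chiplets that are each tested before assembly."""
    chiplet_area = total_area_mm2 / n_chiplets
    # Each good chiplet costs chiplet_area / yield of wafer area; a product needs n of them.
    return n_chiplets * chiplet_area / poisson_yield(chiplet_area, defects_per_cm2)


D0 = 0.1  # defects per cm^2, hypothetical
for n in (1, 2, 4):
    print(f"{n} die(s): {silicon_per_good_product(600.0, n, D0):.0f} mm^2 of wafer per good product")
```

Under these assumptions, a 600 mm² design consumes about 1093 mm² of wafer per working part as a single die, versus about 697 mm² when split into four 150 mm² chiplets.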