Welcome to ExtremeHW


Posts: 177

Days Won: 9

Feedback: 0%


Posts posted by Laithan


-

You should also be able to bypass the issue if you use a PCI-e HBA/RAID card. You could also try to find some SSDs that use Toshiba Toggle NAND.

-

Yeah, I remember; vdroop was a huge issue on this platform. The digital VRM boards brought some improvements, but they can be tricky to find.


-

On 13/12/2021 at 10:58, schuck6566 said:

The 790i is happy with the Xeon mod. For example, here's a Striker II Extreme with an X5470 OC'd to between 4.5 and 4.6 GHz with a pair of 980 Tis running.

NVIDIA GeForce GTX 980 Ti video card benchmark result - Intel Xeon Processor X5470,ASUSTeK Computer INC. STRIKER II EXTREME

WWW.3DMARK.COM


Intel Xeon Processor X5470, NVIDIA GeForce GTX 980 Ti x 2, 8192 MB, 64-bit Windows 7

@Laithan will recognize his machine & score, I'm sure.

Just learned about this thread. Build is looking great!

Ahh, those were some fun days! Yeah, the X5470 isn't unlocked, but it has a 10x multiplier, which is "good enough" for most overclocking needs, and it runs a 1600 MHz bus with no issue at all (and even higher).
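To put numbers on that: this platform's FSB is quad-pumped, so a "1600 MHz" bus is a 400 MHz base clock, and with a locked 10x multiplier the core clock is simply base clock times multiplier. A minimal sketch, assuming the usual 4x FSB convention (the function names are just for illustration):

```python
# Core clock on a locked-multiplier, quad-pumped FSB platform (sketch).
# Assumption: the advertised FSB is the effective 4x rate, so the base
# clock is FSB / 4 and core clock = base clock * multiplier.

QUAD_PUMP = 4


def core_clock_mhz(fsb_effective_mhz: float, multiplier: float) -> float:
    """CPU core clock in MHz for a given effective FSB and multiplier."""
    base_clock = fsb_effective_mhz / QUAD_PUMP
    return base_clock * multiplier


def fsb_for_target(target_core_mhz: float, multiplier: float) -> float:
    """Effective FSB in MHz needed to reach a target core clock."""
    return target_core_mhz / multiplier * QUAD_PUMP


if __name__ == "__main__":
    print(core_clock_mhz(1600, 10))   # 400 MHz base x 10 = 4000.0
    print(fsb_for_target(4500, 10))   # 1800.0
```

By the same arithmetic, the 4.5-4.6 GHz result quoted above works out to a bus of roughly 1800-1840 MHz.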

Getting above 1600 MHz with 4 sticks is tricky and usually requires the right memory and 1.5 V on the NB (where the memory controller resides on this generation), which produces a ton of heat, but it's possible. The nice thing is that you can clock the memory speed and CPU bus separately (unlinked).

These 790i Ultra chipsets were definitely ahead of their time and, IMO, stand out as one of the best ever made (not to be confused with the 780 chipset).

I'm glad to see they are still getting some love in 2022 (close enough to say that).

Good luck!

-


Did you end up buying the HighPoint SSD7103 Bootable 4X M.2 NVMe RAID Controller, or was it a different model?

-

Hey all. Every time I see these new screenshots, it makes me want to buy this game... just amazing!

I am not sure if my 2080 Ti will be enough to drive it at 4K... but the more daunting issue for me is how steep the learning curve would be, knowing that I have no clue how to fly any plane, lol. It seems quite intimidating, initially anyway. Are there different modes that add auto-assists?

-


That is (practically... or is it ACTUALLY?) photo-realistic... wow.

-

5 hours ago, schuck6566 said:

lol, and here's Nvidia's answer to the shortage.... https://www.pcworld.com/article/3607190/nvidia-rtx-30-graphics-card-shortages-gaming-gpu-gtx-1050-ti-geforce-rtx-2060.html

“There have been a lot of rumors recently about Nvidia releasing more GPUs to AIBs from older generations—the [GeForce] RTX 2060 and GTX 1050 Ti were specifically mentioned,”

They are more than happy to support artificial inflation and sell older products for double/triple the prices...

How about they just play it straight, produce more GPUs, and put measures in place, like, IDK... one-card limits or something, to get around botters and scalpers...

This is an absolute disgrace and NVIDIA isn't doing us any favors with this...

-

$650 for a 1660 Super now... What the...

I think when I bought mine for this Plex server it was around $200-ish... Things are getting out of control. Who is paying these prices?!?


-

20 minutes ago, J7SC_Orion said:

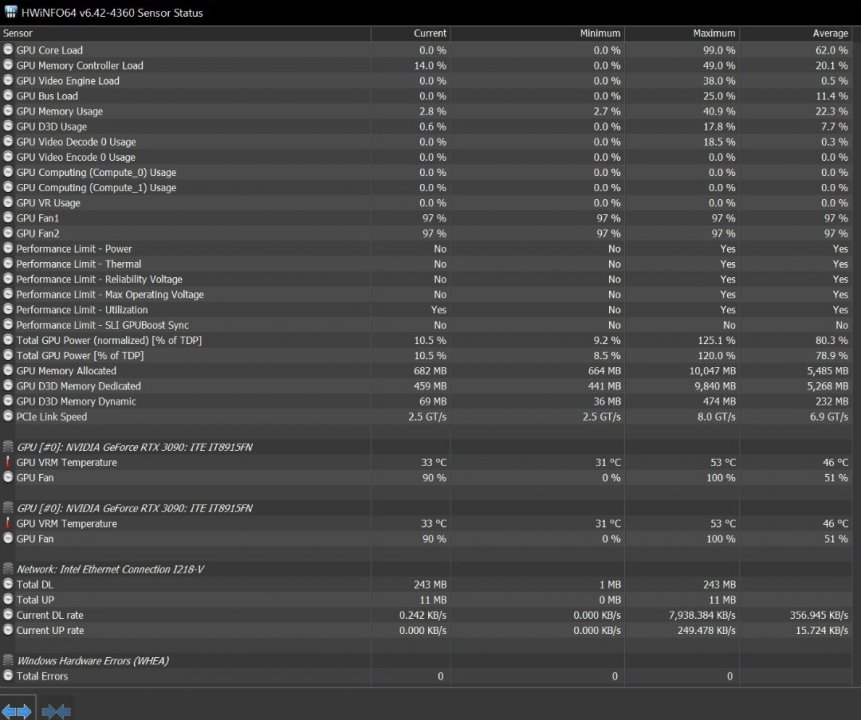

@Laithan ...further to your query above re. bandwidth and related, I just did a fresh 'max' run to log server download speed and amount. I strongly stress that this was max max max everything...4K Ultra/everything on full...new (non-cached) busy landscape via Osaka and surrounds, flying a jet at over 640 knots as low as 350 feet (probably going to get a ticket for that...) and as high as 5,000 feet, thus maximizing the requested downloads. Regular 4K play will obviously use less in terms of overall downloads from the Microsoft Azure server network.

I ran this test for exactly 5 minutes, and had reset HWInfo to '0' before the start...nothing else was running:

Nice! Yes, the ticket is in the mail.

So that works out to roughly 3 GB per hour, which is considerable but not super bad. And you won't always be playing uncached either, but theoretically it could add up quickly. If you figure 3 GB per hour, 4 hours a day, 3 days every week for a month (4 weeks), it could rack up around 144 GB.
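Here's that back-of-the-envelope math as a small sketch, assuming a flat 3 GB/hour average and a 4-week month (the helper names are hypothetical). It also converts 3 GB/hour into an average line rate for comparison with ISP plan speeds:

```python
# Back-of-the-envelope data usage for a streaming game (sketch).
# Assumptions: a constant average of N GB/hour and a 4-week "month".


def monthly_usage_gb(gb_per_hour: float, hours_per_day: float,
                     days_per_week: float, weeks: float = 4) -> float:
    """Total GB downloaded over the given play schedule."""
    return gb_per_hour * hours_per_day * days_per_week * weeks


def average_mbps(gb_per_hour: float) -> float:
    """Average download rate in megabits/s (decimal units: 1 GB = 1000 MB)."""
    megabits_per_hour = gb_per_hour * 1000 * 8
    return megabits_per_hour / 3600


if __name__ == "__main__":
    print(monthly_usage_gb(3, 4, 3))    # 144
    print(round(average_mbps(3), 2))    # 6.67
```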

-

Disclaimer: I'm no expert, as I have never played a flight sim seriously, but the discussion about the controllers made me curious. I was shocked to see how many choices there really are out there, and some of them look fantastic. The Extreme 3D Pro was also highly recommended from what I could see. I figured I would leave these links here because they look pretty fun, and if someone is looking to buy a new controller, this could be a good place to start.

https://www.logitechg.com/en-us/products/space/extreme-3d-pro-joystick.963290-0403.html

http://www.thrustmaster.com/products/t16000m-fcs-hotas

http://www.thrustmaster.com/products/hotas-warthog/

https://www.logitechg.com/en-us/products/space/x52-space-flight-simulator-controller.945-000025.html

https://www.logitechg.com/en-us/products/flight/flight-simulator-yoke-system.945-000023.html

-


Apparently there was a re-release of this fix (maybe). The first update, which I installed on 2/6/2021, was build version 1.21.3.4014. Today I noticed another update that includes the same DDoS description (below) and is now build version 1.21.3.4021. This notification came directly from within the app by way of the "What's new" button next to the "Install updates" button. When I tried to confirm via the web, I didn't get too far and didn't want to spend a lot of time on it either, but I remember that the same fix listed below was mentioned both times.

FIXES:

(Security) Mitigate against potential DDoS amplification by only responding to UDP requests from LAN

I also wanted to leave this link in case there is ever a need to revert to a prior version for some reason.

Repository of prior Plex Media Server versions
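For anyone curious what that fix means in practice: an amplification attack sends small UDP requests with a spoofed source address, and the server's larger replies land on the victim. Refusing to answer anyone outside the local network closes that hole. A rough sketch of the idea in Python; this is not Plex's code, and the LAN ranges, reply payload and `serve()` loop are hypothetical:

```python
# Sketch of "only respond to UDP requests from the LAN".
# NOT Plex's implementation: the LAN ranges, reply payload and serve()
# loop below are hypothetical, for illustration only.
import ipaddress
import socket
from typing import Optional

# Ranges treated as "local" in this sketch (RFC 1918, loopback,
# link-local, IPv6 ULA/link-local/loopback).
LAN_NETWORKS = [
    ipaddress.ip_network(net)
    for net in (
        "10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16",
        "127.0.0.0/8", "169.254.0.0/16",
        "fc00::/7", "fe80::/10", "::1/128",
    )
]


def is_lan_source(addr: str) -> bool:
    """True if the sender's IP falls inside one of the LAN ranges."""
    ip = ipaddress.ip_address(addr)
    # An IPv4 address is never "in" an IPv6 network (and vice versa),
    # so mixing both families in one list is safe.
    return any(ip in net for net in LAN_NETWORKS)


def reply_for(request: bytes, src_ip: str) -> Optional[bytes]:
    """Reply to LAN senders only; return None to silently drop the packet.

    Dropping off-LAN requests means a spoofed internet source address
    can't use this service to bounce amplified traffic at a victim.
    """
    if not is_lan_source(src_ip):
        return None
    return b"HYPOTHETICAL-DISCOVERY-REPLY"


def serve(port: int) -> None:
    """Minimal IPv4 UDP loop applying the filter above (runs forever)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("0.0.0.0", port))
    while True:
        data, (src_ip, src_port) = sock.recvfrom(2048)
        reply = reply_for(data, src_ip)
        if reply is not None:
            sock.sendto(reply, (src_ip, src_port))
```

The key design choice is to drop off-LAN packets silently rather than send an error back, since any reply at all is exactly what an attacker wants reflected.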

-


I understand that this game will stream maps while you are playing. It seems that a lot of ISPs are imposing data caps on plans. Does playing this game for a significant time actually consume a lot of data? When you crank up the detail to max, are you really downloading at 6.25 MB/s constantly?
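For reference, 6.25 MB/s is just the 50 Mbps "ideal" internet requirement divided by 8 bits per byte. A quick sketch of the conversion, assuming decimal units and a link that stays fully saturated (a worst case, not a measurement):

```python
# ISP line rate (megabits/s) -> bytes-based figures (sketch).
# Assumes decimal units (1 MB = 1,000,000 bytes, 1 GB = 1000 MB) and a
# link running flat out, i.e. a worst case rather than typical play.

BITS_PER_BYTE = 8


def mbps_to_mb_per_s(mbps: float) -> float:
    """Megabits per second -> megabytes per second."""
    return mbps / BITS_PER_BYTE


def gb_per_hour_at(mbps: float) -> float:
    """GB transferred in one hour if the link stays saturated."""
    return mbps_to_mb_per_s(mbps) * 3600 / 1000


if __name__ == "__main__":
    for label, mbps in (("minimum", 5), ("recommended", 20), ("ideal", 50)):
        print(f"{label}: {mbps_to_mb_per_s(mbps)} MB/s, {gb_per_hour_at(mbps)} GB/h")
```

A saturated 50 Mbps link would move 22.5 GB per hour, so real-world usage hinges on how much of that bandwidth the game actually uses and how much scenery is already cached.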

| Specification | Minimum | Recommended | Ideal |
|---|---|---|---|
| Internet requirement | 5 Mbps | 20 Mbps | 50 Mbps |

-

On 1/28/2021 at 4:28 PM, J7SC_Orion said:

...thanks, @Laithan ...Microsoft Flight Simulator 2020 deserves the credit as it is easy to adore and thus invest a bit of time into sharing, especially given the accuracy re. area I live in - I usually start my workday early by driving to the local mountains (only 20 min away, unless rush hour) for a quick hike and also some reading over a nice coffee, and I actually use Flight Simulator 2020 (set to live weather...) to help pick a particular spot, like this local ski area...

I just discovered there is now also a VR update for this game... that's got to be intense!!

-

1 hour ago, Sgt_Swanny said:

They released a patch for it today: https://forums.plex.tv/t/security-regarding-ssdp-reflection-amplification-ddos/687162

Thanks for the heads-up; I applied it just now.

-

1 hour ago, BWG said:

I don't have much to add other than that my AX6600 streams 4K HDR wirelessly and perfectly. It's a consumer mesh router: the ASUS ZenWiFi XT8.

Also, Chromecast is pretty weak at decoding some 4K content and causes a lot of transcoding, so I suggest a Shield TV Pro instead. It works way better than integrated smart TV hardware/software.

I can't wait to build a house with hardlines everywhere!

Awesome router. Looks like it belongs in Star Wars.

I agree with you. I have noticed that more than one brand of TV with Roku integrated has a really hard time with 4K direct play content. It will tell you that the server isn't powerful enough or that your wireless connection isn't strong enough... but the truth is, the TV itself is at fault, as it doesn't have enough processing power. I have tested and found the same issues with both TCL and Magnavox smart TVs with Roku integrated. I even tried a wired connection as a test, and there was no difference.

I also have an NVIDIA Shield PRO and it has no issues with 4K content.

-

11 hours ago, schuck6566 said:

Thanks for the heads up!

I personally don't access my server from outside my private network, nor do I have any kind of SSDP running... but that's really good to know, as I had not even heard about this SSDP "feature". I can't understand why anyone would want to use this kind of "service", as it is obviously a security vulnerability that seems to be designed for those who don't have basic networking knowledge. UPnP should also be disabled, of course... If ease of use is a priority over security, then you clearly need someone else to manage your infrastructure.

-

I had heard of these a while back but forgot about them. Linus just posted a new video featuring the "HoneyBadger". Although it doesn't use the term RAID, this appears to be a hardware-based solution that combines multiple NVMe drives, which would essentially be similar in functionality to RAID.

This thing is extremely impressive... 24 GB/s

https://www.liqid.com/products/composable-storage/element-lqd4500-pcie-aic-ssd
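Not sure how the HoneyBadger does it internally, but the general idea behind RAID 0 style striping is easy to sketch: consecutive stripes rotate across the drives, so a large sequential transfer keeps every drive busy at once. A generic illustration (drive count and stripe size are arbitrary parameters, not the product's):

```python
# RAID 0 (striping) address mapping, as a generic sketch.
# Not a description of any specific product; drive count and stripe
# size are arbitrary parameters.
from typing import Tuple


def locate_block(lba: int, num_drives: int, blocks_per_stripe: int) -> Tuple[int, int]:
    """Map a logical block address to (drive index, block offset on that drive)."""
    stripe_index, offset_in_stripe = divmod(lba, blocks_per_stripe)
    drive = stripe_index % num_drives           # stripes rotate round-robin
    stripe_on_drive = stripe_index // num_drives
    return drive, stripe_on_drive * blocks_per_stripe + offset_in_stripe


if __name__ == "__main__":
    # 4 drives, 8 blocks per stripe: blocks 0-7 land on drive 0,
    # 8-15 on drive 1, ... and block 32 wraps back to drive 0.
    for lba in (0, 8, 16, 24, 32):
        print(lba, locate_block(lba, num_drives=4, blocks_per_stripe=8))
```

At best, sequential throughput approaches the sum of the individual drives; the trade-off is that losing any one drive loses the whole array.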

-

2 hours ago, ENTERPRISE said:

FYI, I ended up buying the HighPoint M.2 NVMe RAID Controller via PCI-Express 4.0 x16, not so much for playing with RAID (not that it is needed to do RAID) but because it also allows me to swap out NVMe drives more easily than compared to when they are on my motherboard. Will also be reviewing this add in card so keep your eyes peeled for that

Are you able to set that up in RAID 0? Did you have to use Windows Disk Management for the RAID? What kind of performance are you getting with ATTO? I guess I should wait for the full review.

-

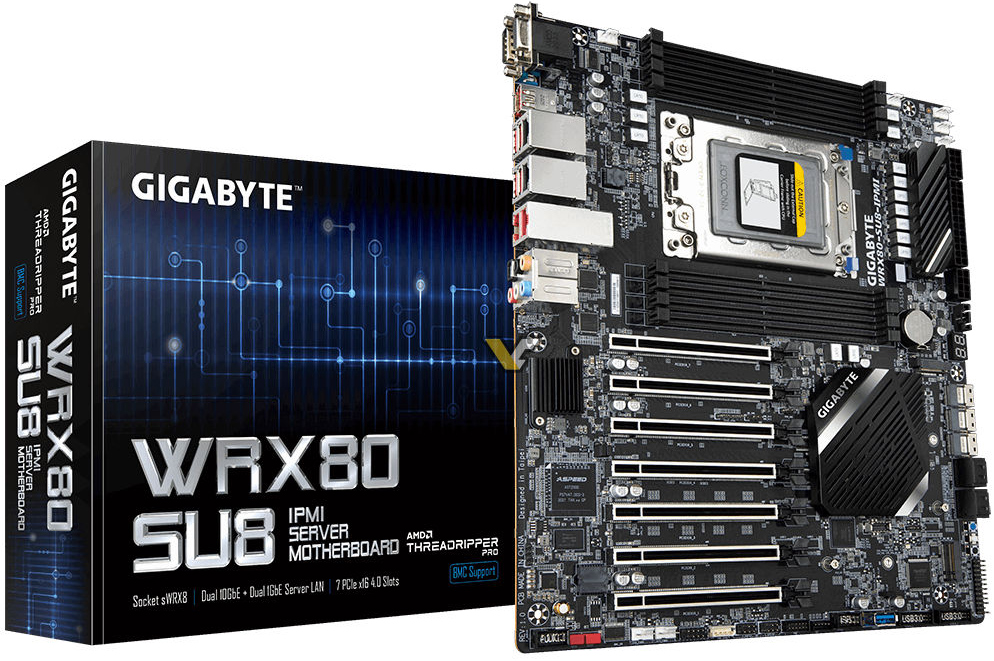

On 1/1/2021 at 2:12 PM, J7SC_Orion said:

@ENTERPRISE @Laithan ...a bit off-topic, though this would hold a heck of a lot of nvme raid cards, and apparently also has 2x U.2 onboard...

From the 'pressing-your-nose-on-the-Porsche-showroom-window' file: Gigabyte's upcoming TRX80 Threadripper Pro board...up to 2 TB 8-channel RAM (!), 7x PCIe 4 x 16, dual 10 GbE Lan - and it's black/silver shiny !

Now that's my kind of motherboard!!

With regard to NVMe RAID, I think the current answers are only true... for now...

Like they say, "If you build it, they will come"... I think it is only a matter of time, but there are a couple of barriers that only time can really address.

For one, I think that on desktop boards the number of PCI-e lanes available for NVMe is extremely limited, which creates a bottleneck fairly quickly. PCI-e 4.0 can help with this; however, it might not be until NVMe is supported on PCI-e 5.0 that this can really shine.
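To illustrate the lane math: an NVMe drive typically takes an x4 link, and each PCI-e generation roughly doubles per-lane bandwidth. A rough sketch, assuming 128b/130b encoding and a hypothetical 8 spare lanes on a desktop board:

```python
# Rough PCI-e bandwidth budget for NVMe drives (sketch).
# Per-lane figures are one-direction numbers after 128b/130b encoding
# overhead; the 8-lane budget in the example is hypothetical.

GT_PER_S = {3: 8, 4: 16, 5: 32}   # transfer rate per lane, by PCI-e generation
ENCODING = 128 / 130              # 128b/130b line code (PCI-e 3.0 and later)


def lane_gb_s(gen: int) -> float:
    """Approximate usable GB/s per lane, one direction."""
    return GT_PER_S[gen] * ENCODING / 8


def nvme_budget(free_lanes: int, gen: int, lanes_per_drive: int = 4):
    """How many x4 NVMe drives fit, and their combined link bandwidth in GB/s."""
    drives = free_lanes // lanes_per_drive
    return drives, drives * lanes_per_drive * lane_gb_s(gen)


if __name__ == "__main__":
    for gen in (3, 4, 5):
        drives, total = nvme_budget(free_lanes=8, gen=gen)
        print(f"PCI-e {gen}.0: {drives} drives, ~{total:.1f} GB/s")
```

The drive count stays stuck at whatever the lanes allow; only the generation raises the ceiling, which is why faster PCI-e matters more than anything else here.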

The other issue is the "controller". Since NVMe interfaces with PCI-e natively, a "controller" isn't really what is needed in the traditional sense. NVMe RAID would essentially have to be an add-in PCI-e device or emulator, in hardware, that gives each NVMe drive dedicated PCI-e bandwidth and presents those drives to the PCI-e interface on the motherboard so they are "seen" as a single drive. I think this is something we will possibly see in the next few years, but we are just not there yet IMO... but I could be totally wrong; we shall have to wait and see.

-


This is just an amazing thread and I wanted to show my appreciation for the time and effort @J7SC_Orion took to put this together. Well done sir, well done!

-


2 hours ago, ENTERPRISE said:

Aside from the octo channel of RAM, I am really liking all the PCIe lanes.

You made me realize that in order to obtain full memory bandwidth, you must populate it with 8 sticks... which is not only expensive but is also going to make overclocking a bit more challenging. I wonder what the performance difference really is, at the end of the day, between quad channel and octo channel. Gimmick or the real deal? Hmm....
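On paper, peak bandwidth scales linearly with populated channels. A quick sketch, assuming 64-bit DDR4 channels and DDR4-3200 as an arbitrary example speed:

```python
# Theoretical peak DRAM bandwidth by channel count (sketch).
# Assumes 64-bit (8-byte) channels as with DDR4 DIMMs and ignores
# real-world efficiency; DDR4-3200 is just an example speed.

BYTES_PER_TRANSFER = 8  # one 64-bit channel moves 8 bytes per transfer


def peak_bandwidth_gb_s(channels: int, mt_per_s: int) -> float:
    """Peak GB/s = channels * 8 bytes * mega-transfers/s / 1000."""
    return channels * BYTES_PER_TRANSFER * mt_per_s / 1000


if __name__ == "__main__":
    for channels in (4, 8):
        print(f"{channels} channels @ DDR4-3200: {peak_bandwidth_gb_s(channels, 3200):.1f} GB/s")
```

So eight channels at DDR4-3200 give a theoretical 204.8 GB/s versus 102.4 GB/s for four; whether that shows up in practice depends on whether the workload is actually bandwidth-bound.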

-

28 minutes ago, J7SC_Orion said:

^^Nice ! And yeah, it really depends on the use case. If your concurrent workloads aren't heavily threaded, then the 22c/44t Xeon doesn't make a lot of sense (never mind dual Xeons).

BTW, I can relate re. 380W 2080 TIs - I've got two of them in my work-play hybrid and they do pull a combined 760 W (stock bios). The TR 2950X is a nice sample, all-core at 4.3 GHz / 1.03375v, and a couple of cores on non all-c have hit above 4.6 GHz. IMO, the 16c/32t 2950X is right between a gaming CPU and a full HEDT workhorse such as Xeon and Epyc. It has 'NUMA' vs 'UMA' modes which change its character, speed, latency etc. Perfect for a hybrid build:

My use case scenario is nothing less than "overkill", lolz! You are totally right, though. I probably could have gotten away with a single 8-core CPU in my ESXi server, never mind 20 cores. At the time, I was also using it as my Plex server, which was about the only thing really pushing it, but now it runs file servers, Pi-hole, Steam cache and a few others that don't need much at all.

That's a sweet system you have there. I like the way the hardline tubing runs. I see you have an open Thermaltake. My next case will definitely be an open design, especially since I always have to leave the side panel off anyway to get rid of the heat.

-


2 hours ago, Diffident said:

TDP isn't total power draw. Plus Intel TDP is listed as its base freq not the turbo freq.

I have 2 Xeon E5-2670's in a single system that I have now retired because of the power consumption. A single Ryzen R9 CPU can outperform them with less power draw.

Yes, they are getting a bit old now, but I guess it really depends on what you are using them for. Are you sure TDP is rated at base frequency? It seems to be at boost, actually.

My gaming PC draws a ton of power. The 2080 Ti alone will easily draw 380 W, and the CPU is overclocked, which definitely draws a lot of power too; I would guess around 200 W under load, maybe more.

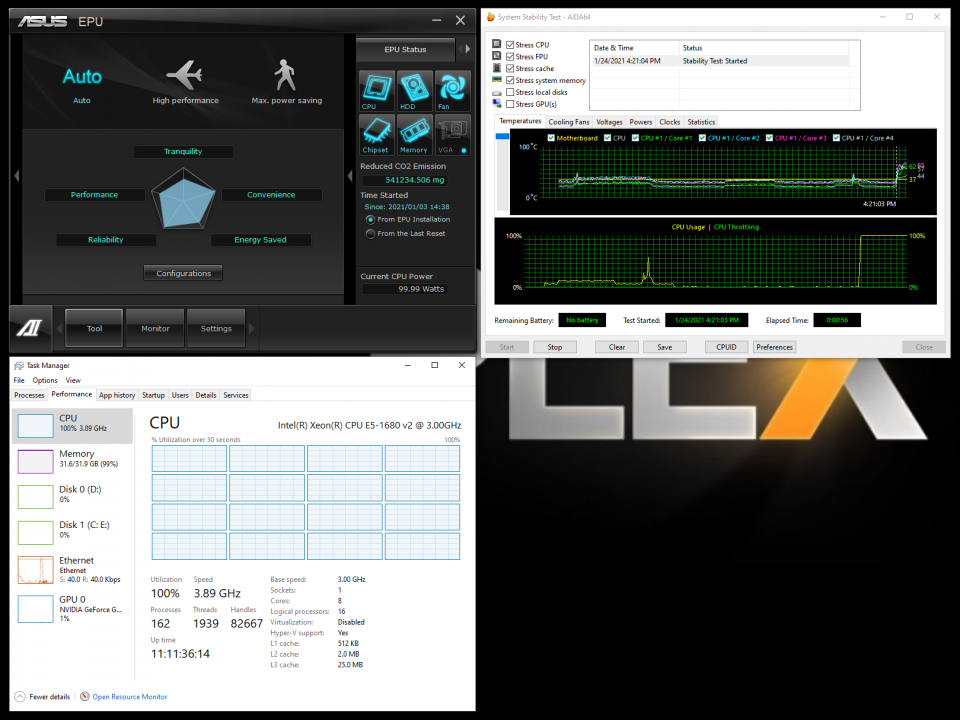

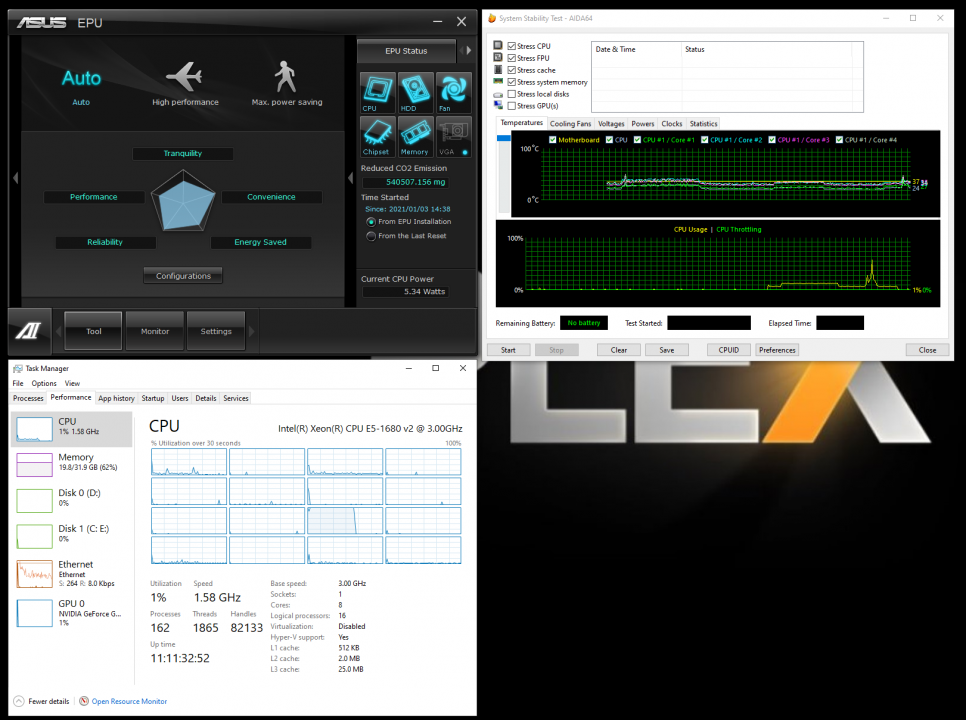

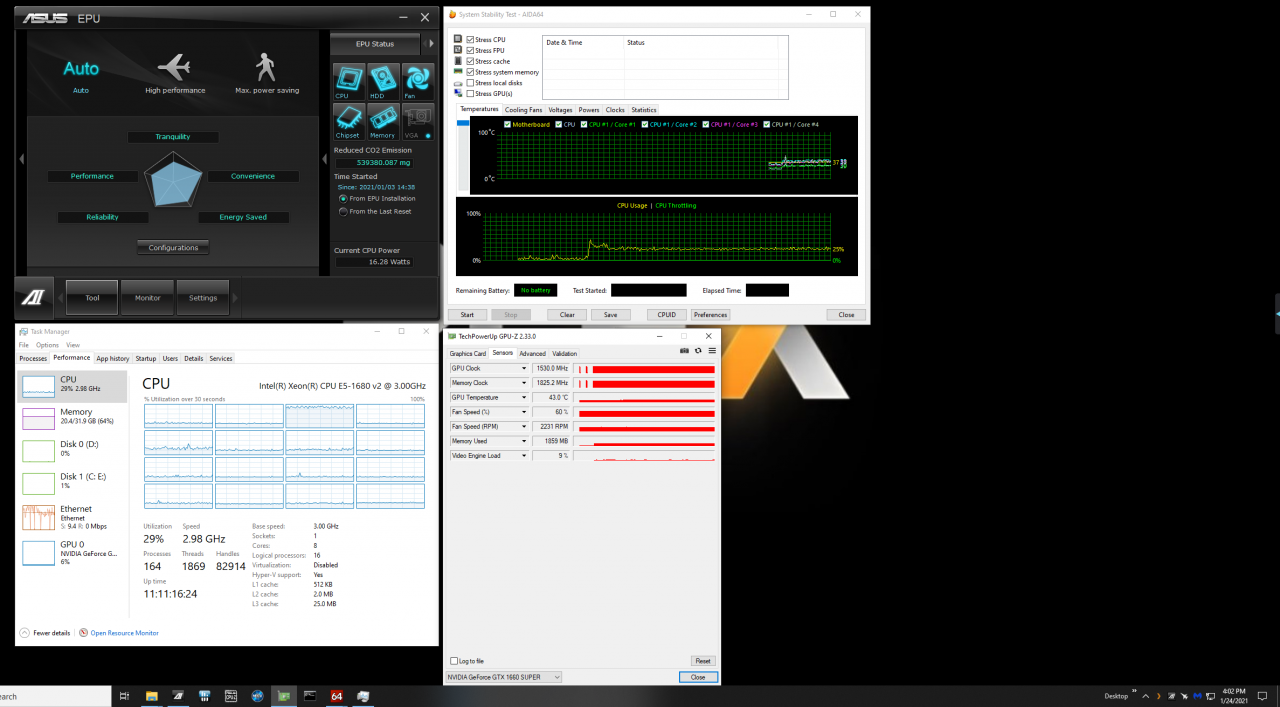

My servers are pretty power efficient (the CPUs, anyway), although I don't have a Ryzen system to compare them to. I figured I would take a look at my Plex server since it is also using an E5-1680V2 (stock TDP is 130 W). I don't have it overclocked right now. This doesn't measure total power consumption, but for a quick test I looked at 3 scenarios: (1) idle at 5.34 W, (2) transcoding a 4K video to 1080p at 16.28 W, and (3) stress testing at 99.9 W. It doesn't go above 100 W, which really isn't too bad.
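To put those readings in perspective, here's a rough conversion to energy per year, assuming each load were held constant around the clock (which overstates the transcode and stress cases):

```python
# Convert the measured CPU power readings into energy per year (sketch).
# Assumes each load is held constant 24/7, which overstates the
# transcode and stress-test cases.

HOURS_PER_YEAR = 24 * 365


def kwh_per_year(watts: float) -> float:
    """Energy in kWh if `watts` were drawn continuously for a year."""
    return watts * HOURS_PER_YEAR / 1000


readings_w = {"idle": 5.34, "4K to 1080p transcode": 16.28, "stress test": 99.9}

if __name__ == "__main__":
    for name, watts in readings_w.items():
        print(f"{name}: {watts} W -> {kwh_per_year(watts):.1f} kWh/year")
```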

My ESXi server has dual E5-2690V2 CPUs, and the CPU usage is very low most of the time, so I would say they should be using slightly more than double the idle power above, and maybe as much as 50-75 W at most. Both CPUs are never maxed out.

The CPU power usage reported by EPU seems to match the TDP rating pretty closely anyway.

-


Intel Raptor Lake CPUs 5.8GHz boost rumored

in Rumour Mill


Sounds sexy! Hard to beat raw MHz. UE5 seems to love speed, not cores, so this should be great for that engine.