Welcome to ExtremeHW


Posts: 145

Days Won: 5

Feedback: 0%


Posts posted by LabRat

-


2 hours ago, bonami2 said:

Dead mobo, my old Intel DP45SG did that. It would even run overclocked but end up freezing randomly. He had a few trips sliding on the carpet that probably static damaged it somehow...

ESD damage is insidious.

IIRC, ESD doesn't immediately 'kill' a lot of ICs, it 'erodes' and moves elements/ions @ the nano scale.

TBH, I'd been wondering if +5V (pulsed/unfiltered) DC on the ground plane* (the HDMI 'dapter incident) damaged capacitors (or other components) from reverse polarity (and/or 'noisy') current.

*Was it ever verified if the anomalous 5VDC was actually on ground, or was it just overriding the PSU's +12VDC?

I've had a couple cheapie FP USB bays directly bridge +5VSB (motherboard-allocated to USB) to +5V, which caused a similar symptom, but only on +5V rail-connected devices.

It might be worthwhile to further-investigate the suspected cause for the failure: the HDMI adapter. I know you have a DMM (somewhere).

-


8 hours ago, bonami2 said:

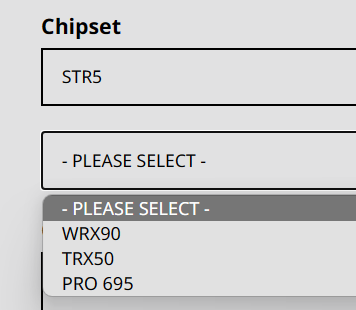

The lack of PCIe x16 slots is deeply disappointing, however.

At least you get: 3*PCIe x16 slots for Multi-GPU and 4*PCIe x4 M.2 slots.

'Not seeing if they're all SoC-connected, and Gen5.

Though, the math says: "Yes; they're CPU-direct Gen5"

x16-lane PCIe slots x3 = 48 lanes

x4-lane M.2 slots x4 = 16 lanes

48 + 16 = 64 lanes
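For anyone who wants to sanity-check that tally, here's a minimal sketch in Python. The slot counts are the ones listed above; the names (`slots`, `total_lanes`) are just made up for illustration, and the result is only the sum of what the slots would need, not a confirmed platform spec.

```python
# Slot layout as described in the post: (lanes per slot, number of slots).
# Names here are illustrative, not taken from any board documentation.
slots = {
    "PCIe x16 slots": (16, 3),
    "M.2 x4 slots": (4, 4),
}


def total_lanes(layout):
    """Sum lanes-per-slot times slot count over every slot type."""
    return sum(lanes * count for lanes, count in layout.values())


for name, (lanes, count) in slots.items():
    print(f"{name}: {lanes} x {count} = {lanes * count} lanes")
print("Total:", total_lanes(slots), "lanes")
```

If every slot really is wired at full width, the total matches the 64 lanes worked out by hand above.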

5 hours ago, RageSet said:

Why did AMD cut down the PCIe 5 lanes so much compared to the TR 7000 Pro platform? Then they allow TR 7000 Pro CPUs on TRX50, but again you don't gain any of the lanes and you lose four memory channels. This smells rushed, last minute.

Yeah... half the lanes (but double the bandwidth) is disappointing.

I suppose it is further delineation of product lines (between TR7k and EPYC Genoa)

Also, it's worth keeping in mind:

-EPYC Genoa can only allocate 64 of its 128 Gen5 lanes for CXL use

-This is officially TRX50. (Don't forget WRX80 was separate and more-featured vs. TRX40.)

-WRX90, is still to come:

-


26 minutes ago, pioneerisloud said:

We became wobbly willionaires. So we figured to celebrate with some cake at the coffee shop at the local mall.

@LabRat getting sucked back into the coffee shop as he was trying to pose in front of his creation.

And I don't know who let the riffraff in at the local fair, but man they sure did litter. -_-

Themesong?

Wobbly Things...

-


IDK how to properly convey it but, this fan seems like the 'crosspoint' of many designs across other industries.

-a 'no duh' design; one that no-one'd been willing to 'put together' for the consumer/enthusiast-facing market.

Very attractive, both aesthetically, and technically.

TBQH, this looks like the first 'axial blower' that I'd be willing to pay $20+ for.

Any chance EHW might get some review samples?

-


IMO, just like 'cholesterol' in Heart Disease

these amyloid proteins are tangential to the problem, not the cause.

Glad to see there's now some research to back up that "uh, duh?" on my part.

-


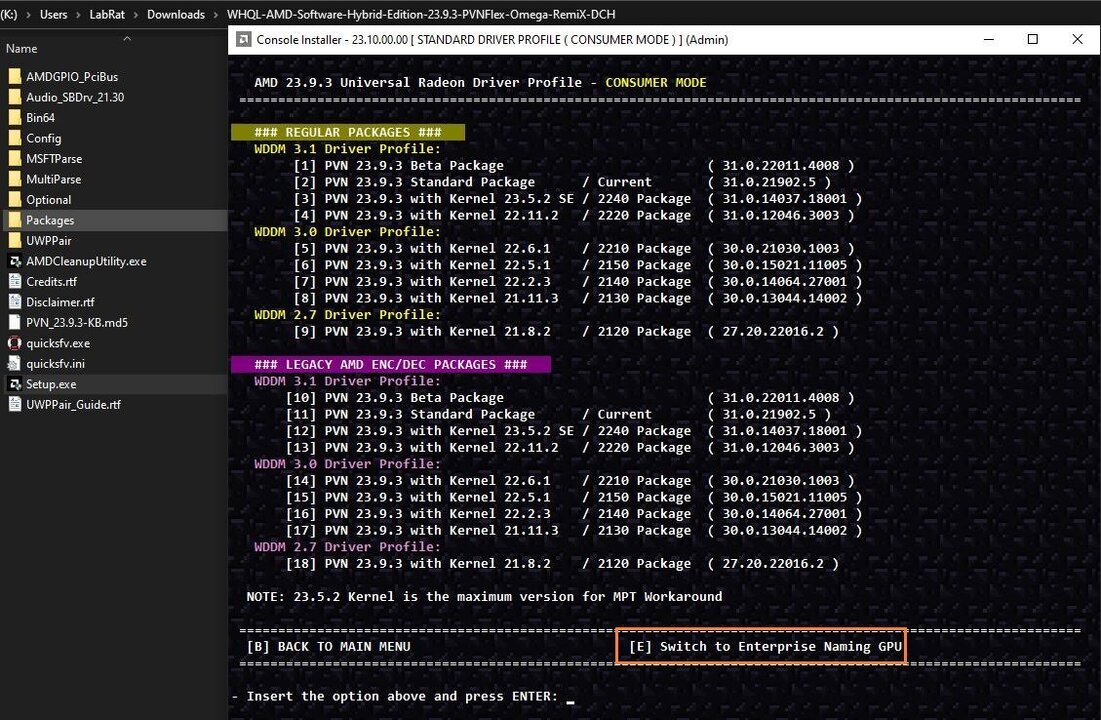

Have you tried 3rd Party Drivers?

Through several updates, I've had excellent luck with RdN.ID / AmernimeZone (NimeZ) drivers on my Vega(s).

RdN.ID @Sourceforge WHQL-AMD-Software-Hybrid-Edition-23.9.3

Amernime Zone - AMD 3rd Party Drivers

WWW.AMERNIMEZONE.COM

3rd Party AMD GPU Drivers with Customizable Tweaks

It'll also let you choose 'branding' and 'feature level' through the setup-configurator.

-


5 hours ago, damric said:

Alrighty I finally got a chance to work on this again after having to put it on the shelf for a while during the Team Cup.

Looks like TRIXX allows higher clocks via higher power limits, but it's a bit sketchy on whether it will crash or not once I apply. If the bench can start, it runs flawlessly though.

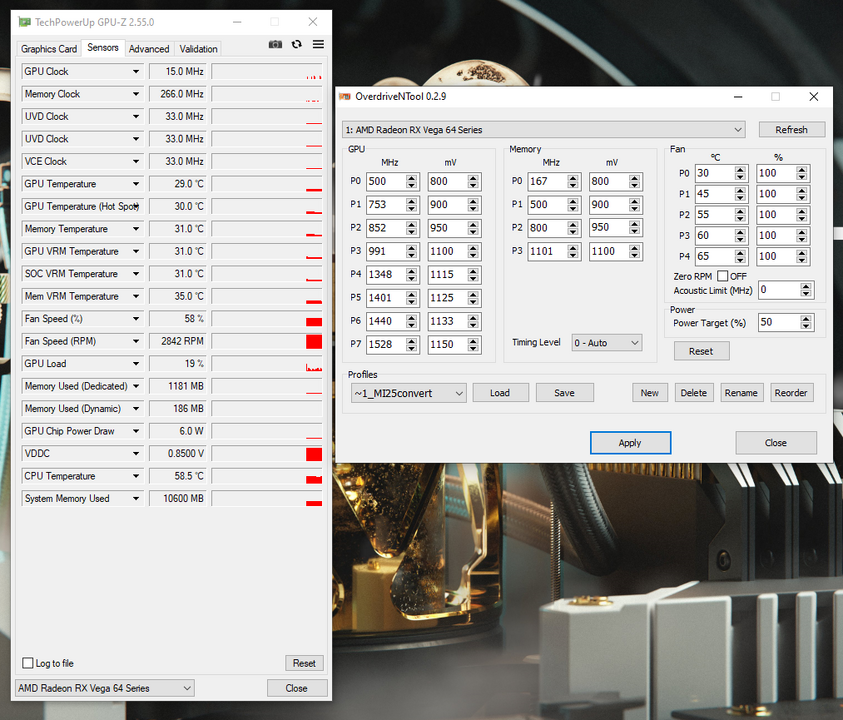

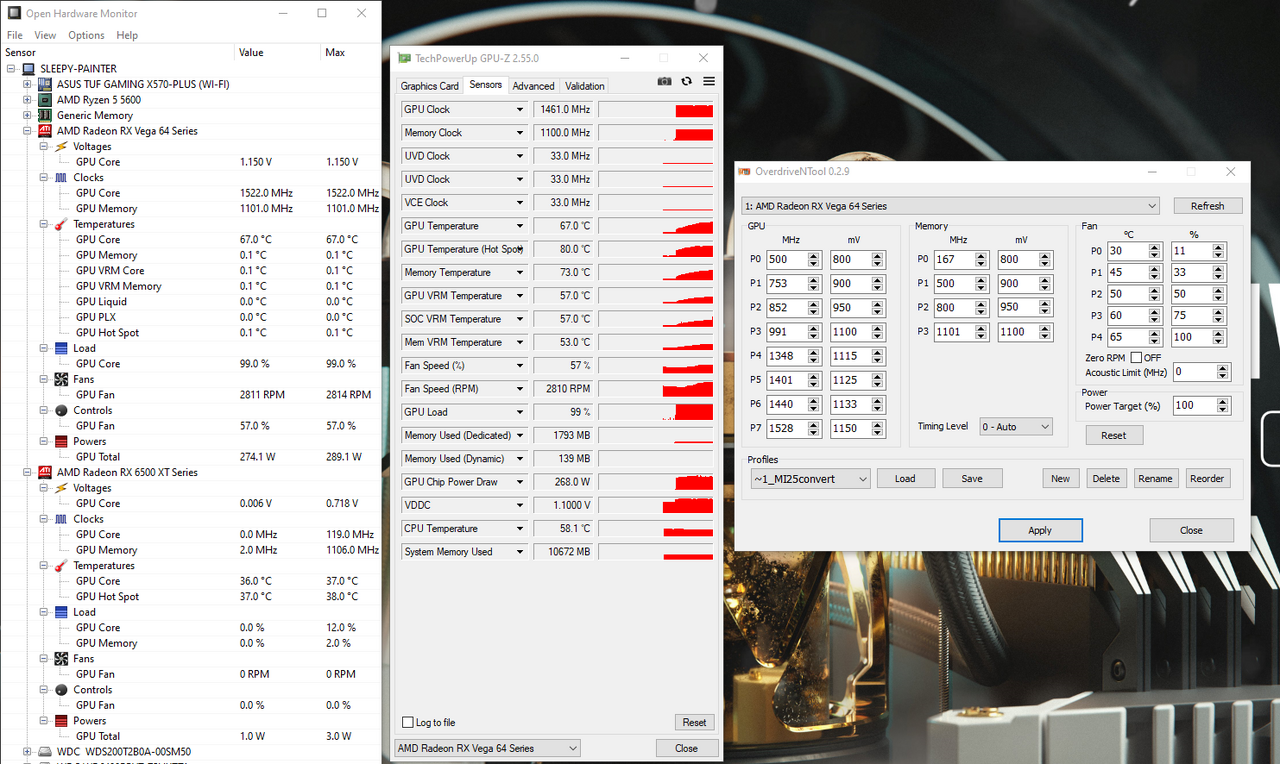

It's been my experience that the 'fall back' P-states, have to be customized too. AFAIK, these MI25s are the only Vega that *needs* tweaking beyond P6 and P7, for max Watt/Perf.

I mean, it makes sense. We're basically 're-binning' Vega 10 16GB into another product line

note: Samsung HBM2 seems to run 1+GHz no problem, even with a 1025-1050mV 'HBM voltage floor' set.

I played STALKER: Anomaly for *hours* yesterday, sustaining 1390-1528MHz core*, at less than 1100mV.

220ish-260ishW sustained, 280ish peak.

If I push voltages to 1100mV or higher, I can get 1600+, but hotspot or HBM temps eventually cause instability.

(Also, having 2 fewer power phases trying to push 300+W might be related. I've seen 350W+ pulled from my card, before overheating)

*After using OverdriveNtool to 'find' stable clocks, I used RadeonWattman to create a profile for STALKER Anomaly that locks it in P-states 5-7

(some areas in the game don't 'load' the GPU, even though the clocks are needed for smooth framerates)

Any idea on how to edit the vBIOS's clocks/voltages? I'm very close to finding my card's "sweet spot", would like to make it 'permanent'.

PS: I've got a 6+8pin MI25, on the way; I'm very curious what the differences between the 2 MI25s are.

-

7 hours ago, Avacado said:

Wow, that's almost an impressively-low score from me.

My Titan *was* watercooled; the original owner couldn't get it working, so I scored it CHEAP a few years ago.

(he mis-mounted the waterblock, and shorted it. Thankfully, non-destructively).

So, instead of a proper GTX Titan OG cooler, it has an eVGA Titan Black cooler on it. Which, required some 'gorilla mods' with a pair of pliers and a knife before mounting.

Some of the pads/cold plate don't make PERFECT contact, so I didn't want to OC and push it. @pioneerisloud got me to try anyway, and she's not a good OCer with such meager cooling.

Still, a nice card in my collection.

HWbot ever do CrossfireX/SLI challenges?

I've got 1GB G92s enough for 3+way SLI, and at least 2 working RX580s left hanging around.

-

1 hour ago, Bastiaan_NL said:

It didn't make it due to 3 other subs being higher. @Sir Beregond posted his sub after you and knocked yours out

Cool, Thank You

No complaints. Just assumed I'd messed something up.

-

Did I miss something, or is the name a binary pun on an extension-expansion to AVX2?

AVX

AVX2

AVX-512

AVX10 ("2" in 'binary'?)

-

As a learning experience for me:

What happened to my Titan run?

I know I had to edit it due to a browser mishap re-populating my old sub's details.

-

New Fuzzy Donut!?

Sweet.

-

3 hours ago, bonami2 said:

Egpu are kinda dead because of streaming. We just need better network availability worldwide. Why carry a laptop when you can carry a foldable tablet with a keyboard and mouse!

Perhaps, you find the Latency acceptable, I do not.

(Even, over a simple and modern LAN or WirelessLAN)

I believe that's something of a sentiment shared by a portion of the Retro-Console community, too.

See: FPGA Retro Consoles

You have a point, nonetheless.

For those who either "don't know any better" and/or "cannot tell the difference", 'streaming' is an apparent, simple, and affordable solution.

From my PoV (in researching eGPU's progress over the years),

I'd say the biggest consumers of eGPU enclosures were

professional artists, graphic designers/animators, CAD workers, and Pro media editors.

That's a market, and one that can/will easily shell out $$$$.

Note: Even for Pros, Amazon AWS (aka: streaming) allows a similar utility.

Yet, I still think this is more a cost-availability (and publicity-marketing) thing:

If no AIB/manufacturer is willing to make 'affordable' eGPU 'kits' (and advertise them),

then it'll never become popular.

Ex: Many (Apple) users cannot even conceive of upgrading their machine without just buying a whole new one. Why would they think 'plugging in an upgrade' would be possible?

IMO, Intel's just trying to stir-up attention on ThunderBolt 5,

and their License(d) Partners' "Features and Abilities".

Sadly, Thunderbolt has almost always been relegated to 'premium' offerings. Rarely, if ever, will you find Thunderbolt on a Sub-$800 (MSRP) laptop.

To me, Intel's attempt @ PR here is entirely self-defeating.

-Advertise something that will only become popular if affordable

-Yet, relegate that "advertised feature set" to only premium units.

Edit:

Quote: USB4 as implemented on Windows 11 laptops, however, looks to be a little more clear cut as Microsoft seems to require advanced PCIe support for any laptop with a USB4 port. That hopefully means every new Windows 11 laptop with a USB4 port must be compatible with Thunderbolt 3 devices besides working with USB4/Thunderbolt 4 devices. Where this gets a little odd is that Microsoft cites USB4’s requirement for PCIe to mandate it, but the actual USB4 spec makes it optional.

I just used USB4 on an AMD Ryzen laptop and it's amazing! | PCWorld

WWW.PCWORLD.COM

Not gonna lie, I legit got giddy plugging a Thunderbolt device into an AMD-based laptop with real USB4 support.

USB4 Systems PCIe Tunneling Support | Microsoft Learn

LEARN.MICROSOFT.COM

Verifies that systems support PCIe tunneling on all exposed USB4 ports.

-


19 minutes ago, pioneerisloud said:

Fan speed % = exactly that though. The percentage the fans are spinning at, COMPARED TO (you're forgetting that part) maximum RPM. What is maximum RPM for the fan you're using? Does your card's VBIOS know? The RPM's are accurate. % is not.

Even on stock cards, I've seen this same behavior sometimes with aftermarket AIB coolers. Completely bone stock cards, but "max out" around 60% fan speed........but yet insanely high RPM's. Yeah. Ignore fan speed % unless its actually a stock and accurate fan installed.


Your simplification-explanation describes the precise reason I'd misled myself:

Duty Cycle % would also fit that definition.

Any magnetic or resistive load cannot exceed 100% Duty Cycle, regardless of its rated rotational speed, torque, or thermal dissipation.

Honestly, I just didn't think 'it was that "dumb"'.

It makes a lot more sense to me for it to be a duty cycle but,

I can see the utility in it being simply compared to the known equipment's RPM rating.

Ex: if your card is 'stock' and the fan % is low, that'd be a sign the bearings are failing, there's air-restriction, or dust has built up on the fan (slowing it).

Edit:

Quote: I know the % reading in GPUz is based off PWM signal, which isn't present if the stock fan is gone.

Incorrect. Replacement is also a 4-pin PWM fan, pin-for-pin adapted to the GPU's fan header.

Also (AFAIK), RPM is off a 'pulse signal' from a hall-effect sensor/equivalent* in the fan.

(*IIRC, 'back EMF' can be used as a sorta in-situ hall-effect sensor.)
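To make the two possible readings of "Fan Speed %" concrete, here's a rough Python sketch. It assumes the tach line gives 2 pulses per revolution (typical for PC fans, but an assumption here), and the 5000 RPM reference is a hypothetical stand-in for whatever value the vBIOS/PPT actually holds. All function names are made up for illustration.

```python
def percent_from_duty_cycle(duty_cycle):
    """Reading A: the % is just the PWM duty cycle (0.0 to 1.0) as a percentage."""
    return round(duty_cycle * 100)


def percent_from_rpm(measured_rpm, reference_max_rpm):
    """Reading B: the % is measured RPM compared to a fixed reference max RPM."""
    return round(measured_rpm / reference_max_rpm * 100)


def rpm_from_tach(pulses_per_second, pulses_per_rev=2):
    """Convert tach pulses to RPM (2 pulses per revolution is assumed)."""
    return pulses_per_second * 60 / pulses_per_rev


# A fan driven flat-out that tops out near 3000 RPM:
rpm = rpm_from_tach(100)                # 100 pulses/s at 2 pulses/rev
print(percent_from_duty_cycle(1.0))     # reading A
print(percent_from_rpm(rpm, 5000))      # reading B, hypothetical 5000 RPM reference
```

Under reading A a fan at full duty always shows 100%, while under reading B a swapped-in fan that's slower than the reference can never reach 100%, which would fit a reading that "maxes out" well short of it.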

-


2 minutes ago, pioneerisloud said:

Most common RPM for a 120x38mm "bloweymatron" fan would be 3000 RPM though. So just going by averages, seems accurate enough to say 3000 RPM is "probably" your highest RPM the fan can do. It's at near 3000 RPM in your screenshots at 100% set (60% reading). I know the % reading in GPUz is based off PWM signal, which isn't present if the stock fan is gone. Sooooooooo yeah.

Being PWM, I was worrying about the "Fan Speed %" being derived from "PWM Duty Cycle"

rather than a comparator to a 'fixed, expected value' in vBIOS/PPT.

One of those times where 'other experience' can mislead one away from the answer

Like part numbers on Brake Calipers, they're *not* unique for each side....

Now we know.

-

1 minute ago, pioneerisloud said:

I mean I did tell you hours ago on Steam, the RPM reading is accurate still. But hey, glad you got it sorted.

Well, when you have no clue what the 'rated' RPM of my kit-bashed Dell workstation fan is...

(and no pics of the hub, for datasheet lookup)

-

2 hours ago, Avacado said:

Tried removing the Acoustic limit? Set to 0MHz and see. Though it already looks like the fan speed is maxing per your sensors tab. I can't tell if power target relates to fan or the card. Maybe try 100% to see if there is a difference.

*Edit, I think max fan speed should be somewhere over 4000RPM, so you might be right.

This might help, roughly goes over the settings:

OverdriveNTool [v0.2.9]: Download, Settings, [Undervolt]

MININGSOFT.ORG

Overdrive NTool: Download from the official site, change timings using the example of AMD 580 and Vega. In this tutorial, you will learn how to set up and use OverdriveNTool v0.2.9 and newer.

Good ideas, TY for linky.

Edit: Tried the acoustic limit to 0. Same behavior.

Edit 2: Tried moar powah (for funsies) and actually under a load, instead of manually setting 100 for all fanstates.

Kudos for getting me to try that. Not 'the solution' but using the VegaFE PPT, I can actually get more than +50% power now.

Also related, is the fact I'm using a 120mm x 38mm axial blower, instead of a 'real' Vega's centrifugal fan assembly.

Quote: The fan speed percentage is based off the stock blower fan so it doesn't report that properly if you're using a different fan, just pay attention to RPM. It also changes based on what bios or PPTable you use.

On mine with a GTX 480 fan 100% reports as ~80% IIRC with WX 9100 PPTable.

Underlined offers an explanation as to why I previously saw it max @ 50% but,

this latest PPT I'm using off an unlisted/unverified VegaFE (air) card goes to 58%.
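If the % really is "measured RPM vs. a reference max from the PPT", then the reference can be back-calculated from the readings. A quick Python sketch, assuming the fan tops out near 3000 RPM (the approximate figure from the screenshots discussed in this thread) and that it reaches the same top speed under each PPT. The function name is hypothetical, and the outputs are only implied values, not anything read out of a vBIOS.

```python
def implied_reference_rpm(measured_rpm, reported_percent):
    """Back out the reference max RPM that would produce the reported percentage."""
    return measured_rpm / (reported_percent / 100)


measured = 3000  # approximate top speed of the swapped-in fan (assumption)
for label, pct in [("earlier PPT", 50), ("VegaFE (air) PPT", 58)]:
    ref = implied_reference_rpm(measured, pct)
    print(f"{label}: {pct}% reading implies a reference of about {ref:.0f} RPM")
```

A different implied reference per PPT would line up with the quoted remark that the percentage changes with the bios or PPTable in use.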

-


14 hours ago, ENTERPRISE said:

I agree with the sentiments. eGPU was extremely niche and even with TB5, I do not see people flocking out to buy eGPUs. Ultimately it is about the demand for the product, not the underlying technology, you can improve the technology all you want but that does not mean you are creating more demand.

I've been looking for eGPU solutions for laptops, since before it was cool. /s

No, seriously.

As a teenager, every few-months I'd research the topic. In my 20s, I'd research it a couple-few times a year.

[I felt like I was "contributing, at a distance" as I watched the very first 'solutions' come into being, and then Retail eGPU enclosures coming to market]

When the eGPU concept got popular w/ 'Content-Creators/Pros' I was deeply saddened to see it 'relegated' to Intel-Apple Thunderbolt.

In both my personal and generalized opinion(s)

eGPU is/was 'niche' due *entirely* to the expense and specialized requirements of ThunderBolt.

or, physically modifying your laptop for externalized access to an mPCIe or (PCIe-equipped) M.2 Slot

and, using 'kludge-like' (or overpriced) 'import' riser-adaptors.

USB4 has a chance to change that, and make eGPUs a 'normal thing'.

(all Win11 'sticker-certified' laptops *must* support USB4 PCIe and DP operation)

Topically, though...

Gotta agree that TB5 isn't gonna do a single thing to help/hinder eGPUs. Full-implementation USB4 will.

(I also think Intel is having regrets @ sharing TB3's spec w/ USB-IF. They're trying very hard to not let USB4 cannibalize ThunderBolt)

-


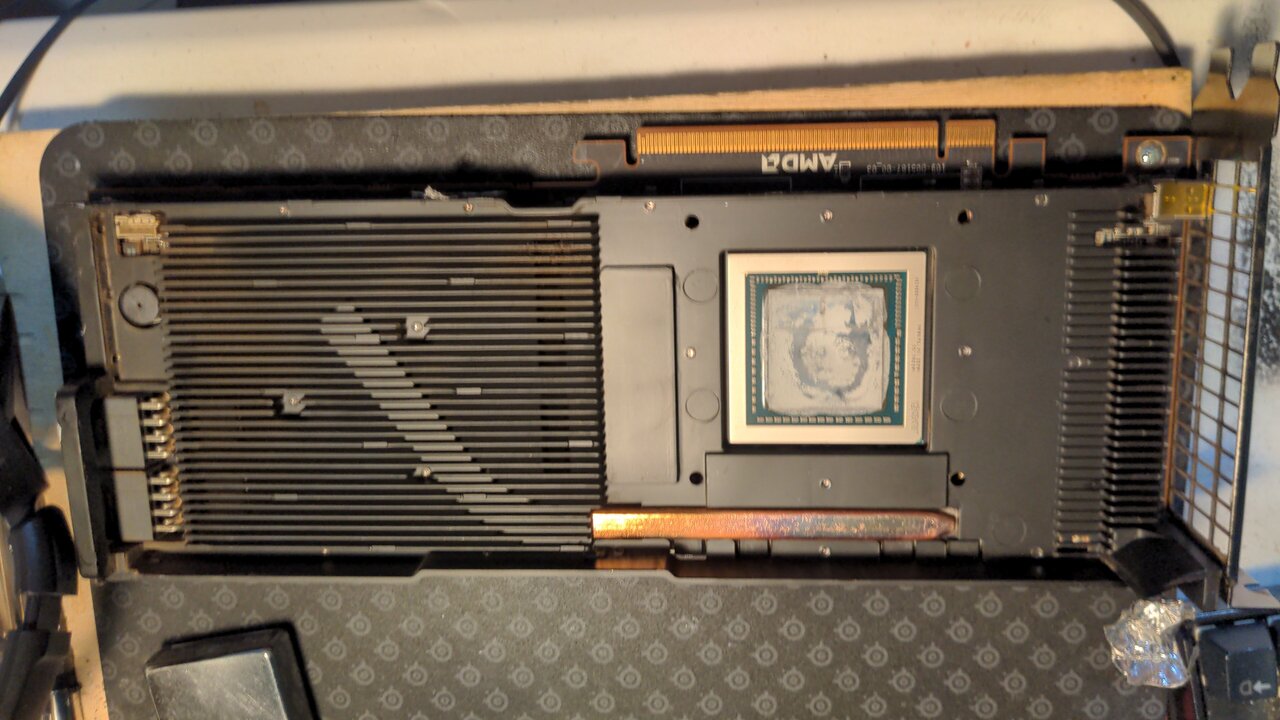

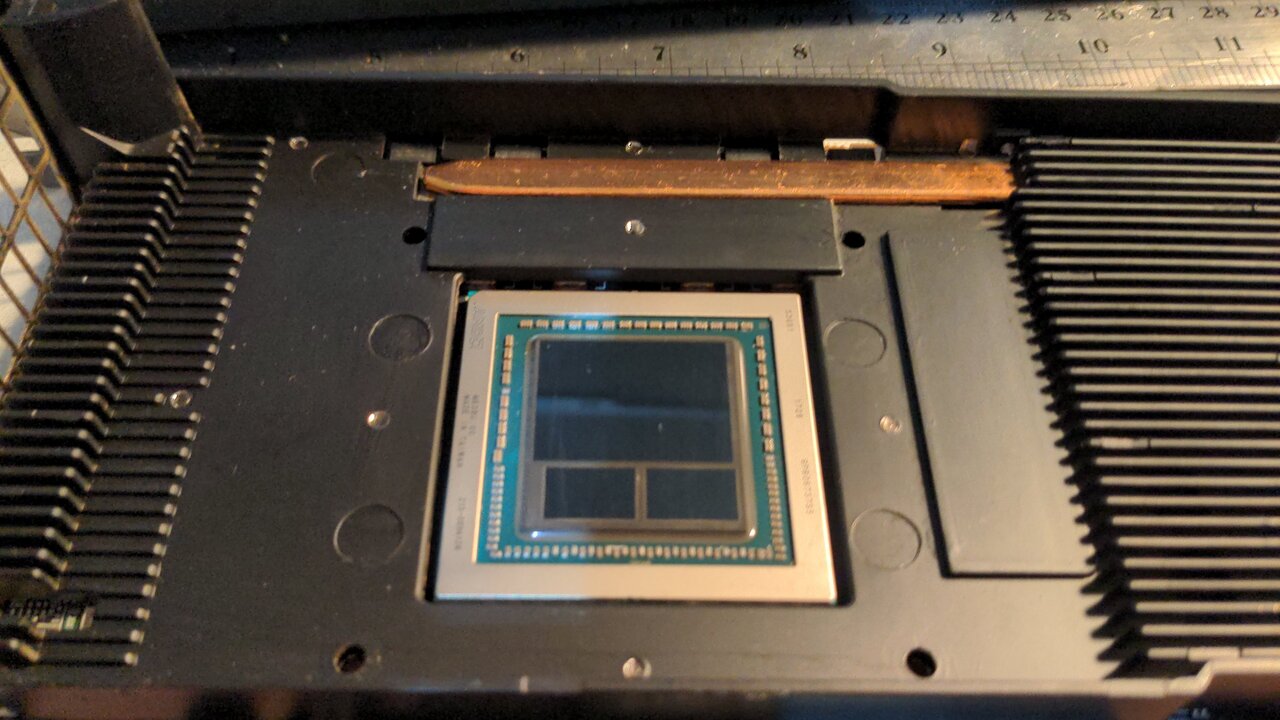

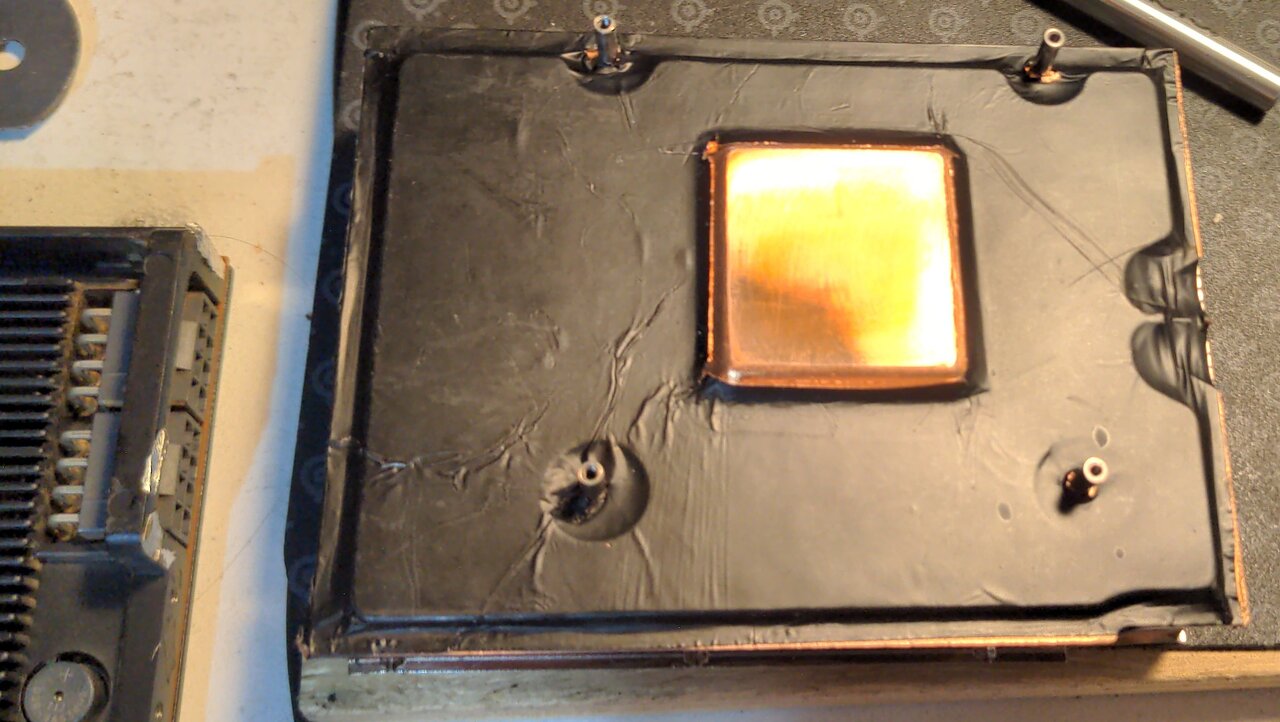

Radeon Instinct MI25, flashed to WX 9100, using VegaFE(air) PowerPlayTables. The card is powering and controlling the 4-pin 120x38mm (ducted) server axial-blower.

Note: The Axial Bloweymatron is of lesser power draw than the stock Vega56/64 blower

Windows 10x64 Professional (22H2)

GPU-z 2.55.0 / OpenHardwareMonitor 0.9.6

I have had this issue across both AMD-official and Amernime Zone drivers (multiple versions)

I'm not even sure if the fan isn't *actually* at 100%. (It's kinda hard to tell by noise.)

I would greatly appreciate any input, suggestions, or solutions.

For those that are aware of my 'adventures' with this card, I just re-pasted it, and modified the heatsink.

Great improvement! Now, I just wanna make sure that I'm getting the most cooling 'ability' possible.

Thanks!

-

Update!

After "Mixed-CrossfireX Adventures"

and

finding a 'cheap' AIB/aftermarket cooler for a 65x65mm mount-pitch GPU

Both, went about as smoothly as I'd expect...

I decided to just re-paste and re-mod my MI25's cooling.

'stock' TIM - looks like it dried up some, and wasn't a very good application.

Clean n' pretty - Vega 10, and its two stacks of Samsung 8GiB HBM2

The Mod - Heatpipe > Copper | Allotropic Carbon > Heatpipe

Used a sheet of Thermal Adhesive-backed Allotropic Carbon-coated Copper Foil

AKA: Space Magic (NVMe) Heatspreader

TIM is 4+ year old MX-4, that I re-homogenized using a drill and paper clip

(Same TIM is on my 5600. lol)

Previously, the card would crash if sustaining over 1400MHz core @ 1100mV (190-210W sustained, 245-250W peak)

Now, I can sustain 1528MHz core @ 1100-1150mV (240-250W sustained, 280W+ peak).

'light loads' like Quake 2 Remaster (@ 240fps) don't even get to 45°C now. The fan rarely if ever becomes audible.

Note: PPT loaded (and edited) from Vega FE 16GB Air .rom. There's still room for improvements.

Lastly (sorry for no pics @TM) - I re-oriented the fan.

Instead of the 120x38 axial bloweymatron being 'semi-flat' against the card (making a 3+ slot card)

I set the fan 'up on its end', and taped a 'plenum' around it (making a non-symmetrical* 5+ slot card).

*With the shorty 6500XT in my X570-gen4x4, everything 'fits'

The Vega's fan 'rests' on my HDD cage (doubling as a strain-relief support), and the shipping tape plenum angles to Vega's heatsink; juuust 'touching' the edge of the 6500XT.

[will edit-in pics later. "If it looks stupid, but works; it ain't stupid" needs pics to properly convey]

Now. I have a question.

Why are some 16GiB MI25s using 2x8-pin power (like mine)

and

Some are using 1x6-pin+1x8-pin

????

If I wasn't concerned that a 6+8 MI25 might clock worse, I'd buy another for matched CrossfireX/AMD MGPU

[Wow... was gonna post pics of an eBay listing I asked that question to. He pulled the pic that showed the power inputs. Guess he wants to 'move' those cards...]

Edit 1: Went ahead and sent the eBayer a 'liquidation-pricing' offer on 2.

-


7 hours ago, pioneerisloud said:

Cheapazz Chipz is coming up soon.

This interests me greatly

Look forward to having excuse(s) to get my workspace/homelab set-back-up.

-


Almost forgot to post again...

LabRat810`s 3DMark - Fire Strike (GPU) score: 9848 marks with a GeForce GTX Titan

HWBOT.ORG

The GeForce GTX Titan scores 9848 marks in the 3DMark - Fire Strike (GPU) benchmark. LabRat810 ranks #276 worldwide and #6 in the hardware class. Find out more at HWBOT.

-


2 hours ago, pioneerisloud said:

@LabRat you forgot to post your score here. -_-

LabRat810`s 3DMark2001 SE score: 2354 marks with a GeForce4 MX 4000 PCI (64bit)

HWBOT.ORG

The GeForce4 MX 4000 PCI (64bit) scores 2354 marks in the 3DMark2001 SE benchmark. LabRat810 ranks #6692 worldwide and...

Gotta show off your run with the MX4000.

I don't even know how many hours I'd been up for.

I submitted that and promptly PTFO'd

-


5 hours ago, pioneerisloud said:

I just let them know, the spreadsheet should get fixed.

@LabRat we've got you down for an MX4000 now. Holding you to at least that one sub. Got 13 days.


I suppose I'm gonna have to dual-box the machine it's in @ my desk... It wouldn't be fair to pass it thru to a Win9X/XP VM via PCI-PCIe bridge in my Daily Driver R5 5600 build.

I literally forgot that I'd already built the thing; I have a different case for it, but this one appears to 'work'.

-


The Mechanical Keyboards Club

in Keyboard/Mice

Posted · Edited by LabRat

99% agreed.

the 1%: I can kinda 'see' being upset @ the base-packaging. Though, depending on marketing/pricing, I can also see the vendor/manu's PoV. That's probably the most affordable way to bulk-package them.

IMO, the 'proper' way would be layered-in cheap molded-plastic trays, or cut-out closed-cell foam. However, doing so drastically affects the density and cost-efficiency of freight (at scale).

TBQH, as long as every switch works, you're (typically) never seeing them (once keycapped)...

Overall, I too would be very-appreciative of the community-collectable tin.

Edit:

I went ahead and actually read what this is all about.

Seriously?

The details in the reviews paint a picture of a fairly well-run operation, yet were used to support saying they were slow?

IMO here,

USPS is responsible for the lacking tracking, not The Vendor.

I'd be giving benefit of the doubt, as I've had this kind of thing happen multiple times with multiple Vendors/Indiv.sellers.

Note: This is also 'expected behavior' for semi-DropShipped purchases; actually coming from overseas on regular shipments and handed-off to USPS.

Unless The Vendor was within (reasonable) driving distance, I don't see the problem.

[Ex. I've "RMA'd" in-store w/ Monoprice in SoCal]

Funny, I'm actually interested in purchasing from this vendor, 100% due to "attempted bad PR"

(and I don't even need keyswitches... Tho, do they have metal keycaps?)

Edit 2: Oh, darn; no fullmetal keycaps.

Only Keycaps they have listed: