
Posts posted by Alastair


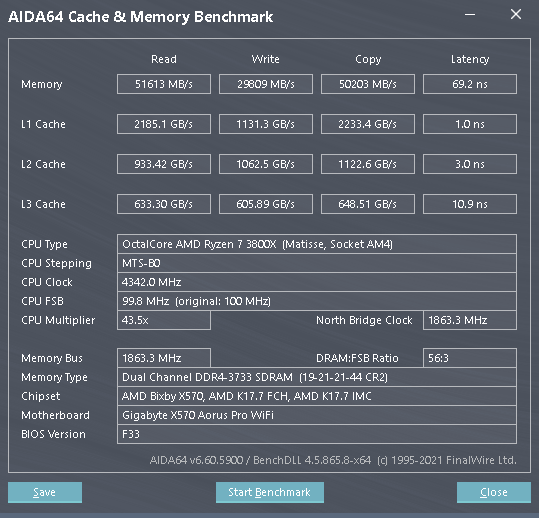

So after tweaking and tinkering, with a lot of trial and error, for most of this week and the entire weekend, I must say I am rather disappointed with my results. After managing to boot 3800MHz I REALLY thought I might have a chance at stabilising 3800. But it turns out I can't even get this memory to stabilise at 3666. What can I expect from C-die, I suppose.

I went the route of tightening up my secondaries and tertiaries. I couldn't even get my primaries to budge.

In the end I managed to improve my time in DRAM Calculator's Membench easy preset from 143 seconds down to 128 seconds.

Geek3 memory score from about 5500/6700 to 5900/6900.

Not much improvement in AIDA.
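To put those before/after numbers in perspective, here is a quick sketch of the relative change. It only uses the figures quoted above; the helper name `percent_change` is made up for illustration, and the two Geek3 figures are treated simply as "first" and "second" since the post doesn't say what each one is.

```python
def percent_change(before: float, after: float) -> float:
    """Signed change from `before` to `after`, as a percentage of `before`."""
    return (after - before) / before * 100.0


# Figures quoted in the post (approximate, as stated there).
membench = percent_change(143, 128)       # seconds, lower is better
geek_first = percent_change(5500, 5900)   # score, higher is better
geek_second = percent_change(6700, 6900)  # score, higher is better

print(f"Membench time:      {membench:+.1f}%")
print(f"Geek3 first figure: {geek_first:+.1f}%")
print(f"Geek3 second figure:{geek_second:+.1f}%")
```

So the Membench run is roughly a tenth quicker, while the Geek3 gains are in the single digits.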

I am sitting here and seriously considering selling these sticks and buying some OEM B-die kits. Just to see if I can get lucky on some cheap OEM ram.

-

27 minutes ago, pioneerisloud said:

Been running GPU's since GeForce 2 / Radeon 7000 days myself. I too, have NEVER had an AMD / ATI driver flat out kill hardware. That 196.75 driver did ruin probably about a dozen G92 cards in my house though back in roughly 2007 / 2008 when that driver released.

However, I DO have issues with "current" AMD drivers for legacy cards like the HD7970 and R9 290x. They completely gimp perfectly capable older cards anymore. That does kind of suck. My 5700XT works beautifully though, as does my RX 580's. I just find it frustrating a 290x doesn't work in 2022 with official drivers, but yet an R9 380 or 390 works just fine. It's the same dang card!

GCN came out with the 7970. There's no reason some GCN cards get updates and others don't when they're functionally the same. Meh...youtubers rant about this enough as is though..... Maybe AMD will change that with a future "final" driver. I doubt it though. I really probably should get off my butt and install the latest modded drivers for those cards on the systems they're in. Supposedly the modded drivers make RDR2 and Crysis Remastered enjoyable on even a 7970. By all means, it "should" be capable enough to play modern games (on low / medium). /rant

Oh yes I agree with you. I was pretty shocked when I heard they were basically dropping everything from Fiji right the way through. It was pretty insane. I think Terascale had longer driver support than GCN 1-3.

-

1 hour ago, The Pook said:

Yes I have verified this. When I set 1.35V in BIOS I GET around 1.38V as reported by software and SLIGHTLY higher than that according to my DMM. At 1.38v GET my XMP (3600cl18) isn't even stable. At 1.33V SET (1.35V GET) xmp is stable and with it I am currently sitting at a very unstable 3800 cl18 that I now need to figure out how to stabilize.

My first step is I am going to try increasing procODT. And then I will try decreasing the drive strength values (lower resistance as I understand it) Hopefully I might find stability.

-

I also have a thread on hardwareluxx as that seems to be the most definitive thread on C-die across the interwebs. There are a lot of good results, and people getting great results, but no real guidance on what the various settings do and how the various settings correlate to one another.

If I can get 3800 cl18 stable I will be really happy! Because it would mean around 54GB/s to 55GB/s and 65ns, which I would be really chuffed with. Not B-die speeds but still respectable for Ryzen.
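For context on that 54GB/s to 55GB/s target, a rough sketch of the theoretical ceiling it sits under. This assumes a standard dual-channel DDR4 setup with a 64-bit (8 byte) bus per channel; the function name is just illustrative, and real measured read bandwidth always lands below this number.

```python
def peak_bandwidth_gbs(data_rate_mts: float, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (decimal GB).

    data_rate_mts: transfers per second in MT/s (e.g. 3800 for DDR4-3800)
    channels:      number of memory channels (assumed 2 for a desktop AM4 board)
    bus_bytes:     bytes moved per transfer per channel (64-bit bus = 8 bytes)
    """
    return data_rate_mts * 1e6 * bus_bytes * channels / 1e9


peak = peak_bandwidth_gbs(3800)
for target in (54.0, 55.0):
    # Share of the theoretical peak that the hoped-for figure represents.
    print(f"{target:.0f} GB/s is {target / peak:.0%} of the {peak:.1f} GB/s peak")
```

In other words the hoped-for figure is already a large share of what DDR4-3800 dual channel can deliver on paper.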

2 hours ago, ArchStanton said:

I saw your post in the big thread over at OCN. As I think you are already aware, I am in no position to provide any guidance in the RAM OC'ing arena. I can only cheer you on from the sidelines. I too hope to learn the "mystical arts" without simply copying/pasting the settings of others in, how did you describe it, "willy nilly" fashion.

-

I have managed to get 3800 18-21-21-21-44 2T to boot into windows!

Now any ideas how to stabilise it? Samsung C-die doesn't respond to voltage. And at 1.35ish I am already at the point where C-die starts to negatively scale.

I have a wad of options in my BIOS and have no real clue where to start. Any ideas?

I don't know what various RTT, and CAD bus settings do. What do I do with procODT. I am busy reading the c-die thread on Hardwareluxx but I still don't have much clue on what I am doing once I have primaries down. I have only ever bothered with primaries in my ddr3 days I never played with the secondary stuff.

-

3 hours ago, pioneerisloud said:

Don't forget 196.75's that killed entire fleets of G92 cards......

NVIDIA retracts WHQL-certified “GPU Killing Drivers”

BRIGHTSIDEOFNEWS.COM

Recently, nVidia posted a WHQL driver “Release 196.75”. The driver brought WHQL-certified support for nVidia ION, recently renamed...

Seriously, both sides have driver problems. Pick whichever card fits your budget, has the features you want, and just be careful what drivers you use lol. Goes for both sides of the fence. I had experienced G92 failures with the above driver, and never again did I just blindly trust a driver on release from either company. Lost too many cards that day.

Well in all my years between amd and nvidia. Rankine's, Currie's, Tesla's in various G80 and GT200 flavours, Kepler's and Maxwells, Evergreen's, Northern Islands, Pirate Islands and vega's. I've never had an AMD driver kill a card. I can confidently say that between my years of experience with both teams. Although during my G80 days I was still in school and was getting my driver updates off of DVD's that my local computer magazine (PC Format) had on their disks. So I probably missed 196.75. I also did not own a Kepler during 320.18 so I missed that one as well. But boy did OCN blow up when 320.18 came out I remember that.

-

On 03/04/2022 at 06:06, bonami2 said:

As an enthusiast that used Eyefinity Surround VR and 4K 120hz

The nvidia panel is 10000x more stable than that piece of crap amd thing that crash and never hold any setting

from 7950x2 crossfire to rx 480 rx 580 and now my 6800xt.

I miss my 1070

Ways overcomplicated going around the amd setting.

Remind me of Xfire and Raptr thing

That was a long long time ago. These days it almost always just works.

-

On 03/04/2022 at 10:22, PCSarge said:

the problem with both camps is everyones had different experiences, i had a bad nvidia driver release destroy 2 brand new cards and a motherboard back in the G92 days. neither nvidia or evga would replace them after 2 weeks of ownership.

however the motherboard company who wasnt at fault did replace thier board, and i buy thier boards to this day because i got customer service

people get used to certain things on both sides, its a never ending topic where everyone is going to answer differently.

Remember the dreaded 320.18? Bricking Kepler based 780s and titans? Damn.

-

On 03/04/2022 at 00:12, ArchStanton said:

I've only begun messing around with it (Radeon software). Minor annoyance: needing to turn off their (data mining I think) "customer experience program" after each update. Also, does it offer the ability to save multiple, or even better application specific, overclock profiles? I notice it "runs home to momma" and defaults back to no overclock whatsoever any time any part of the system has a hiccup. It would be nice to have specific one click profiles for various benchmarks/games. It may have this functionality already and I am simply unaware, as I have not delved deeply into all the available settings yet.

As far as I know you can save application specific OC profiles.

Go to global graphics and then you see all your installed applications. Select a game or application you want to tune and click on it. You can see all the graphical settings you can adjust for the app. There will be a wrench with the option "tune game performance". That will allow you to adjust the clocks on a per profile basis I think.

-

4 hours ago, ArchStanton said:

I see you have played this game before.

If there are any truffles in Tennessee, I highly doubt Waddles will be motivated enough to seek them out. I'll be lucky if he is willing to eat the multitude of acorns around the house and yard.

Sadly, I don't think it will work that way. When Waddles kicks the bucket, I will be expected to dig a grave and bury him with due "decorum and respect". We have a "pet cemetery" on the hillside opposite the pond.

I'm sure you can find a way to fake a death and a burial.

-

Hey look on the bright side. at least you have a source of farm fresh bacon and pork right at hand.

-

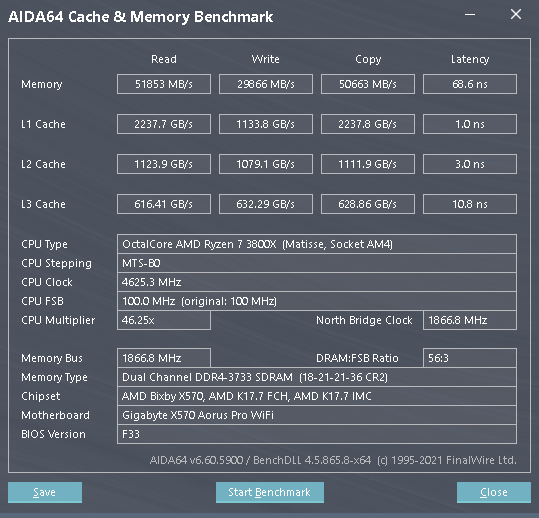

A little more tweaking gets me to 3733MHz at 18-21-21-21-36 2T

-

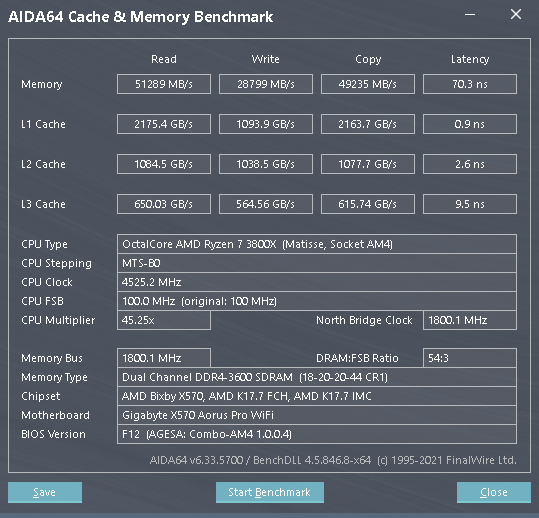

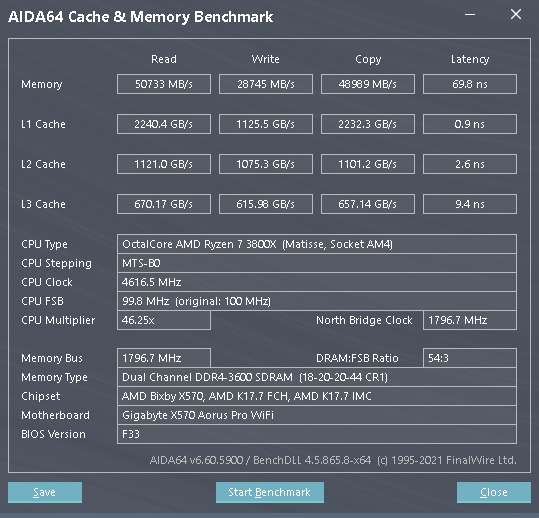

I have been messing around with my memory the past few days.

I have 32GB Avexir 3600 cl18 memory. 4x 8GB sticks. They are Samsung C-dies and are pretty underwhelming to work with. Can't crank the voltages unfortunately as they just fall over. So all I have is 1.35v to work with. I tested 1CR and 2CR at 3600/1800 with my stock XMP settings and saw NO improvement, which I was pretty disappointed by. I figured going to 1CR I would see at least a bit of a latency improvement.

I have managed to get to 3733MHz with an fCLK of 1866MHz. I had to loosen the primaries to 19-21-21-21-44 2T. I did at least manage to break even on latency. I was getting ~69-70ns at XMP and am managing about the same but with at least a bit of an improvement in bandwidth.

I also tried going down to 3200 and trying to tighten the sticks a bit. I could do 16-18-18 which is very common for c-die 3200 kits. I couldn't get cas15 to boot.
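As a rough sanity check on the "break even" point, here is a sketch of the first-word CAS latency for the settings mentioned above. It uses the usual relation that the memory clock is half the data rate, so one cycle lasts 2000 / data rate nanoseconds. The function names are made up for illustration, and this is only the CAS portion: the ~69-70ns AIDA figure is a full round trip that includes a lot more than CAS.

```python
def memory_clock_mhz(data_rate_mts: float) -> float:
    """DDR transfers twice per clock, so the memory clock is half the data rate."""
    return data_rate_mts / 2.0


def cas_latency_ns(data_rate_mts: float, cl: int) -> float:
    """Time for `cl` memory clock cycles, in nanoseconds."""
    return cl * 2000.0 / data_rate_mts


# Nominal data rates and CAS values taken from the post.
configs = [
    ("3600 CL18 (stock XMP)", 3600, 18),
    ("3733 CL19", 3733, 19),
    ("3200 CL16", 3200, 16),
    ("3200 CL15 (did not boot)", 3200, 15),
]

for label, rate, cl in configs:
    print(f"{label:26s} clock {memory_clock_mhz(rate):7.1f} MHz, "
          f"CAS {cas_latency_ns(rate, cl):.2f} ns")
```

On these numbers 3600 CL18 and 3200 CL16 have the same CAS time and 3733 CL19 is only fractionally slower, which fits with the measured latency staying about the same while bandwidth improves.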

So any suggestions as where to from here? The thing that I get PARTICULARLY lost on are the secondaries and tertiaries.

Spoiler: Stock XMP (actually 2CR as GDM enabled)

Spoiler: Stock XMP 1CR GDM disabled

Spoiler: 3733 19-21-21-21-44 2T

-

Looks like a sweet little machine. I love budget builds. Just something about squeezing every penny

-

7 hours ago, J7SC_Orion said:

Yeah, it only works under select circumstances (and only on Asus CH) for the Ryzen 3k series - it is actually counterproductive on Ryzen 5k, at least when I compare my Ryzen 3k with my 5k.

I would focus on your heat generation issue (180W) for improvements.

180 watts was just for suicide runs. That was the 3800X at 4.5GHz across all cores at 1.525v. That was an AVX workload.

Daily driving with my OC it hovers in the 120w range.

-

1 hour ago, J7SC_Orion said:

fMax by the Stilt in the Asus Bios is s.th. slightly different from adding boost in the bios, though related - but it doesn't apply to most other mobos.

180W / 90C / 1.525v ? Wow. Yeah, if you can get temps down into the high 70s / low 80s that should help.

I had a quick oogle about the Google. And it is only for asus boards. But won't help me much as it wouldn't work with the EDC=1 bug. And from what I can gather doesn't increase performance by as much of a margin. I don't seem to see anything similar for gigabyte boards. I wish zen 2 owners got the curve optimiser in a microcode update.

-

54 minutes ago, J7SC_Orion said:

fMax by the Stilt in the Asus Bios is s.th. slightly different from adding boost in the bios, though related - but it doesn't apply to most other mobos.

180W / 90C / 1.525v ? Wow. Yeah, if you can get temps down into the high 70s / low 80s that should help.

Could you provide any more information? I'd be very interested in investigating this avenue to see if there is anything similar for Aorus mobos.

Yeah. My cpu and block are already lapped. I'm using Coolermaster Master gel Maker Nano (gosh what a mouthful Coolermaster and their masters) paste which is = to kryonaut. I've still got some conductonaut LM. Maybe at my next rebuild I'll seal around the package so that nothing leaks out and switch to LM. I don't know what else to do to improve thermals.

44 minutes ago, ArchStanton said:

I think he said he uses the "EDC bug". I am very far from 100%, but I think they may do something very similar to one another and/or possibly conflict?

I think we are talking about different things. But I am using the EDC bug.

-

4 minutes ago, J7SC_Orion said:

Nice work !

FYI, I don't know of your Gigabyte Aorus Pro has s.th. like 'fMax' (per Asus X570 CH8) but that would help a bit w/ single score Cinebench (on Ryzen 3K, not 5K). Also, on my 3950X, I run a very slight undervolt offset (-0.00625) for best results, stock bios settings otherwise.

fMax? Is it like the PBO offset, where you can add 200MHz so the CPU could, in a perfect world, boost 200MHz above the stock max boost? If so then yes. I run an undervolt of 0.05v for my PBO OC. Scores and "effective clocks" scale up until there and start to drop off after -0.05625v. I achieved the all core results with a static 4.5GHz but that required a whopping 1.525v, which was taking me to 180w power and a BLISTERING 90C on water. I am seriously trying to think of ways to improve my thermal performance as much as I can with my current block. I am wondering if taking the dremel out and attempting to cut my own "micro fins" into the block is a good idea. (probably not)

-

I know it's not CPU-Z but I'm pushing some scores in Cinebench too. Trying to compete in the HWBOT Div2 challenge.

I managed to get the fastest 3800x in 1T for R23 @ 1373

5th place 3800x R23 @ 14117

And 2386 R15. I was trying so hard to hit 2412 so that I could take 17th in stage 2

-

34 minutes ago, Mr. Fox said:

I am nearly 60 years old and I don't remember ever seeing things as messed up as they are now. Sleepy Joe and his insane clown posse have really done a number on us. It's going to be difficult to recover from the multi-faceted damage. So yeah, $100 USD buys less than ever.

Yeah so maybe 100 bucks isn't all that bad for some more MHz. I'd have to pay a lot more for a CPU upgrade anyway... Besides, if I can reuse an Optimus block like I have been able to with my Raystorm (gosh this thing must be 9 years old by now) it wouldn't be a bad investment.

-

7 hours ago, Mr. Fox said:

In my case the load temperatures were about 6-8°C cooler with the OptimusPC block versus the Raystorm Pro. Idle temperatures were affected less, maybe 2-3°C. That was with an Intel 10th Gen setup, so it may not translate the same with AM4.

Right now the best IC for DDR5 overclocking is SK Hynix. That was definitely not true with DDR4. Samsung B-die was, by far, the best IC on DDR4 and SK Hynix was not good.

I would love to get my hands on one of those Optimus blocks. They look great. They really do. But I won't. Firstly, I don't think they would ship to the @ss end of Africa. Hardly anyone does these days. And secondly, I would be spending over 100USD for maybe 5c-10c and possibly 50MHz-100MHz more boost clocks. (although admittedly 100USD these days won't get you as much in the PC space as what 100 got you three years ago)

-

47 minutes ago, Avacado said:

I'm assuming the picture in your sig rig is in question. I think fixing your airflow might be more beneficial. Do you have 3 intake fans at the front of that case in addition to the bottom 2 on top of the EK 240? What is your ambient?

There is nothing wrong with my airflow. I've checked how air flows through the system with a smoke machine. It's been set up like this for gosh darn about 8 years now. The only thing that changed was the fans themselves over the years. It is as follows:

200mm CM Force 200 front intake

4x140mm Intake Push/pull on rad Corsair ML140s bottom 280 radiator.

6x 120mm exhaust Push Pull Noiseblocker Eloop B12-4's on Top 360 radiator.

1x ML140 rear exhaust.

With my smoke machine I can see I am just a bit on the positive pressure side of things. The 360 rad is fairly dense while the 280 isn't.

So essentially:

The bottom 280 rad is an intake. (plenty of space at the bottom of the case they aren't choked)

200mm is front intake.

360mm top rad is exhausting

140mm rear is exhausting.

How much airflow do you want?

Me: Yes

To give you an idea, pulling full CPU load and GPU load, I'm pulling around 750w out the wall. Probably 650w to the components that matter. Vega 64 with OC is doing about 450w total board power.

GPU Temps 32c core (7c-8c over ambient) / 42C HBM/ 55-65C hotspot

CPU temps 65C - 70C (40-45C over ambient)
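A quick sketch of the arithmetic behind those figures, using only the numbers quoted above. The helper names are made up for illustration, and the 650w "to the components" value is the post's own estimate rather than a measurement.

```python
def implied_ambient_c(component_c: float, delta_c: float) -> float:
    """Ambient temperature implied by a component temp and its delta over ambient."""
    return component_c - delta_c


def implied_psu_efficiency(wall_w: float, component_w: float) -> float:
    """Fraction of wall power that reaches the components."""
    return component_w / wall_w


# CPU: 65-70C at a 40-45C delta; GPU core: 32C at a 7-8C delta.
print("Ambient implied by CPU:", implied_ambient_c(65, 40), "to", implied_ambient_c(70, 45), "C")
print("Ambient implied by GPU:", implied_ambient_c(32, 8), "to", implied_ambient_c(32, 7), "C")

# 750w at the wall, an estimated 650w delivered.
print(f"Implied PSU efficiency: {implied_psu_efficiency(750, 650):.0%}")
```

Both sets of temperatures point to an ambient in the mid 20s C, so the CPU and GPU readings are at least consistent with each other.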

-

7 minutes ago, J7SC_Orion said:

My first delid was a 5 giggles 1.3v 3770K - bare-die and LM pushed it way beyond that. I delidded a few other Intels since then, but no AMDs at this stage... and unlike @Mr. Fox, I love both the 3950X and 5950X...the latter even does DDR4 4000, but most of the time, I undervolt relevant parameters for DDR4 3800 CL14 for both chips.

Looking forward to LG1700 / DDR5 when the RAM I want releases. I probably delid it and use bare-die, but likely skip LM, just in case I want to freeze it.

I wonder what the B-die of the DDR5 generation will be.

-

5 hours ago, Mr. Fox said:

I think a better water block is definitely worth considering. I had an OptimusPC foundation and an EK monoblock on the 5950X. They both worked well and produced equivalent results. The monoblock was gorgeous to look at, but I tinker with things often enough that I found it inconvenient. For a person that does not do a lot of messing with the hardware, the monoblock is probably the best bet because of enhanced aesthetics with no loss in cooling performance.

I have been a fan of OptimusPC blocks since they were first released. The Signature and Foundation blocks are excellent. I was using a Raystorm Pro block on my work desktop for a long time. I recently replaced it with a Signature block and saw immediate improvement in CPU load temps. Having owned 3 Intel (Signature and Foundation) and 1 AMD (Foundation) CPU blocks, I don't want to use anything else now. I actually prefer the Foundation block with the clear top so I can see if/when cleaning the jet plate is needed. The Signature block looks awesome, but it is solid metal and you cannot tell if the jet plate is collecting sediment or debris without taking it apart for inspection.

Here is a photo of the EK monoblock. I loved how it looked.

How much of a difference would a better block make though?

Are we talking 5c over my Mesozoic Era original XSPC Raystorm? Or are we just talking about a degree or two here? I can't even remember how much of a difference der8auer's bracket made. Cause I am thinking about getting that too.

Currently in a heavy all core workload, like Cinebench or Blender or something like that, at my present OC settings (PBO, 10X, +200MHz, EDC=1, -0.05v) at 4.4GHz boost across 16 threads, I see 65-70C. Or more accurately around 40C-45C delta over ambient. I can't see it improving much.

C-die overclocking


Ok this really is the end of the road now I promise. It's stable enough that I can daily this in my gaming rig.