Welcome to ExtremeHW




Everything posted by ArchStanton

-

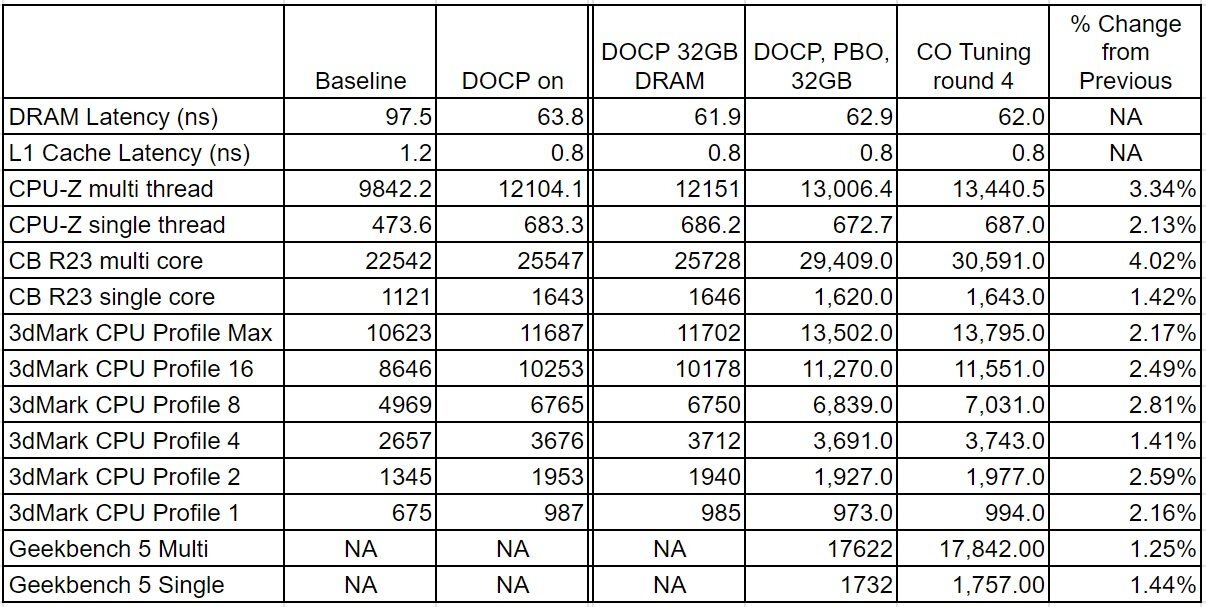

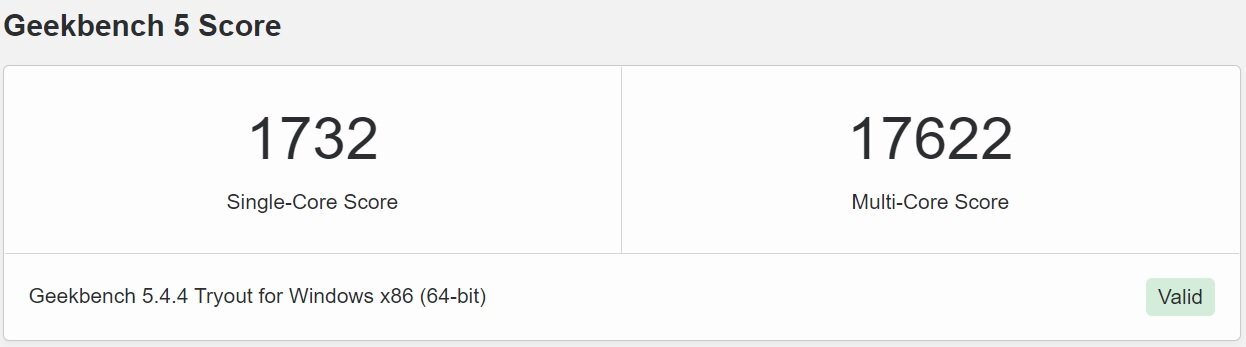

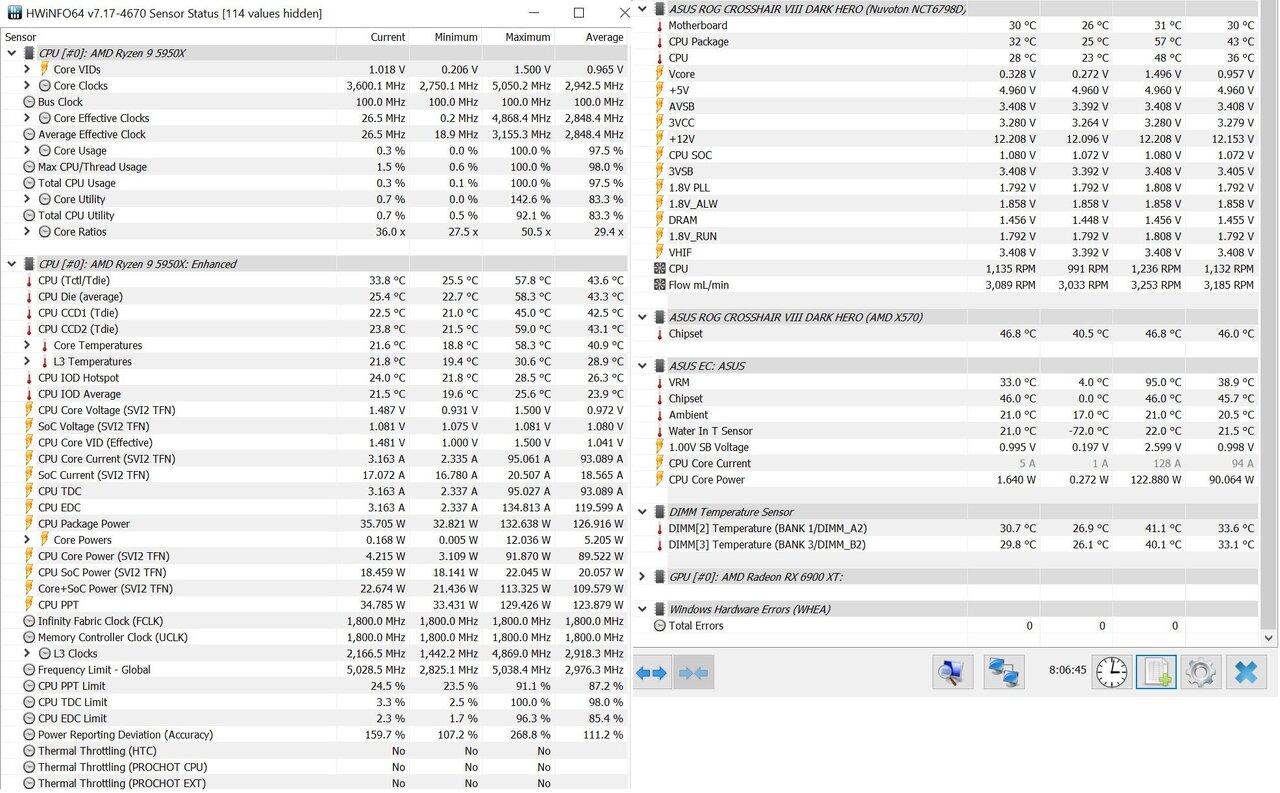

Small update: After a lot of additional reading, I swapped CoreCycler from the default test to 720K and, as others elsewhere had indicated, this exposed additional instabilities. I made the CO offset less negative for about half the cores to regain stability. Naturally, this resulted in regression for all benchmarks.

Currently, my plan of attack is to "tune" Curve Optimizer for the best stable performance I can get from a single core, where "stable" means it passes all benchmarks along with 24hrs of every permutation of CoreCycler I have the patience to throw at it. Then, I will make use of the Dark Hero's "Dynamic Overclock Switching" feature to layer a per-CCX static OC on top of the PBO/CO settings. Once that is accomplished, I hope to start squeezing fclk and RAM.

In the meantime, I've been trying to improve my understanding of the PPT/TDC/EDC limits and their effects on performance (I believe I am correct in stating that these parameters are really only relevant for high thread count workloads). The "auto" PBO limits are 140/95/140 (PPT in watts, TDC and EDC in amps). If I change the setting to "Motherboard", the Dark Hero's default limits are 395/255/200. From what I've read, it seems quite common for the sustained amperage limit (TDC) to be set higher than the short-term amperage limit (EDC), but I've been unable to completely grasp why this should be the case.

I made quite a few runs in CBR23 single last night with CPPC and CPPC Preferred Cores enabled. Under those conditions, Windows always assigns the load to core 1. So, I suppose it has the most aggressive factory V/F curve and will likely have a less negative CO offset when stable than my weaker cores. I tried various combinations of Boost Override and Curve Offset on core 1 last night. I do not consider the values in the table below to reflect the performance of a "stable" system, as I did not run any other stress tests or benchmarks.

-

Forums first comp: CPUz Benchmark

ArchStanton replied to Storm-Chaser's topic in Benchmarking General

-

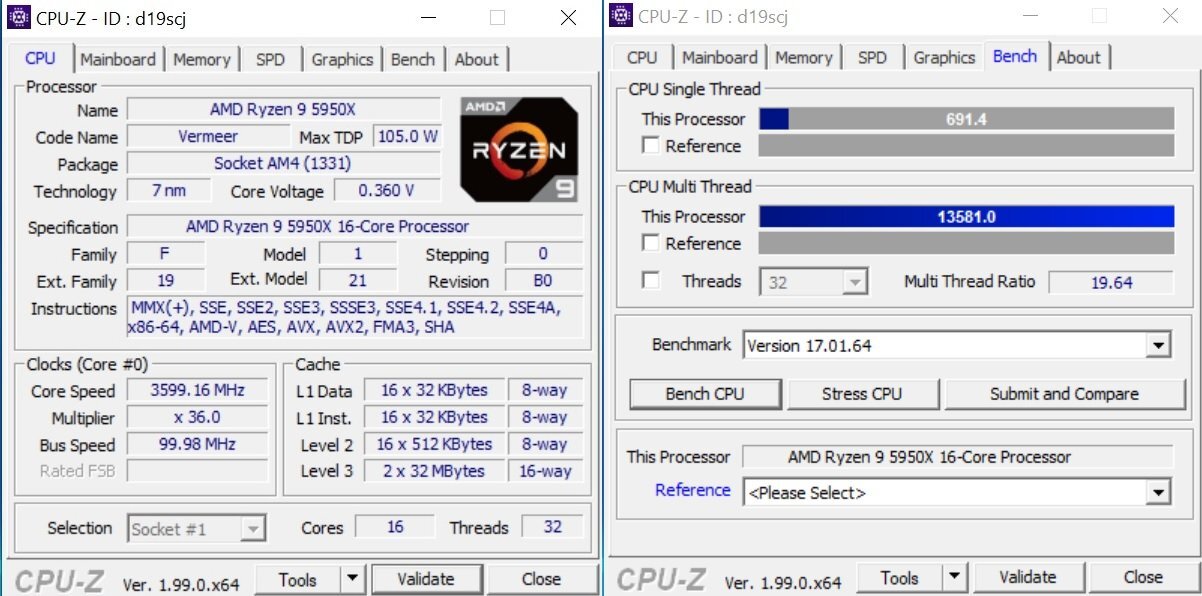

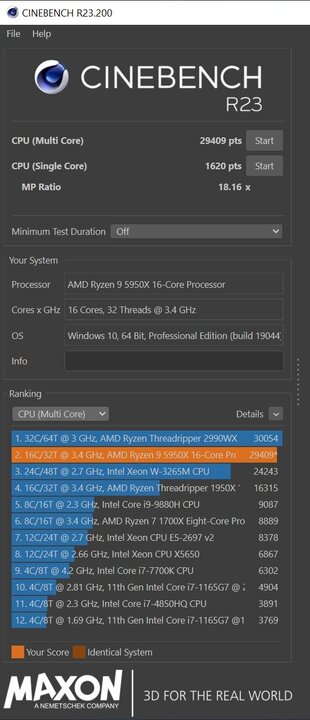

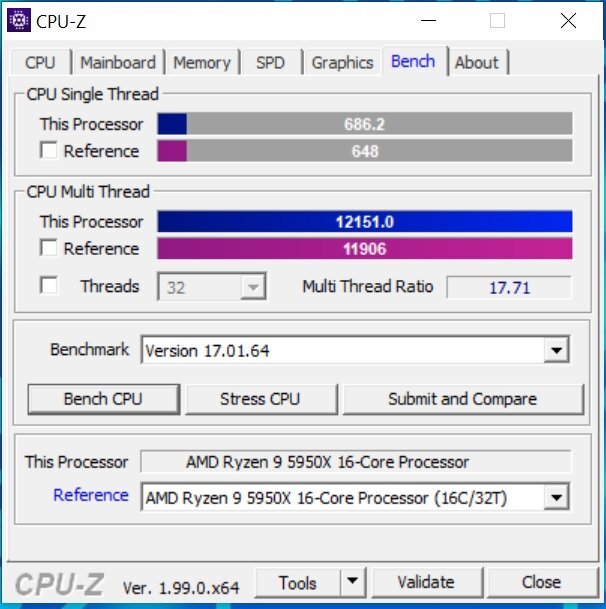

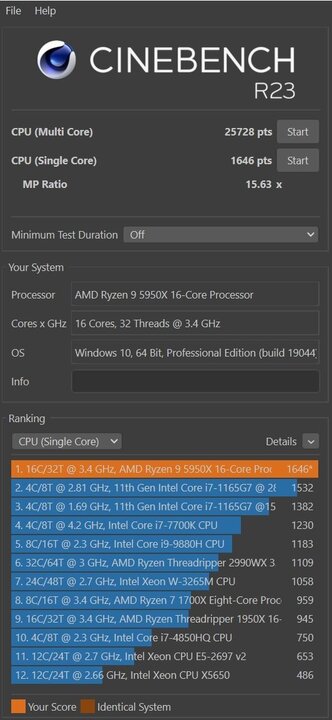

Began with some Curve Optimizer tuning tonight. I don't have it fully dialed in by a long shot, nor is stability truly confirmed, but it felt like progress nonetheless.

-

In general, I like it very well. I could really use a small novel explaining all the BIOS options, but I'm getting there one Google search at a time. I'm very glad there's an option to disable the MB's onboard ARGB effects, as there's a ridiculous amount of ASUS bloat required to control them, and they default to "rainbow puke". It will be interesting to see what kind of fclk she can carry in the days to come.

-

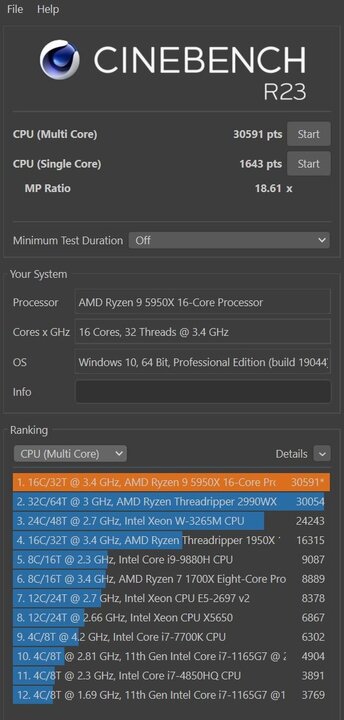

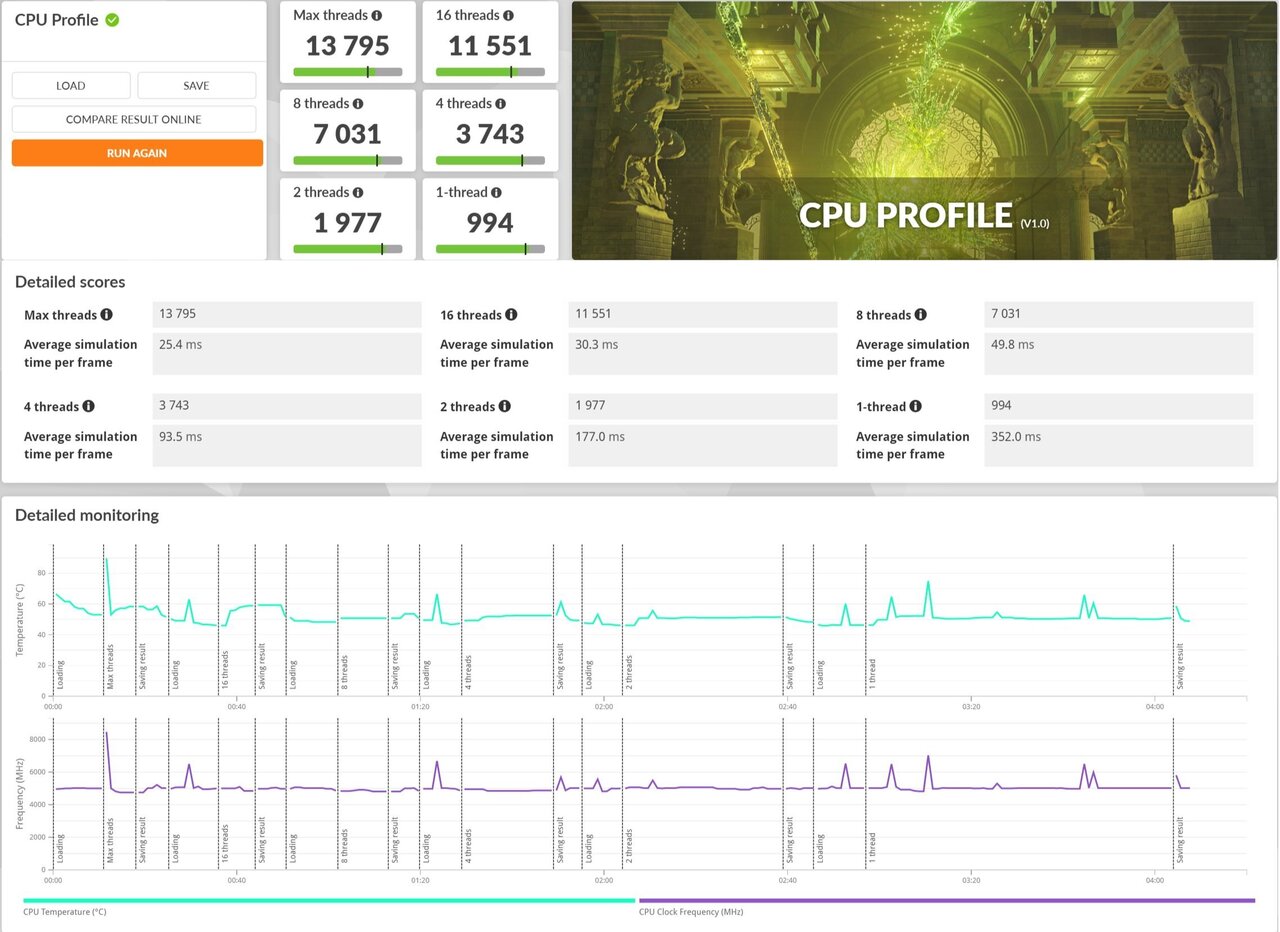

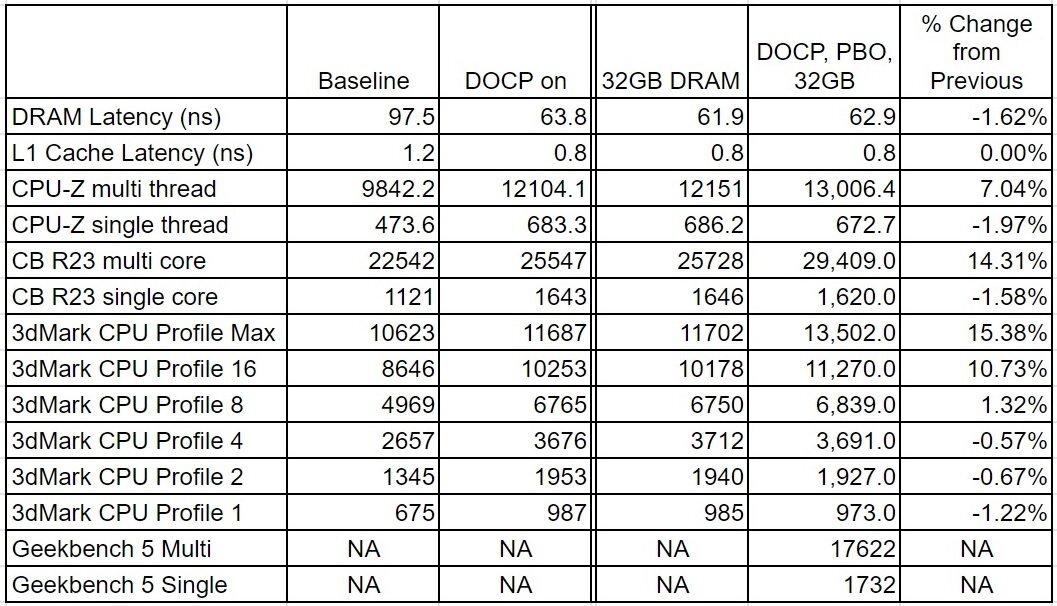

Summary. Significant jumps in multicore performance. Slight degradation in single core performance. Good for productivity and less than ideal for gaming.

-

-

Just curious, do you fellas make a distinction between "distilled" and "deionized" water? If so, do you regard deionized to be overkill?

-

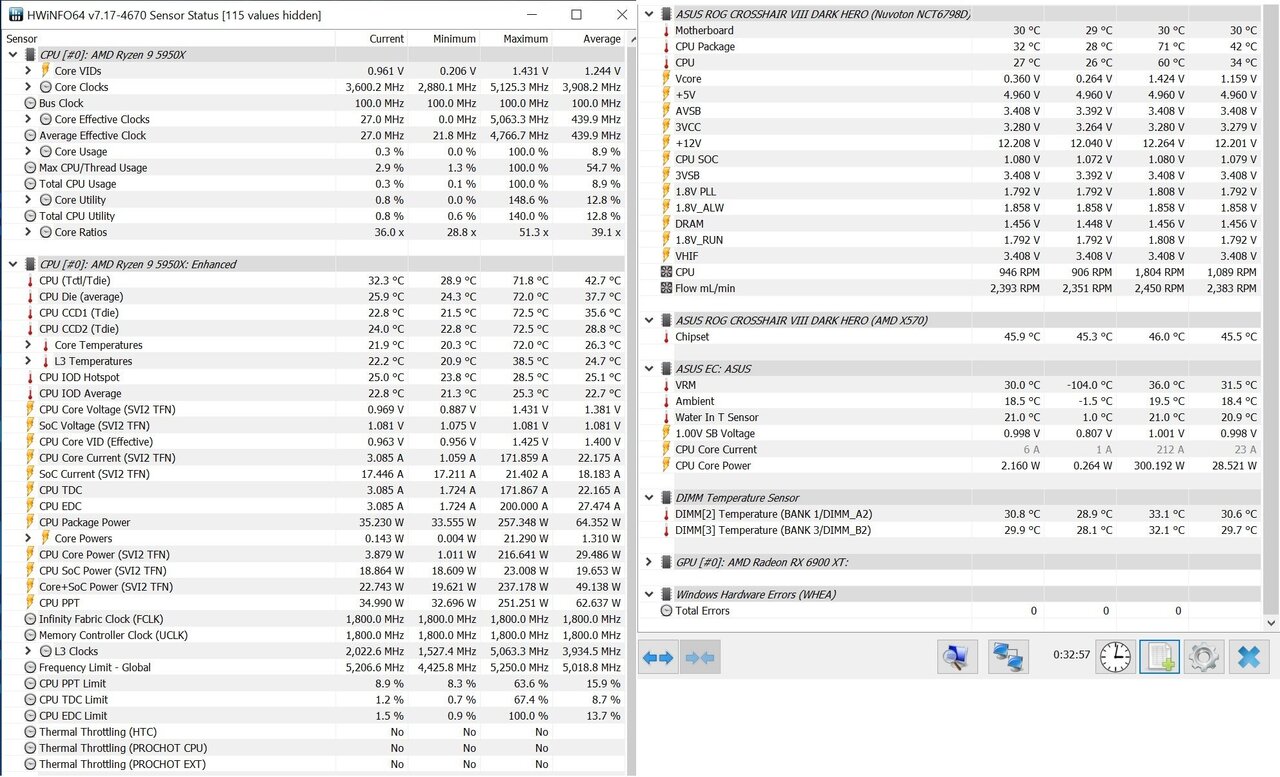

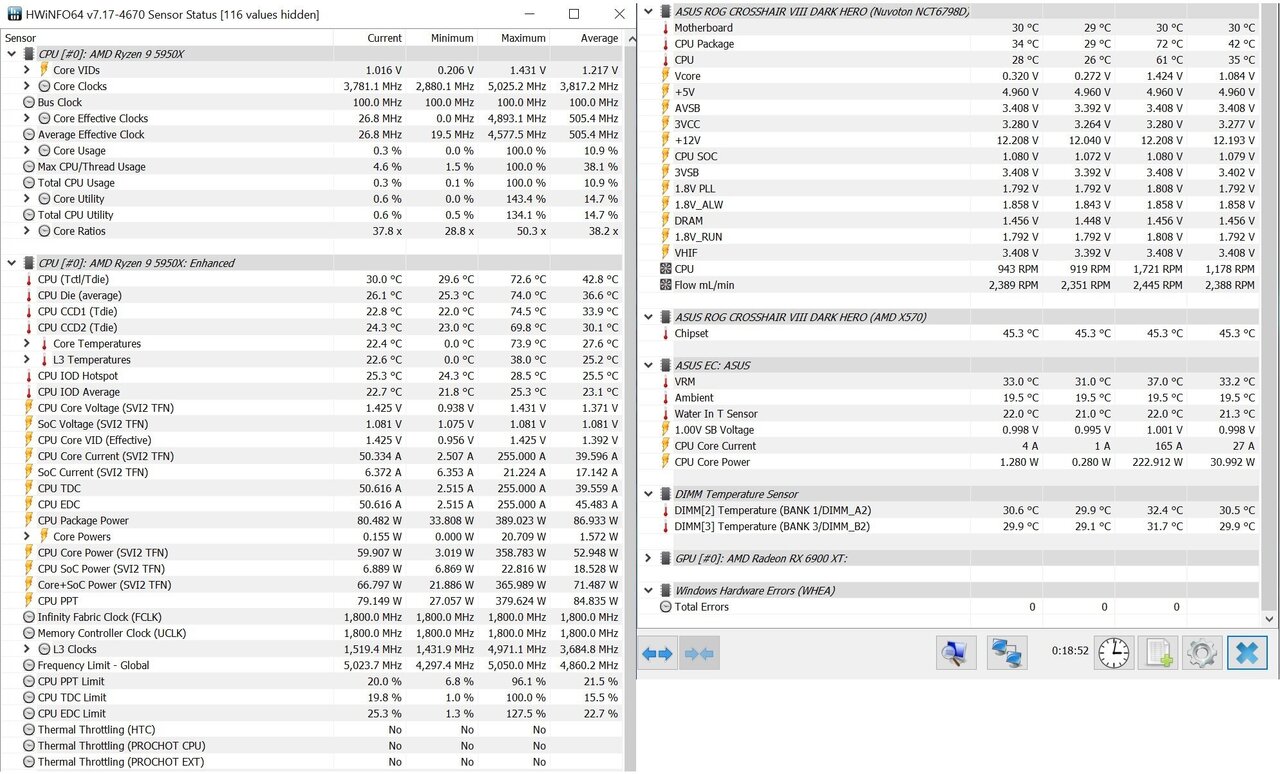

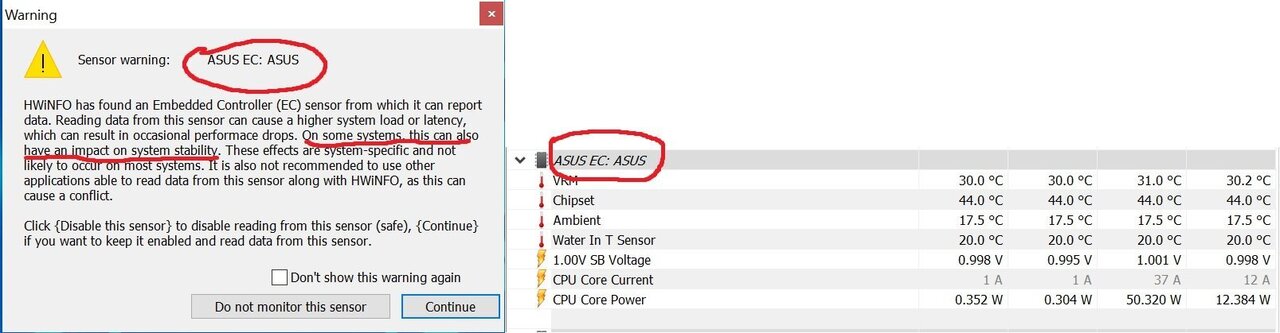

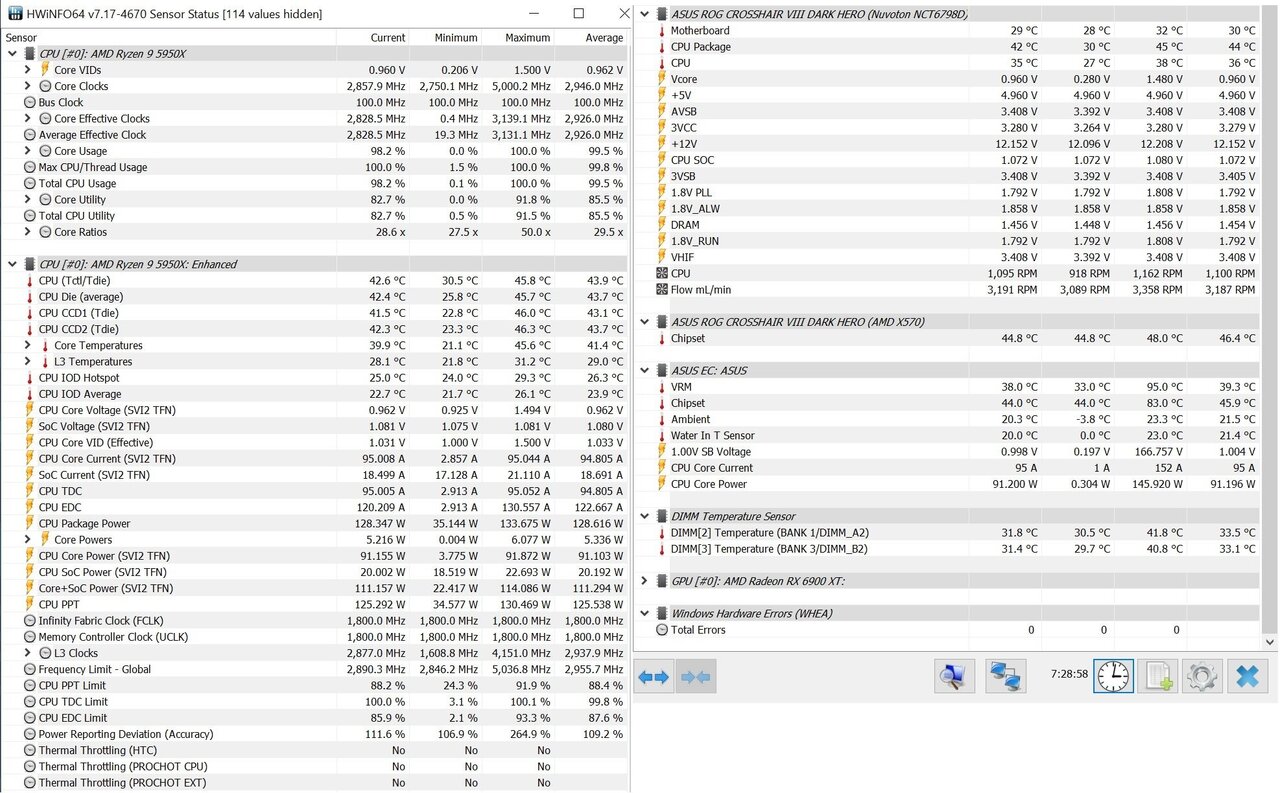

The good: Another 8 hours of prime95 without issue. I did save the .CSV log from HWiNFO64. I'm pretty confident my VRM temperatures peaked at 40 Celsius rather than the 95 indicated in the picture below, since there was only 1 reading above 40 in 27,331 data points. I also think it very possible that my mysterious wonkiness two nights ago was caused by the "Embedded Controller" issue highlighted in my post just prior to this one (rather than my RAM). This controller's inability to play nice with HWiNFO64 at all times could explain some of the erroneous temperature readings I've seen in the past as well.

The bad: Feeling like an idiot after wasting two nights. I read that warning the first time I launched HWiNFO64 and completely ignored it from then on.
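For anyone wanting to run the same sanity check on their own log, here is a minimal sketch of counting the outliers. The column name `VRM [C]` and the sample rows are made up for illustration; the headers in a real HWiNFO64 export depend on the board and sensor layout, so treat this as a starting point rather than something that will read a real log as-is.

```python
import csv
import io

# Hypothetical log excerpt (not real data); replace with the contents of your own export.
SAMPLE_LOG = """Time,VRM [C]
00:00:01,38
00:00:03,39
00:00:05,95
00:00:07,40
00:00:09,39
"""

def summarize_outliers(csv_text, column, threshold):
    """Return (total readings, readings above threshold, max of the remaining readings)."""
    values = [float(row[column]) for row in csv.DictReader(io.StringIO(csv_text))]
    above = [v for v in values if v > threshold]
    at_or_below = [v for v in values if v <= threshold]
    plausible_peak = max(at_or_below) if at_or_below else None
    return len(values), len(above), plausible_peak

total, spikes, peak = summarize_outliers(SAMPLE_LOG, "VRM [C]", 40.0)
print(f"{spikes} of {total} readings exceeded 40 C; peak of the rest: {peak} C")
```

If only a handful of readings out of tens of thousands sit above the threshold, a sensor glitch is a more likely explanation than a real thermal event.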

-

-

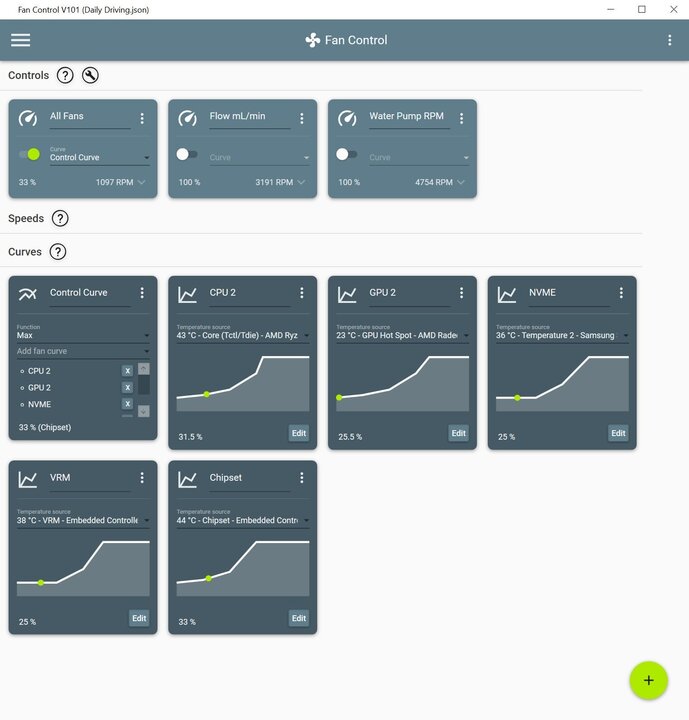

This particular piece of software has exceeded my expectations. The screenshot was taken while prime95 was still active, hence the elevated temperatures on some of the components. All of my fans are governed by the master "control curve" in the upper left of the picture. It uses the maximum PWM duty cycle dictated by the 5 separate fan curves tied to individual temperature readings in the lower portion of the picture (I have each base curve set to 1 degree Celsius of hysteresis with a 2 second response time to smooth changes in RPM out to levels I find acceptable). Currently I do not have the option to create a base curve based on memory temperature, as Fan Control v101 doesn't have the DRAM temperature readings in the list of possible sensors when creating a new curve. I need to find or create a HWiNFO64 "plugin" for FCv101. However, creating one is, for the moment, pretty far outside my comfort zone. Regardless, those with more ambition and/or time could really optimize the settings of their cooling system with FCv101.
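To make the "maximum of several curves" idea concrete, here is a rough sketch of the logic as I understand it. This is not how FCv101 is implemented internally; the curve points, sensor names, and the `HysteresisInput` helper are all hypothetical, and the response time setting is not modeled at all.

```python
def curve_duty(points, temp_c):
    """Linearly interpolate a PWM duty (%) from sorted (temp_c, duty) points."""
    if temp_c <= points[0][0]:
        return points[0][1]
    for (t0, d0), (t1, d1) in zip(points, points[1:]):
        if temp_c <= t1:
            return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)
    return points[-1][1]

class HysteresisInput:
    """Hold the last accepted temperature until a new reading differs by at least band_c."""
    def __init__(self, band_c=1.0):
        self.band_c = band_c
        self.held = None

    def update(self, temp_c):
        if self.held is None or abs(temp_c - self.held) >= self.band_c:
            self.held = temp_c
        return self.held

def master_duty(curves, temps):
    """The master curve takes the highest duty requested by any base curve."""
    return max(curve_duty(curves[name], temps[name]) for name in curves)

# Hypothetical base curves: (temperature in Celsius, PWM duty in percent).
curves = {
    "cpu": [(30, 20), (80, 100)],
    "coolant": [(25, 20), (45, 100)],
}
print(master_duty(curves, {"cpu": 55, "coolant": 30}))  # 60.0, the CPU curve wins

cpu_input = HysteresisInput(band_c=1.0)
print(cpu_input.update(50.0))  # 50.0
print(cpu_input.update(50.4))  # 50.0, change is inside the 1 degree band
print(cpu_input.update(51.0))  # 51.0
```

Taking the maximum means whichever component is closest to its own limit sets the fan speed, so no single sensor can be starved of airflow by the others reading cool.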

-

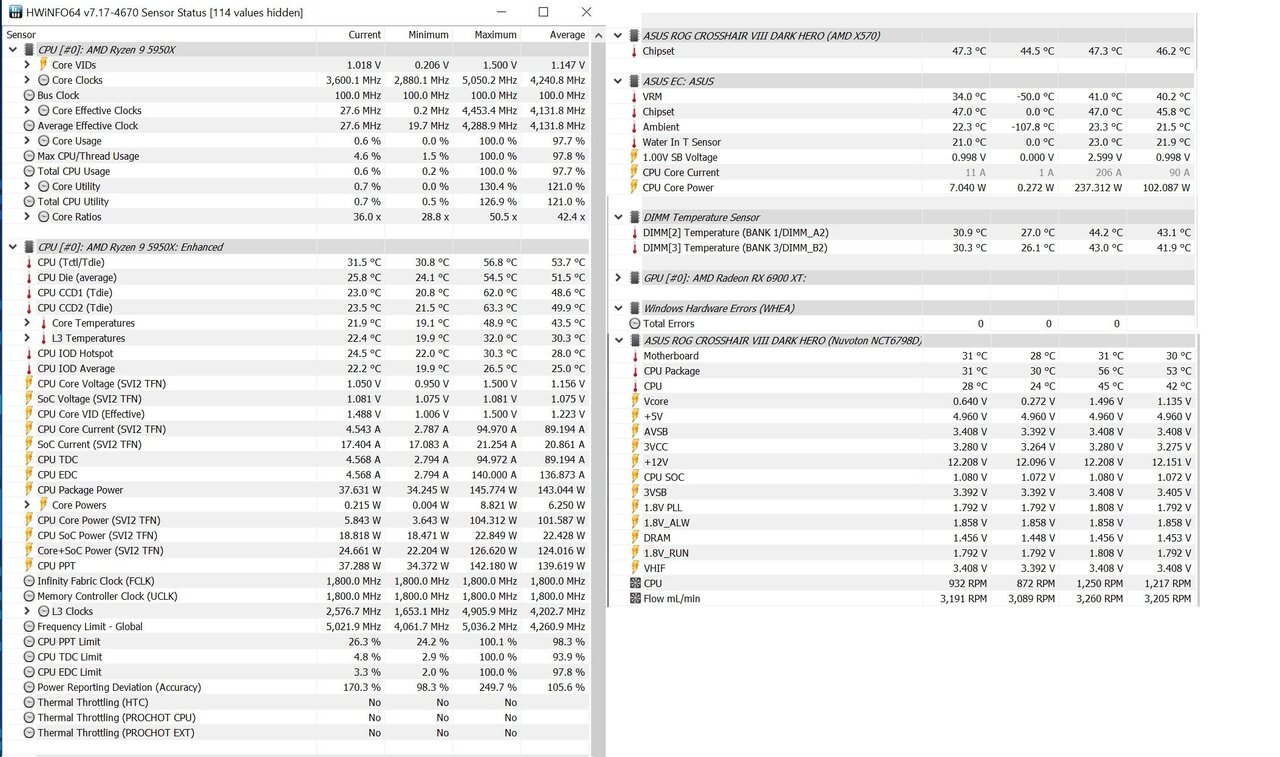

7hrs of prime95 and no errors. Far from conclusive, but certainly encouraging. I don't have much faith in some of the maximum temperature readings being reported by HWiNFO64 (or they are exceedingly fleeting), since if they were present for more than a flickering moment there should be a corresponding increase in recorded fan RPM. I may let the test run again and log both the temps and RPMs just to have a better picture of the duration and frequency of the spikes.
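Once temps are logged alongside RPM, the duration and frequency of the spikes can be pulled out by grouping consecutive above-threshold samples. A minimal sketch follows, assuming the log has already been parsed into (seconds, temperature) pairs; the sample values and the 85 Celsius threshold are invented for illustration.

```python
def spike_runs(samples, threshold):
    """Group consecutive (timestamp_s, value) samples above threshold into (start_s, end_s, peak) runs."""
    runs = []
    current = None
    for t, v in samples:
        if v > threshold:
            if current is None:
                current = [t, t, v]  # start a new run at this sample
            else:
                current[1] = t  # extend the run to this sample
                current[2] = max(current[2], v)
        elif current is not None:
            runs.append(tuple(current))
            current = None
    if current is not None:
        runs.append(tuple(current))
    return runs

# Hypothetical samples taken every 2 seconds.
samples = [(0, 45), (2, 46), (4, 92), (6, 47), (8, 90), (10, 91), (12, 46)]
for start, end, peak in spike_runs(samples, threshold=85):
    print(f"spike from {start}s to {end}s, peak {peak} C")
```

A run whose start and end are the same sample lasted less than one polling interval, which is the "flickering moment" case where the fans would have no time to respond.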

-

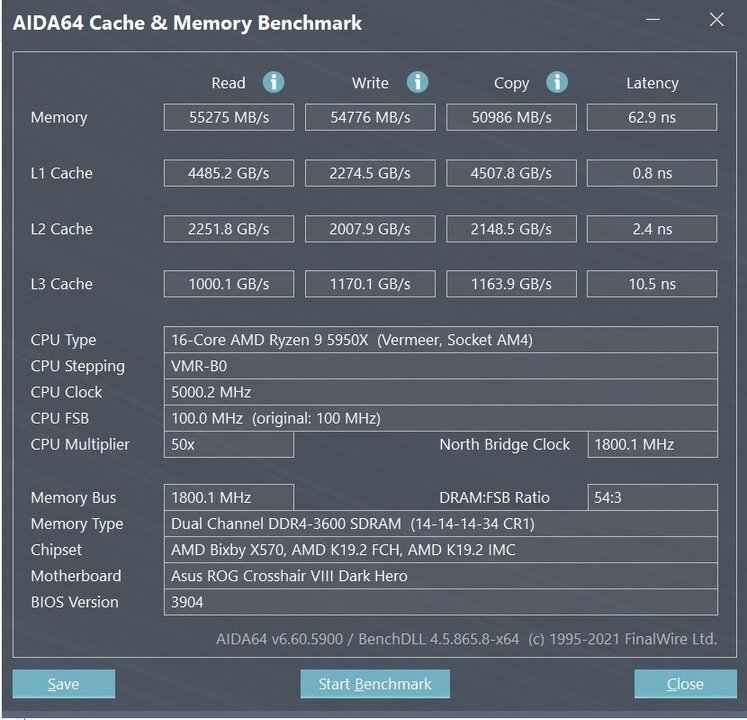

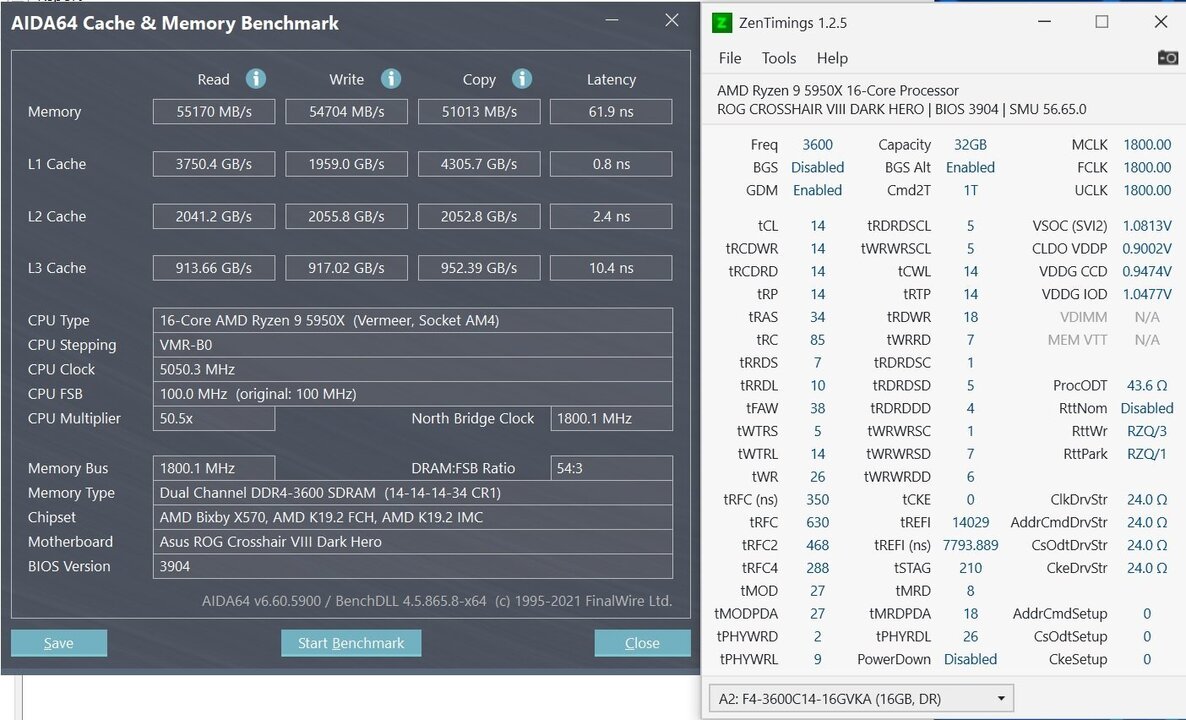

Thank you for the encouraging words. Sub 60ns latency and an fclk of 1900 are hopefully in the cards for me.

-

Being a novice, I won't claim to know better. However, given the monetary investment at risk, I'll pretend, for the time being, that the "secret sauce" anti-corrosion additives Mayhems claims are included in their X1 Eco PC Coolant Concentrate are more than just marketing. I added their product to some deionized water that was also relatively cheap.

-

Some Thermaltake units that I spent the better part of a day cleaning and an XSPC TX360 Ultra Thin up top because it was one of the few that would fit in my desired "envelope". They are probably all "okay" on the bang for buck meter, but nothing to get excited about. All of my noobish purchasing decisions are detailed here: My middle-aged overclocking adventure | Overclock.net

-

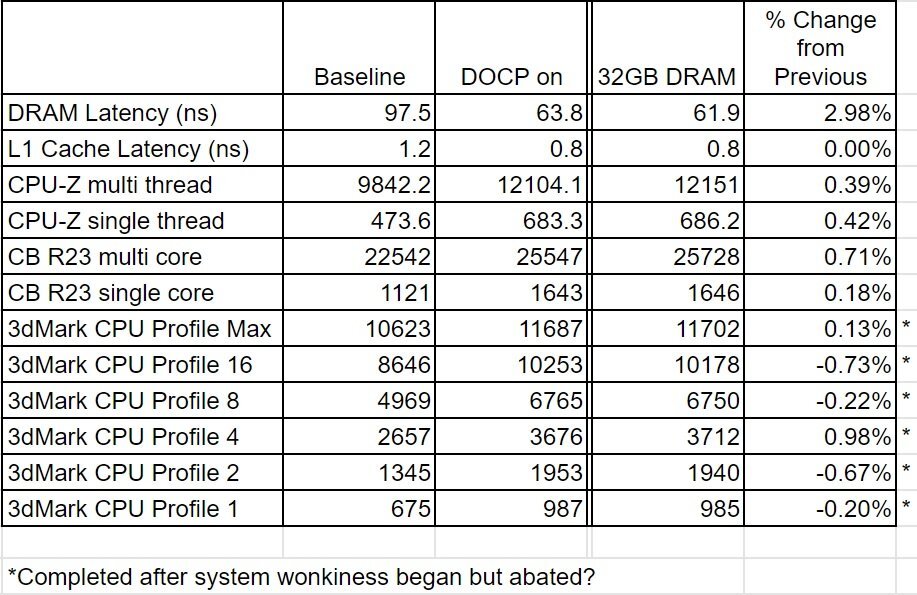

5-run average memory latency in the AIDA64 Cache & Memory benchmark = 62.04ns. So possibly a genuine 2.5 to 3 percent improvement over the 64GB results, but this did not seem to translate into the scores from my stable of other benchmarks.

-

5 hours of AIDA64's seemingly underwhelming "stability test" yielded the following (I used it because it was at hand and ready to run). I think I'll make a few passes of their "cache & memory benchmark" to see if that slight reduction in memory latency might be real, and then maybe run some prime95 small FFT with AVX for some additional assurance that things are as they should be. I do find it interesting to note that my RAM exceeded 40 Celsius with only two modules installed while using my current fan control curve, but it's still running at the "DOCP" specified 1.45v. I plan to see if there are gains to be had with a manual memory OC using 1.40v at some point in the future.

-

They were out of stock across almost the entire board when I was shopping. I got impatient and opted not to wait for them. They seemed to be reviewed the highest, as a brand, of just about everything out there that I found.

-

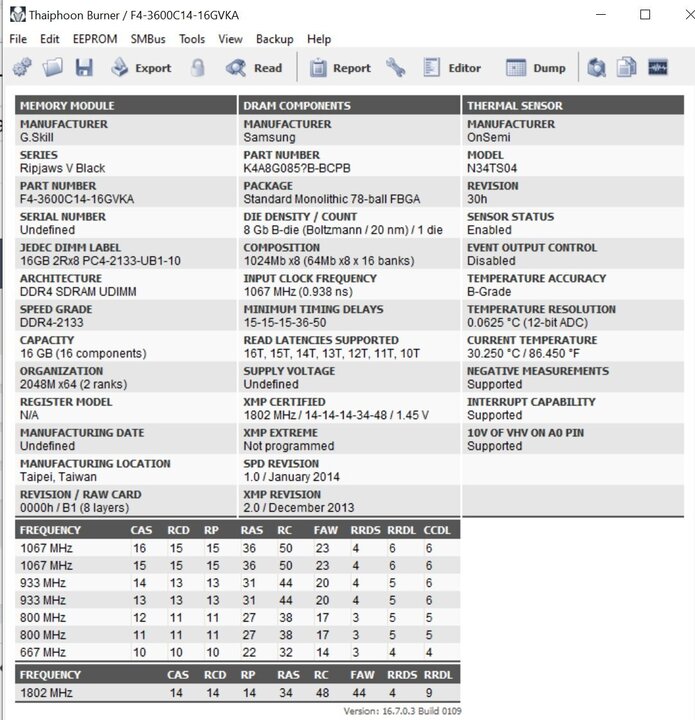

If I understand this correctly, my system is in "quad rank" mode with 2 modules of this RAM installed. 2 ranks per module * 2 modules installed = 4 ranks total.
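The arithmetic above can be sketched as a tiny helper. It assumes a dual-channel board with one module per channel when only two sticks are installed; the function name and parameters are mine, purely for illustration.

```python
def rank_summary(modules_per_channel, ranks_per_module, channels=2):
    """Count memory ranks per channel and in total for identical modules."""
    ranks_per_channel = modules_per_channel * ranks_per_module
    return {"per_channel": ranks_per_channel, "total": ranks_per_channel * channels}

print(rank_summary(modules_per_channel=1, ranks_per_module=2))  # {'per_channel': 2, 'total': 4}
print(rank_summary(modules_per_channel=2, ranks_per_module=2))  # {'per_channel': 4, 'total': 8}
```

So two dual-rank sticks give 4 ranks total (2 per channel), and all four sticks would give 8.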

-

Summary. No real performance changes detected by my test regimen. Likely just some thermal and run to run variance even for the memory latency, I think.

-

-

-

Aye, I have seen both of the linked videos in the past. Both they and further reading elsewhere prompted me to investigate this avenue: 32GB is likely perfectly sufficient for my day-to-day needs, but I also wanted the option to compare against 64GB.

-

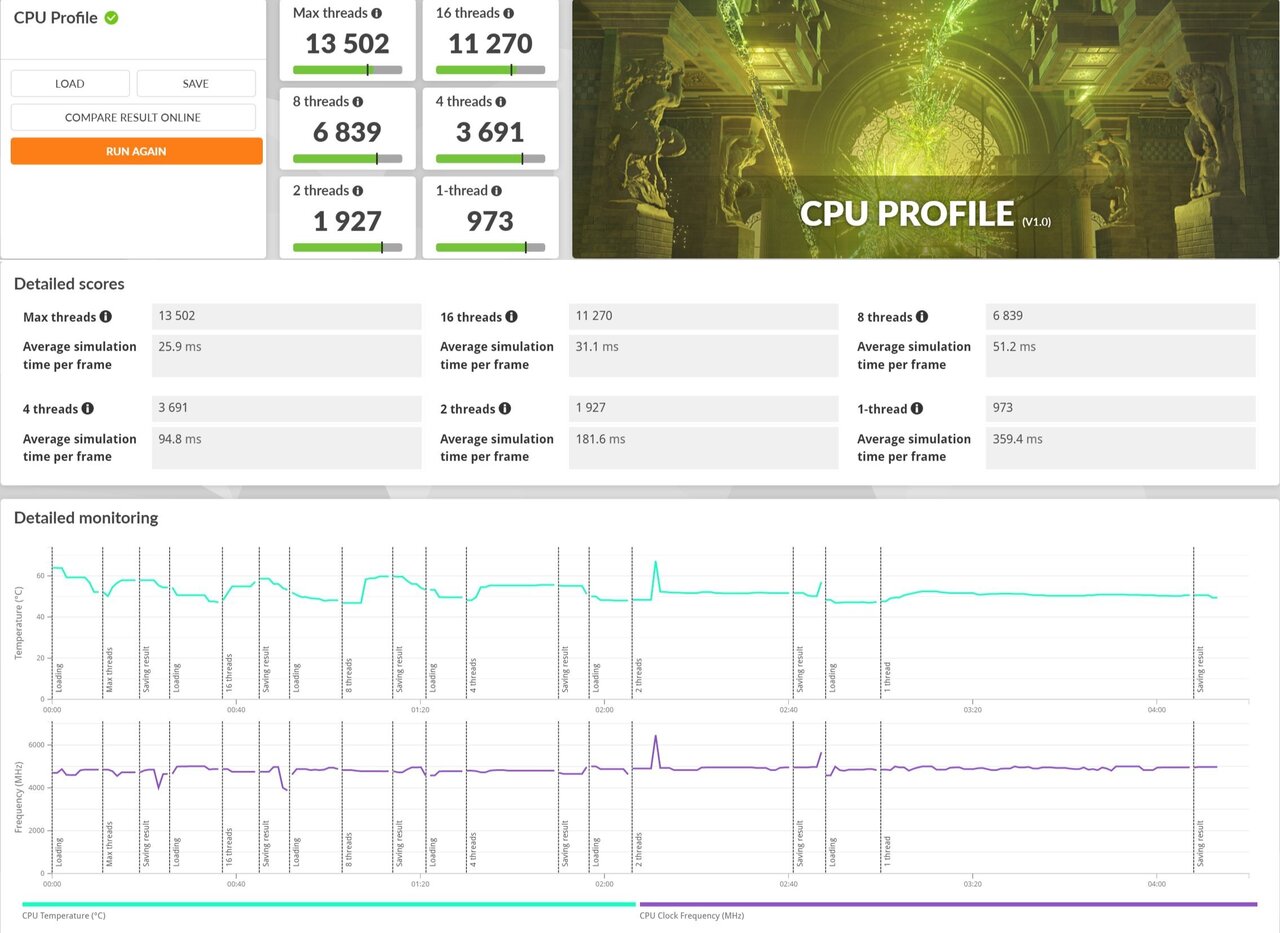

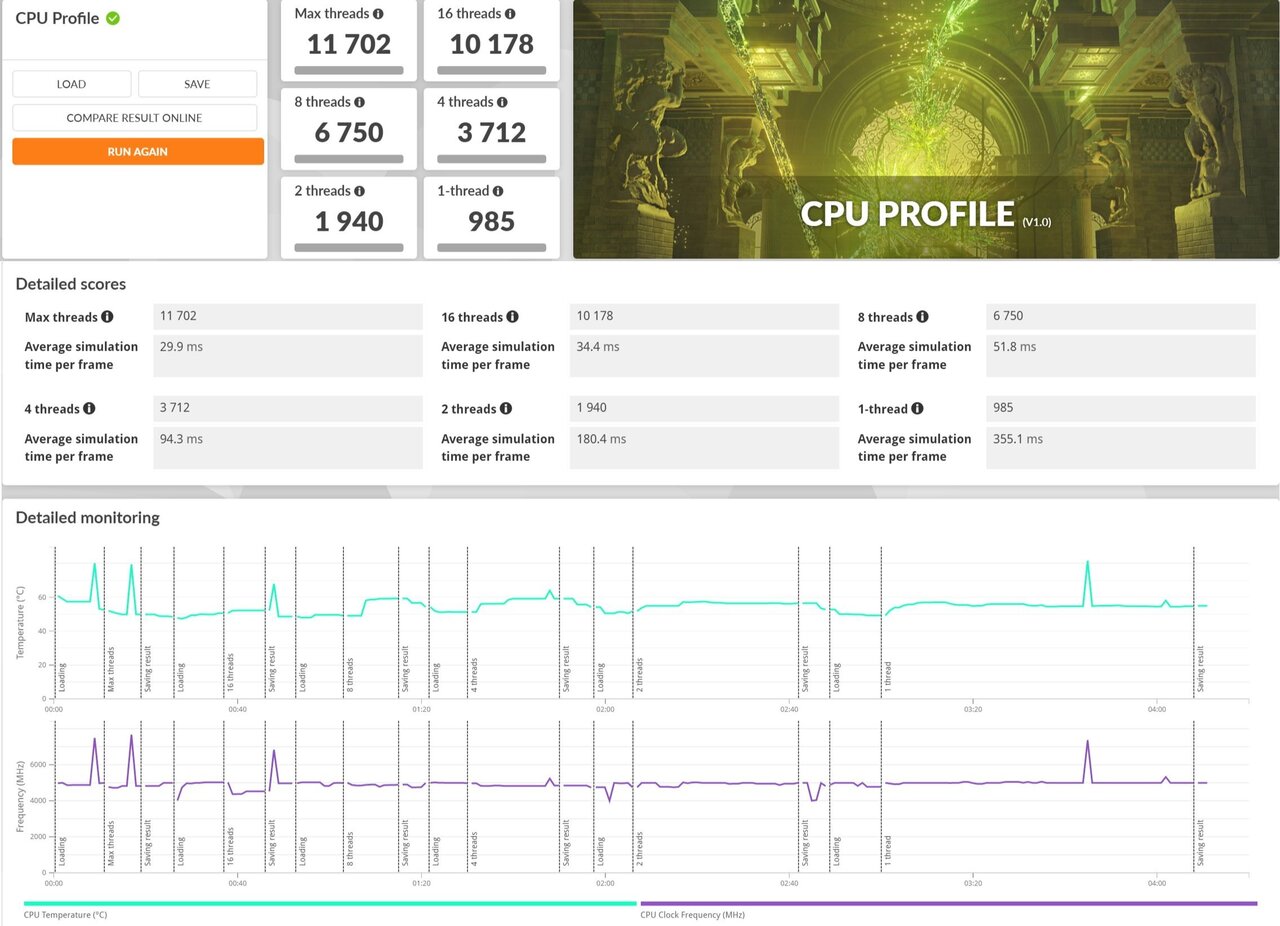

I had planned on further tweaking and testing last night, but I hit a snag. Initially, I was going to rerun all of my original benchmarks with only 2 DIMM slots populated. I wanted to see if asking a little less of the IMC had any effect on the performance at stock settings. Also, I convinced myself that I will have more headroom running only two sticks for all the usual reasons, plus the added benefit of better cooling of the RAM itself (some of my reading yesterday has led me to believe that keeping the RAM at 40 Celsius or lower is likely to pay dividends when trying to push a memory OC).

After removing the innermost stick from each channel (the MB manual indicates populating the 2nd and 4th slots is the preferred configuration when only 2 sticks are used), the system operated normally through all but my last benchmark (3DMark CPU Profile). When the test reached the 4-thread section, performance dropped to comically low levels and stayed there for the duration of the test. In fact, the system remained "gimped" after the test concluded. Laggy in the extreme.

Here I failed to document the situation correctly. I got flustered and focused on resolving the issue without bothering to track what I was doing. I checked Task Manager to see if Windows was up to something in the background (not that I could see). I restarted the system and initially things were normal. I began to rerun the 3DMark bench and this time the system entered "borked" mode during the 8-thread section. I restarted and looked things over in BIOS. I booted into Windows and fired up CB R23. I was blessed with a "mind-blowing" MC score of 3551... I started thinking that maybe I had dislodged a stick of RAM while removing two of them. Three RAM re-seats/restarts/borked benchmarks later, I decided that wasn't the case.

It was "cool and dry" in my laboratory last night, and while I hadn't donned my anti-static wrist strap, I had been careful to ground myself right before touching anything in the system, but maybe not careful enough. I say this because removing the RAM from DIMM B2 and replacing it with one of its fellows seems to have eliminated the issue. I'm not convinced that this stick was defective from the factory, because it had passed at least 4 iterations of memtest86 in this system prior to the creation of my original post. I left the unit running AIDA64's generic "system stability" test overnight and things seemed correct when I left the house for work this morning.

I keep telling myself I'll need to be more "detached" when/if "hiccups" occur in the future. I'll try to stay calm and do a more thorough job of diagnosing/documenting the issue. All the benchmarks that completed correctly before the issue manifested showed at most a marginal improvement that I think could be explained by a temperature variation in the testing area (about 1.5 Celsius cooler than previous). I hope to post those results later today (those that I didn't inadvertently erase in my haste to "fix my baby"). I think I'm likely to run a battery of longer stability tests prior to making any further tweaks. I plan to include OCCT/testmem5, generic prime95, and maybe prime95 through CoreCycler as well. I'm considering adding Geekbench 5 as another general-purpose performance metric. C'est la vie.