Welcome to ExtremeHW

- Posts: 647
- Days Won: 43
- Feedback: 0%

Everything posted by tictoc

-

With the integrated heat sink on the 905p, temps are nothing to worry about as long as you have some airflow. I just wrote 600GB to one of the 905p drives and temps never hit 50°C. Most enterprise-grade U.2 SSDs are in metal enclosures, so with some airflow temps should never be an issue in a desktop case.

-

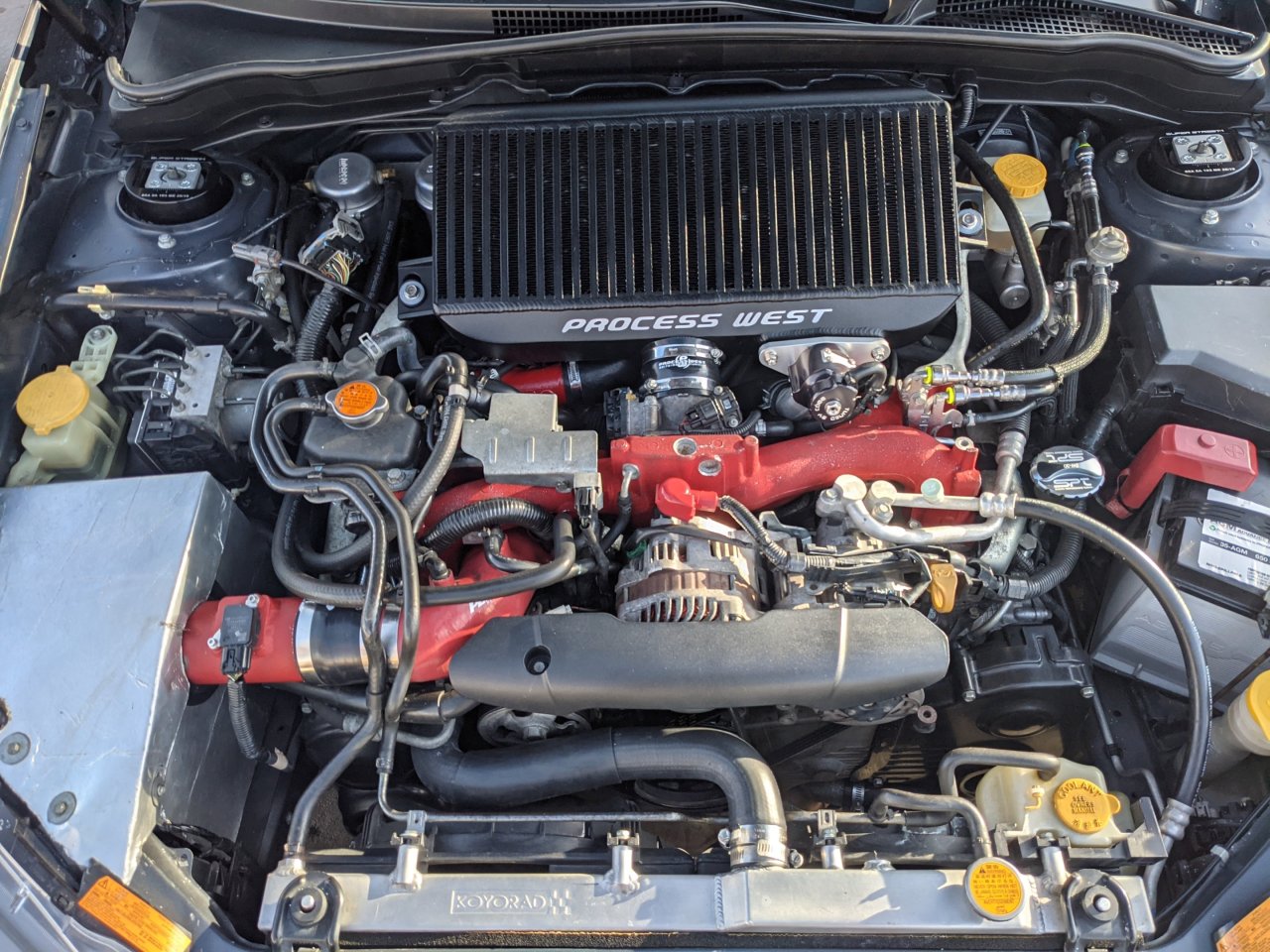

This is my daily so no big power, just rolling the same base that I've had for the last 5 years.

Exhaust: Stock UEL manifold, Grimmspeed up-pipe, TurboXS catted downpipe (v2), 3" mid-pipe, Nameless muffler delete with dual 3.5" tips

Fuel: Cobb Accessport with a pro tune, DW65c fuel pump, ID1050 injectors, Cobb flex fuel

-

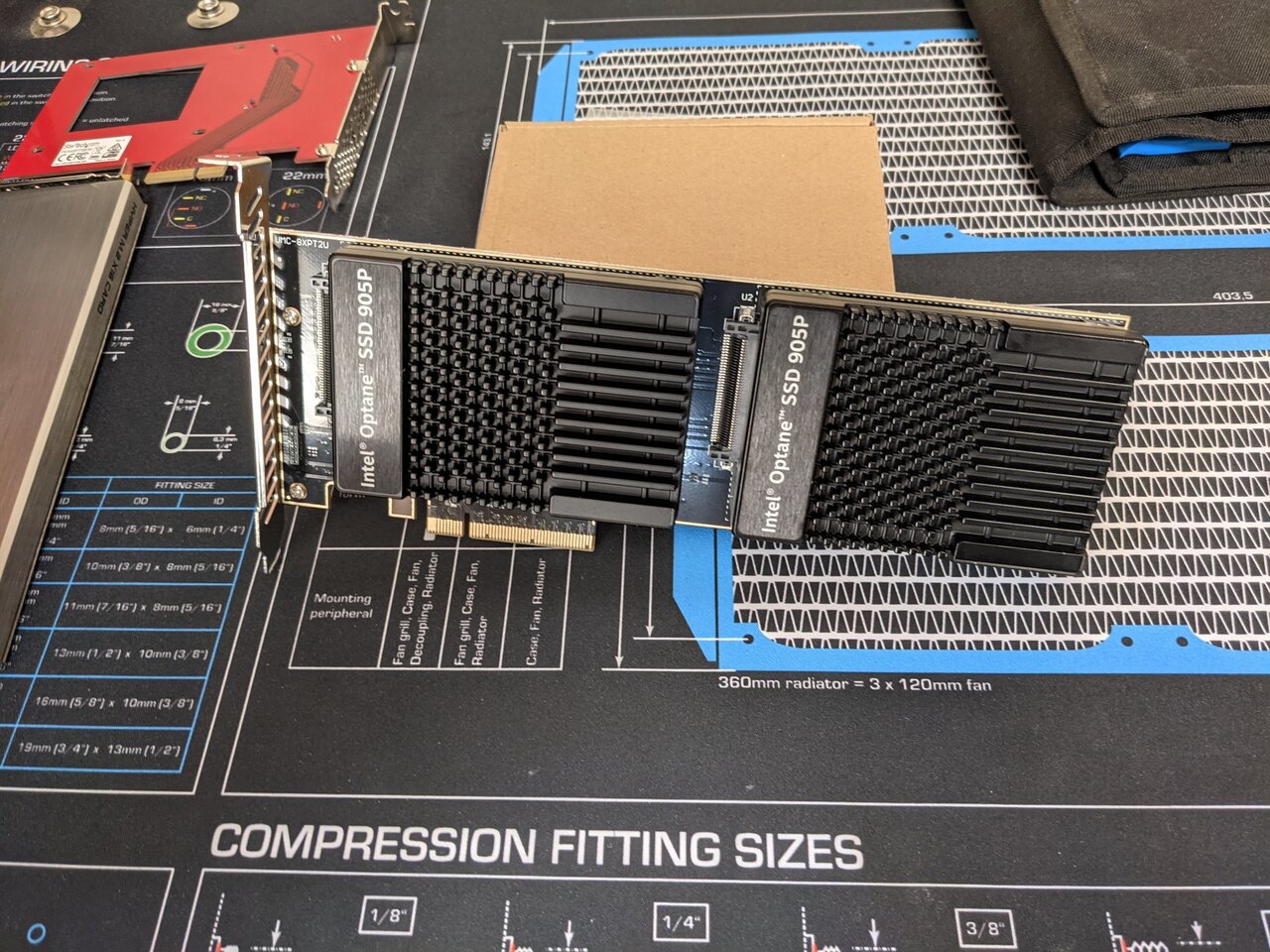

I have a handful of 6.4TB U.2 drives with only a few PB written that I haven't put to use yet. Right now I am running two 1TB Optane 905p SSDs in my server on a dual U.2 to PCIe x8 adapter. There are some other dual adapters that flip the drives, so they are shorter but add some height. As long as your board supports bifurcation, it's easy to add a few more if you have empty PCIe slots.
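To put some numbers on the bifurcation point, here is a minimal sketch. It assumes each bifurcated link hosts exactly one device and that a U.2 NVMe drive wants a x4 link; the function names and layout strings are made up for illustration and do not come from any BIOS or tool.

```python
def parse_bifurcation(layout: str) -> list[int]:
    """Turn a layout string such as "x8 x4 x4" into link widths [8, 4, 4]."""
    return [int(token.lower().lstrip("x")) for token in layout.split()]


def u2_drive_capacity(layout: str, slot_lanes: int = 16, lanes_per_drive: int = 4) -> int:
    """Count how many U.2 drives a bifurcated slot could host.

    Assumption: one drive per link, and a link must be at least
    `lanes_per_drive` wide. An x8 link still hosts only one drive unless it
    is split further or the adapter carries its own PCIe switch.
    """
    widths = parse_bifurcation(layout)
    if sum(widths) != slot_lanes:
        raise ValueError(f"{layout!r} does not add up to {slot_lanes} lanes")
    return sum(1 for width in widths if width >= lanes_per_drive)


if __name__ == "__main__":
    for layout in ("x8 x8", "x8 x4 x4", "x4 x4 x4 x4"):
        print(layout, "->", u2_drive_capacity(layout), "drive(s)")
```

A dual U.2 adapter in an x8 slot is the `slot_lanes=8` case with an "x4 x4" layout, which is why the board has to support splitting the slot.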

-

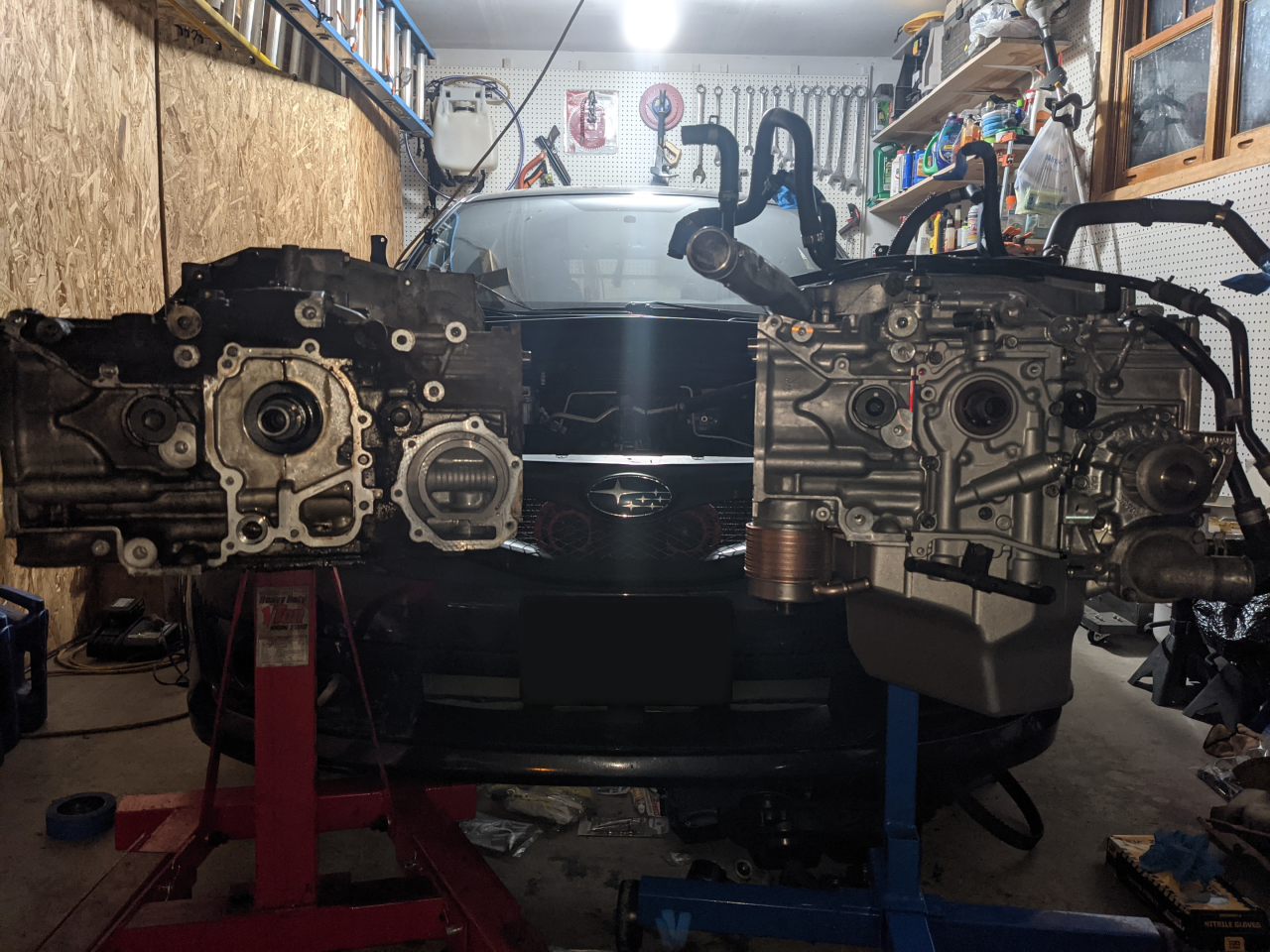

Brand new 2.5L type RA short block and a new IHI VF48 Hi Flow turbo. Most everything else I swapped from the old motor. Other new parts included pretty much anything that touches oil and can't be easily cleaned, so new oil pump, AVCS solenoids, AVCS gears, and oil cooler.

-

-

It is kind of a bright red so... Maybe 10HP.

-

Decided to add 5 HP to the turbo while I'm waiting to get the heads back, which is supposed to happen this week.

-

I think I might get something back up and folding. Maybe the 2080S can return to folding duty. I'll figure it out in the next week or so.

-

Things have been busy, but I had some time over the weekend to get this partially up and running. I'm leaving the PCIe cables just in case this chassis gets repurposed some day. The rest of the cables are stashed under the PSU.

Currently stress testing (mprime, y-cruncher, and stressapptest) on a minimal Arch install. At stock clocks, the CPU boosts to 4050MHz all-core while running mprime blend, with the CPU fan set to "Standard" mode in the BIOS. Looks like there is quite a bit of headroom on the CPU, so I will be OCing the CPU on the overkill router. Power usage is currently sitting at 85W at the wall while running mprime.

Both NICs are detected and all ports are working with the PCIe slot set to x8 x8. Bifurcation options in the BIOS are x8 x8, x8 x4 x4, and x4 x4 x4 x4.

ECC UDIMMs booted right up at 3200MHz, and can be monitored for errors via rasdaemon. Totally unnecessary for a router, but I have a bunch of extra ECC UDIMMs, so into the router they go. No issues at 3200 with JEDEC timings, so it looks like I will also be OC'ing the RAM.

List of things still to complete:

- Fab custom top panel with filtered cut-outs for cooler intake and intake above the NICs
- Replace secondary 8-pin EPS with custom length 4-pin EPS for the bifurcation card
- Fab/install power switch, power LED, and activity LED
- Install and wire up remote power break-out board for PiKVM

Once I finish the rest of the build, it will be on to testing VyOS.
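For anyone who wants a quick look at ECC error counts without going through rasdaemon, here is a rough sketch that reads the kernel's EDAC counters from sysfs. This is not how rasdaemon itself works (it logs events to its own database). It assumes an EDAC driver is loaded and exposes `ce_count` and `ue_count` under `/sys/devices/system/edac/mc/mcN/`. The function name is made up for the example.

```python
from pathlib import Path


def read_edac_counts(edac_root: str = "/sys/devices/system/edac/mc") -> dict:
    """Return corrected/uncorrected ECC error counts per memory controller.

    Assumption: the kernel EDAC driver exposes ce_count and ue_count files
    in each mcN directory. Controllers with missing or unreadable counters
    are skipped rather than treated as errors.
    """
    counts = {}
    for mc_dir in sorted(Path(edac_root).glob("mc[0-9]*")):
        try:
            corrected = int((mc_dir / "ce_count").read_text().strip())
            uncorrected = int((mc_dir / "ue_count").read_text().strip())
        except (OSError, ValueError):
            continue
        counts[mc_dir.name] = {"corrected": corrected, "uncorrected": uncorrected}
    return counts


if __name__ == "__main__":
    results = read_edac_counts()
    if not results:
        print("No EDAC memory controllers found (is an EDAC driver loaded?)")
    for name, c in results.items():
        print(f"{name}: corrected={c['corrected']} uncorrected={c['uncorrected']}")
```

Running this before and after a stress test pass gives a simple delta to watch while pushing RAM clocks. Any non-zero uncorrected count is a reason to back off.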

-

This would be pretty sweet if it was like first gen Ryzen. I jumped on the 1700, threw it under water, OC'd it, and then it magically turned itself into an 1800X :)

-

Added 44 threads of my 3960X to the mix, and that looks to be good for about 1M ppd. I forgot how little power AMD GPUs use for F@H compared to other compute work where they are actually being pushed hard.

Currently running F@H on the following:

- 6900XT @ 2800core/1075mem
- 2x Radeon VII @ 2080core/1200mem
- 3960X (44 threads) @ 4200MHz

Total system power load (as reported by the UPS) = 1140W

-

Current state of affairs on the STi. The mess on the old block was from a power steering pump leak that started about a year ago and that I never fixed. IHI VF48 Hi Flow ported/polished, with billet wheel and ceramic coated hot side. I'm just waiting to get my decked and rebuilt heads back from the shop, and then I'll be able to put the top end back together and get her back on the road.

-

Sorry I couldn't make the time to get some subs in. You guys all did pretty great, and made a great showing for the first time competing in a team wide comp.

-

-

I do have an AM3+ setup that I could throw on the bench. It will be a few days, but I should be able to get something up in the next week or so.

-

I might join in. I'll take a look through the thread and see what we need to run. Off the top of my head, I think I could do Quad 7970s or Dual on some wickedly high clocking 290s. I'll mess around with a stripped down Windows 7 install tonight, and then see what I can get up and running over the next week.

-

EVGA Exiting GPU Market, Citing Abusive Treatment by NVIDIA as Reason

tictoc replied to Mr. Fox's topic in Hardware News

Sorry for the double. I can't possibly agree with this more. Nickel coating inevitably flakes and corrodes, plexi cracks, and I couldn't care less about RGB. The biggest enemies of long term uptime on loops are corrosion and growth. Copper/acetal blocks (which look the best anyhow), coupled with EPDM tubing and some biocide and inhibitor, will allow you to have loops running 24/7 for more than a year with the only maintenance being topping off the res. If you don't care about bling, then you can pay less and end up with a more reliable loop.

-

EVGA Exiting GPU Market, Citing Abusive Treatment by NVIDIA as Reason

tictoc replied to Mr. Fox's topic in Hardware News

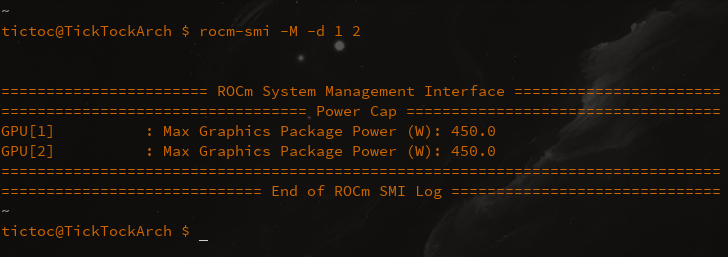

The last twenty minutes of that video is basically what pushed me away from NVIDIA. That, coupled with NVIDIA's disregard for open source GPU drivers and, more importantly, open source user-space, has made it easy for me to mostly move away from NVIDIA for my personal machines and projects (I still have a number of NVIDIA GPUs running). With AMD I can easily have things like this on my Radeon VIIs (see the attached image).

As far as the overall topic is concerned, I hope to see EVGA make a return to manufacturing a high quantity of top-of-the-line PSUs like the SuperNOVA G2 1300. I still have two of these running, and they have been going more or less 24/7 at 90+% capacity for nine years (still one year left on the warranty).

-

I don't disagree with that. I had the 400 out of my '78 Bronco in about 30 minutes. I am about 25/75, flat-four:V8, over the last twenty years. All the V8s except one have been pre-1980s, and they are a breeze to work on. I also must tinker with everything, so having AccessTUNER Race to dial in the Subies is pretty great.

-

While it's a bit more involved than pulling a small block out of a pre-80s vehicle, it's actually pretty easy to pull the motor on these cars. I've pulled at least 10 EJs, and the way the motor is designed, nearly everything can stay attached and the whole thing comes out. It is pretty much as simple as:

- Remove hood
- Disconnect and remove battery
- Remove intercooler
- Disconnect fuel lines
- Disconnect and pull radiator
- Remove A/C and PS belts
- Disconnect down pipe from turbo
- Disconnect main harness
- Disconnect misc. other wiring harnesses
- Unbolt pitch-stop
- Disengage clutch
- Unbolt A/C compressor and PS pump and flip them out of the way
- Remove the 6 bellhousing to block bolts
- Remove 2 engine mount nuts

Personally, without a lift, I think it is faster and easier to yank the motor when replacing the clutch, since there is probably some other maintenance to be done that will be easier with the motor out, like plugs, timing belt, water pump, etc. This motor has been out of the car twice: once for a new clutch, and once to do the head gaskets. All in, it's 1-1/2 to 2 hours to pull the motor.

-

I posted a big guide in a spoiler, and it got eaten by the forum. I'm following this thread now, so hit me up if you have any Linux questions.

I'm not super familiar with Unraid (my VM hosts are running Debian or Arch), but I would probably go the container or VM route with folding on Unraid. For last month's Folding Comp I ran F@H on my GPUs via podman with a slightly modified Docker container from the official F@H containers.

https://github.com/FoldingAtHome/containers

I was running on AMD GPUs, but the NVIDIA container is fairly similar. It should be pretty easy to set up if you have any interest in running containers and want to isolate the different GPUs in their own environment.

There is a thread in the Unraid forum, but it appears to be dead now.

https://github.com/FoldingAtHome/containers

I haven't looked at the Unraid community container, so I'm not sure why it isn't working. The official GPU container from F@H worked fine for me.
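As a rough illustration of the "one container per GPU" idea, here is a sketch that only builds a podman command line and does not run anything. It is not the exact setup from the post. The image name, container name, data directory, and mount point are all placeholders. Passing `/dev/kfd` plus a single `/dev/dri/renderD*` node is the usual way to expose one AMD GPU to a container, but treat that as an assumption to check against your own system.

```python
import shlex


def podman_fah_command(name: str, render_node: str, image: str,
                       data_dir: str, container_dir: str = "/fah") -> list[str]:
    """Build a `podman run` argv that exposes a single AMD GPU to a container.

    Every argument is a placeholder chosen for illustration:
      name          - container name, e.g. one per GPU
      render_node   - the /dev/dri/renderD* node of the GPU to expose
      image         - your own (possibly modified) F@H GPU image
      data_dir      - host directory for that container's work data
      container_dir - mount point inside the container (assumed, not confirmed)
    """
    return [
        "podman", "run", "--detach",
        "--name", name,
        "--device", "/dev/kfd",      # compute interface shared by AMD GPUs
        "--device", render_node,     # only this GPU's render node is passed in
        "--volume", f"{data_dir}:{container_dir}",
        image,
    ]


if __name__ == "__main__":
    # Hypothetical two-GPU host: one container per render node.
    for index, node in enumerate(["/dev/dri/renderD128", "/dev/dri/renderD129"]):
        cmd = podman_fah_command(
            name=f"fah-gpu{index}",
            render_node=node,
            image="example.invalid/fah-gpu-amd:custom",  # placeholder image name
            data_dir=f"/srv/fah/gpu{index}",
        )
        print(shlex.join(cmd))
```

Printing the command first makes it easy to eyeball the device mapping before anything is actually started.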

-

The indestructible STi has traveled its last mile. Rod went a knocking yesterday. I have a fresh shortblock to put in it, so I might post some build pics, and some pics of whatever carnage there is once I pull and disassemble the dead motor.

-

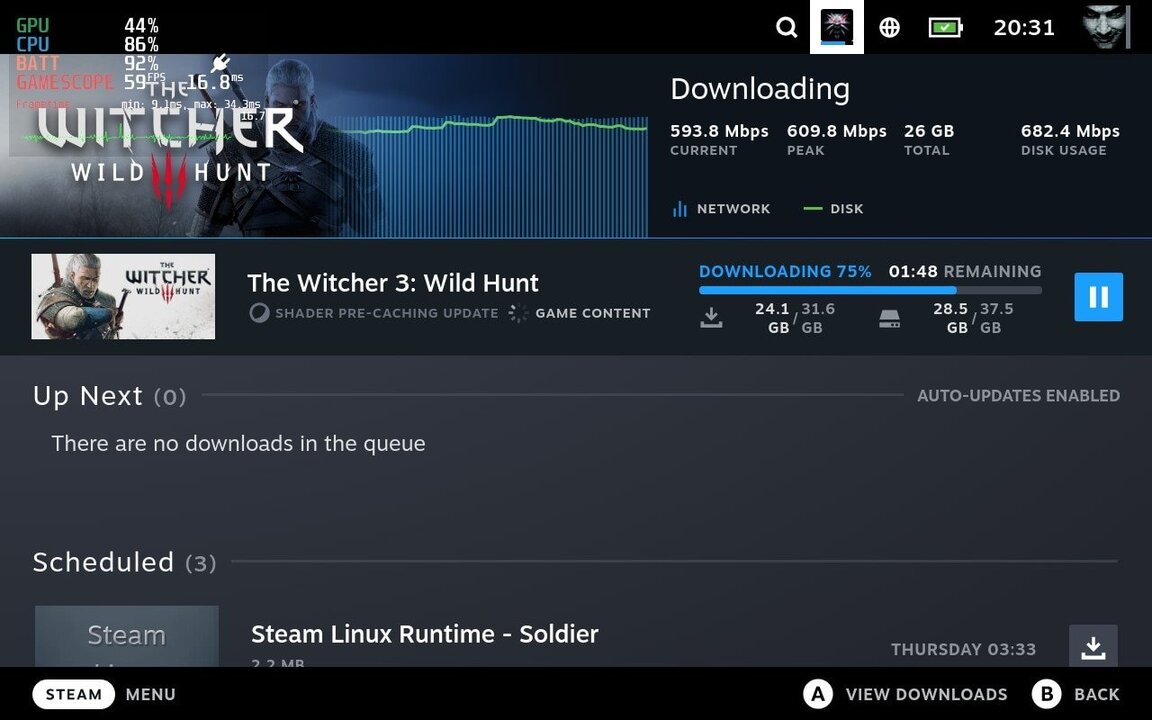

Docking station for the Deck. Downloading from my SteamCache at about 600 Mb/s is a workout for that little CPU. Load is roughly 90% and temps are at 90°C. Not sure where the bottleneck is, but 600 Mb/s beats the heck out of my 40 Mb/s internet service.
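To put the two link speeds in perspective, here is a quick back-of-the-envelope sketch. It reads "Mb/s" as megabits per second and uses a 50 GB download as a made-up example size; neither the size nor the function names come from the post.

```python
def megabits_to_megabytes_per_s(mbps: float) -> float:
    """Convert a link speed in megabits per second to megabytes per second."""
    return mbps / 8


def download_minutes(size_gb: float, link_mbps: float) -> float:
    """Estimate download time in minutes for `size_gb` gigabytes.

    Uses decimal units (1 GB = 8000 megabits) and ignores protocol overhead.
    """
    return size_gb * 8000 / link_mbps / 60


if __name__ == "__main__":
    size_gb = 50  # hypothetical game size
    for label, speed in (("SteamCache", 600), ("internet", 40)):
        print(f"{label}: {megabits_to_megabytes_per_s(speed):.0f} MB/s, "
              f"{download_minutes(size_gb, speed):.0f} min for {size_gb} GB")
```

With those assumptions the cache works out to roughly 11 minutes against nearly three hours over the internet link, a 15x difference.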