


Everything posted by tictoc

-

The board you linked (X11DAi-N) is socket P0 for 1st and 2nd gen Xeon Scalable (SP) CPUs (see the attached x11dai-n_quickRef.pdf). This is the only ATX form factor Supermicro board that I am aware of that supports Knights Landing: https://www.supermicro.com/products/motherboard/Xeon_Phi/K1SPE.cfm There might be others, but I've never seen a working one in the wild other than the boards from the Supermicro and ASRock Rack developer workstations. I think Intel originally planned on Knights Landing/Mill being compatible with all LGA3647 boards, but scrapped that plan when they EOL'd the Xeon Phi. I am pretty sure (but not positive) that you need a Xeon Phi specific board.

-

If you really want to put it through its paces and fully utilize the CPUs, I would add additional CPU slots. F@H works with threads rather than cores, so if you wanted to max out the system I would probably use three CPU folding slots: two slots at 20 threads and one at 26 (assuming you have a pair of 18-core/36-thread CPUs in that machine). That adds up to 66 of the 72 threads and leaves three full cores free, two to feed the GPUs and one for additional system overhead. The latest F@H CPU core uses AVX, so expect a very heavy system load.
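A quick way to sanity-check a slot split like this is to do the thread arithmetic. This is a minimal sketch assuming two 18-core CPUs with Hyper-Threading (2 threads per core), as in the post; `leftover` is a hypothetical helper, not part of the F@H client.

```python
def leftover(sockets, cores_per_socket, threads_per_core, slot_threads):
    """Return (free_threads, free_cores) after assigning the CPU folding slots."""
    total_threads = sockets * cores_per_socket * threads_per_core
    used = sum(slot_threads)
    if used > total_threads:
        raise ValueError("slots request more threads than the system has")
    free_threads = total_threads - used
    # Integer division counts how many whole physical cores the free threads cover.
    return free_threads, free_threads // threads_per_core

# Two 18-core / 36-thread CPUs, slots of 20, 20 and 26 threads.
free_threads, free_cores = leftover(2, 18, 2, [20, 20, 26])
print(free_threads, free_cores)  # 6 3
```

Changing the slot list lets you check other splits before editing the client config.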

-

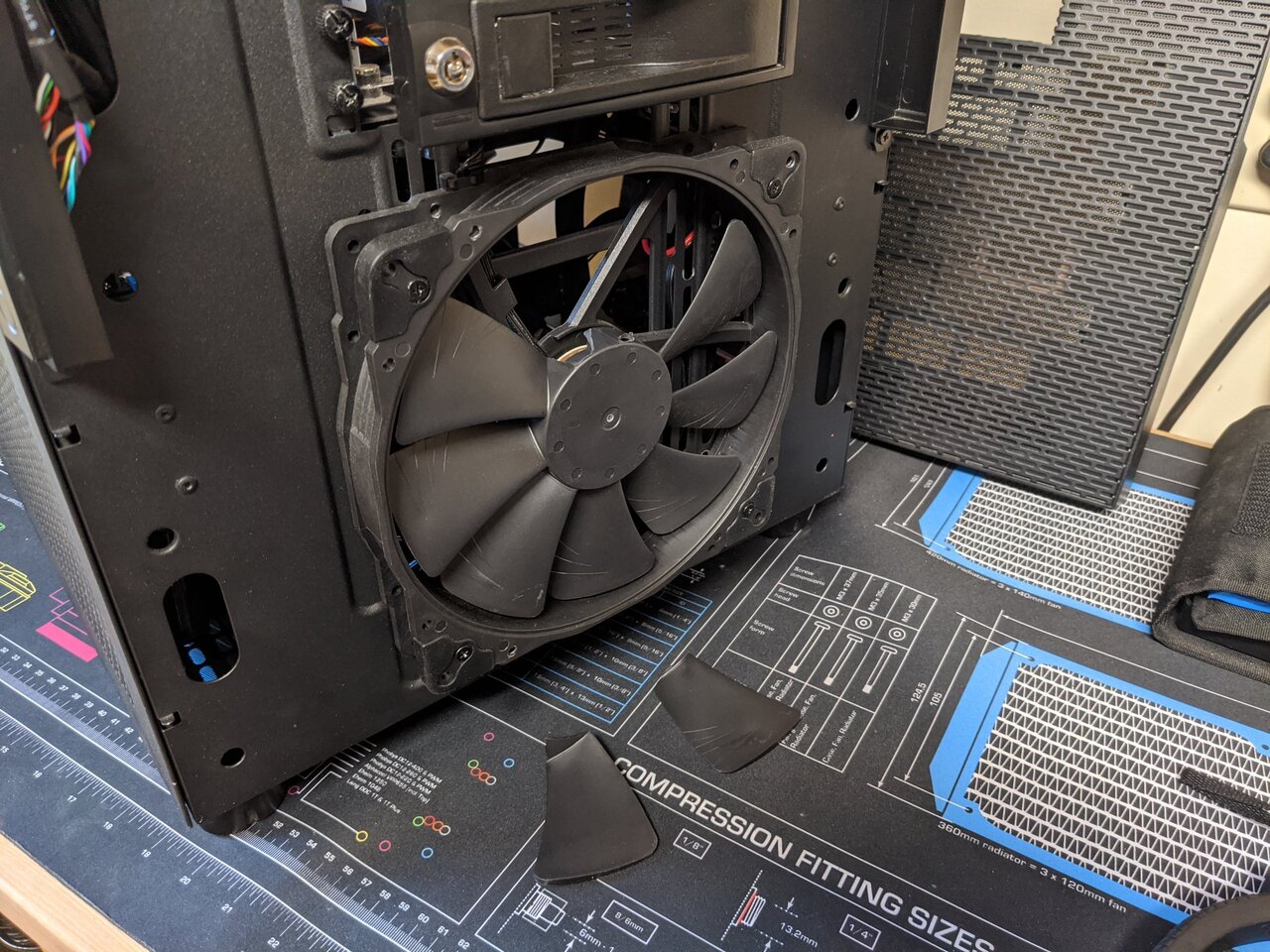

New front intake fan installed, and it moves a bunch more air than the Noctua. Only two SATA SSDs left to mount, but I will need to make one more extension for the SATA power for those drives.

-

That is correct. Two single rank DIMMs per channel are effectively equal to one dual rank DIMM per channel. With dual rank DIMMs you would effectively be running quad rank per channel if you populate all 8 slots, but generally speaking, depending on the workload, you will see a bump in performance. I never really tuned my 2P v3 system because I moved over to Threadripper shortly after acquiring it. The best performance on my Xeon v2 system was with dual rank DIMMs, but only 4 DIMMs installed per socket. My ASRock Rack board allowed me to bump the memory speed over the default for my sticks, so I was able to run 1333MHz sticks at 1866MHz. The board wasn't stable at 1866MHz with all 16 slots populated. Running in quad channel with 4 DIMMs per socket at the higher speed was the most performant setup, and I only moved away from that when I started to need more than 64GB of memory.
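The rank rule of thumb and the memory overclock can both be put into simple arithmetic. This sketch assumes the usual theoretical peak formula for DDR memory (channels × 8 bytes per transfer × MT/s) and treats the "1333MHz"/"1866MHz" ratings as MT/s; real-world throughput will be lower.

```python
def ranks_per_channel(dimms_per_channel, ranks_per_dimm):
    """Total ranks the memory controller sees on one channel."""
    return dimms_per_channel * ranks_per_dimm

def peak_bandwidth_gbs(channels, mts):
    """Theoretical peak in GB/s: each 64-bit channel moves 8 bytes per transfer."""
    return channels * 8 * mts / 1000

# Two single rank DIMMs per channel look like one dual rank DIMM per channel.
print(ranks_per_channel(2, 1), ranks_per_channel(1, 2))  # 2 2

# Quad channel per socket at the rated vs. overclocked speed.
print(peak_bandwidth_gbs(4, 1333))  # 42.656
print(peak_bandwidth_gbs(4, 1866))  # 59.712
```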

-

The fan was spinning at 800 RPM when "someone" decided to put the front cover on the case, and might have inserted one of the cover standoffs directly into the path of the fan blades. All the jet engines are going out to pasture, and I will basically end up with nearly the same overall performance and sweet whisper silence.

-

Octal rank is different from octal channel. Octal rank refers to the number of ranks per DIMM. LRDIMMs generally come in quad rank and octal rank, which allows increased capacity per DIMM, but that extra capacity comes at the cost of performance. As for whether or not it's octal channel, the platform itself (2 quad channel CPUs) technically has 8 channels, but each CPU can only address 4 channels locally. While the QPI is a fast path (8GT/s on Xeon v3), it's nowhere near as fast as local memory access. Generally speaking, taking the performance hit is worth it due to the doubling of addressable memory.

Just saw your reply and that is all correct. Specifically for your system, the setup is good. The memory you have is single rank, so if you wanted to increase performance, you could look at getting some dual rank DIMMs, which would bump up the memory throughput. For maximum performance, anything above dual rank will generally be slower, and is only worth it if you actually need the additional capacity.
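To put rough numbers on QPI being much slower than local memory, the sketch below compares one QPI link with one socket's local memory. It assumes QPI carries 2 bytes of payload per transfer in each direction (so 8 GT/s is about 16 GB/s per link, per direction) and assumes DDR4-2133 in four channels on the local side; both are theoretical peaks, and the memory speed is an illustrative assumption rather than the asker's actual configuration.

```python
def qpi_gbs_per_direction(gts, bytes_per_transfer=2):
    # Assumption: 16 data bits (2 bytes) per transfer in each direction, per link.
    return gts * bytes_per_transfer

def local_memory_gbs(channels, mts):
    # Theoretical peak: 8 bytes per transfer per 64-bit channel.
    return channels * 8 * mts / 1000

qpi = qpi_gbs_per_direction(8)       # one link at 8 GT/s
local = local_memory_gbs(4, 2133)    # hypothetical DDR4-2133, quad channel
print(qpi, round(local, 1), round(local / qpi, 1))  # 16 68.3 4.3
```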

-

Actually it's still quad channel. Only Epyc, Threadripper Pro, and newer Xeon Gold/Platinum CPUs have 8-channel memory controllers. Anything that crosses the QPI to a far NUMA node is going to suffer a hit in latency and performance. No idea how AIDA works, but in my experience Windows tends to be less NUMA aware, so if the OS scheduler is not keeping processes on the node that holds their memory, there will be a drop in performance.
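On Linux you can keep a process on one NUMA node yourself instead of relying on the scheduler; `numactl --cpunodebind=0 --membind=0 <command>` is the usual tool. The Python sketch below covers only the CPU half: it reads a node's CPU list from sysfs (`/sys/devices/system/node/nodeN/cpulist`) and pins the current process with `os.sched_setaffinity`. Memory is not explicitly bound; it relies on Linux's default local allocation policy, under which pages first touched after pinning generally come from the local node. It is Linux-only, and `parse_cpulist`/`pin_to_node` are hypothetical helper names.

```python
import os

def parse_cpulist(text):
    """Expand a sysfs cpulist such as '0-17,36-53' into a set of CPU ids."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return cpus

def pin_to_node(node):
    """Pin the calling process to the CPUs of one NUMA node (Linux only)."""
    path = f"/sys/devices/system/node/node{node}/cpulist"
    with open(path) as f:
        cpus = parse_cpulist(f.read())
    os.sched_setaffinity(0, cpus)  # pid 0 means the calling process
    return cpus
```

Calling `pin_to_node(0)` at the start of a benchmark script keeps its threads on socket 0, so a local vs. remote comparison is not muddied by the scheduler moving it around.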

-

After getting the 10 HDDs mounted, I decided to do some temperature testing to set the fan curve for the case fans. Had a derp moment while putting the front cover back on, and now I'll be installing a new front intake fan. It might actually be a good thing, since I ordered a Silverstone AP183, which should push quite a bit more air than the Noctua. I'll be cutting out all the unnecessary mounting points behind the fan, which should help to increase airflow and reduce noise.

-

Since you are running it on an open-top bench, the easiest thing to do would be to rig up a fan or two directly above the GPUs. This will force air down between the cards and add some fresh air for the intake of the top card. I've had lots of open bench machines running all out 24/7, and increasing the airflow directly to the top GPU will probably knock the temps down 10°C or so.

-

I picked up one of these chairs on a sale at Office Depot. https://www.officedepot.com/a/products/510830/WorkPro-Quantum-9000-Series-Ergonomic-MeshMesh/ Excellent chair for the price. After 5 years of daily 6+ hour use, it is still in like-new condition, with only some small cracking of the foam/rubber armrests. Pre-COVID I think I paid something like $325, but even at $450 I would still buy it again.

-

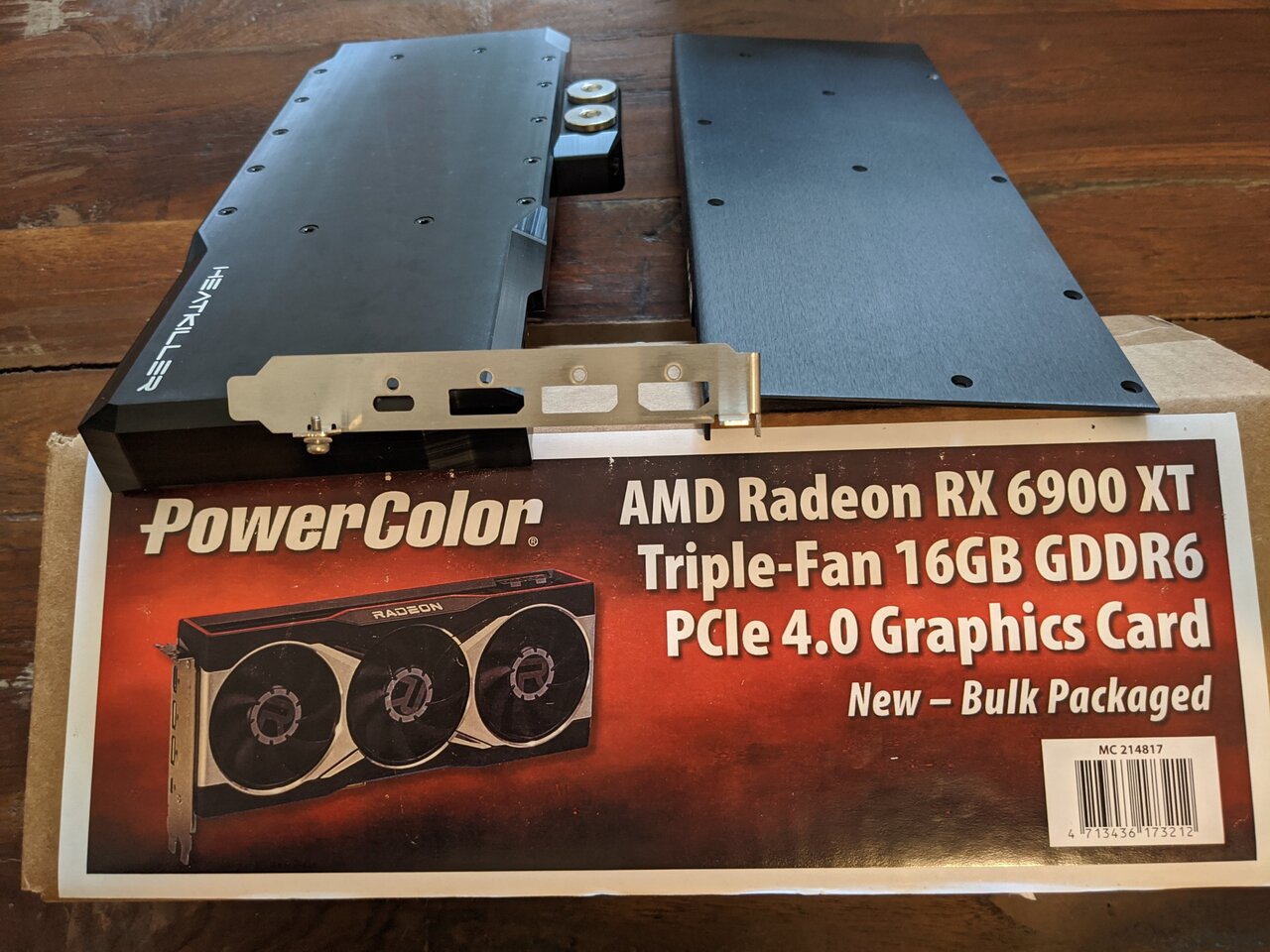

Great minds think alike. Yesterday I went to look at a 2.5RS for a parts car. No dice on the car, since the tranny was shot and the motor had a bunch of top end and bottom end racket. The local MicroCenter is more than an hour from my house, so I decided to stop in and browse around for a bit while I was in the area. I've had the block and backplate since they were released, and I was just waiting for a reference card at something close to MSRP. Yep, a reference 6900 XT, and props to PowerColor for the minimal packaging. Last thing I need is another giant GPU box.

-

I missed this when you posted it. Big congrats on hitting 10B.

-

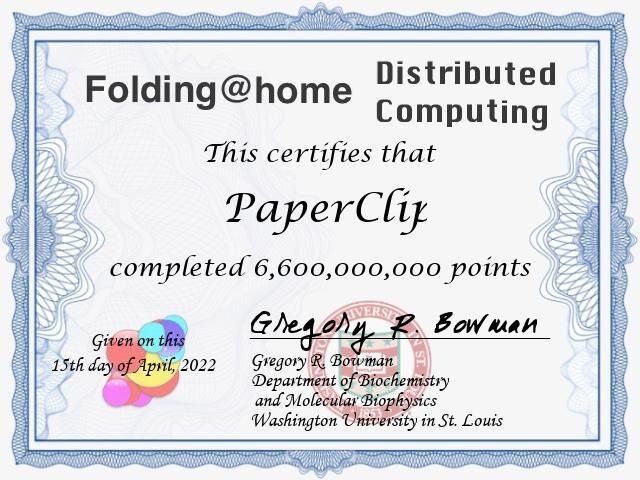

Here's your points certificate. To get the cert for points rather than WUs, just delete the "&type=wus" from the end of the "My Award" button's download link.
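If you would rather script that edit, Python's standard library can drop the `type` query parameter. The URL below is a made-up placeholder, not the real F@H certificate link, and the sketch assumes `type=wus` is an ordinary query-string parameter.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

def points_cert_url(award_url):
    """Remove the type=wus parameter so the certificate shows points instead of WUs."""
    parts = urlsplit(award_url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if not (k == "type" and v == "wus")]
    return urlunsplit(parts._replace(query=urlencode(query)))

# Hypothetical example URL
print(points_cert_url("https://example.org/award?user=someone&team=123&type=wus"))
# https://example.org/award?user=someone&team=123
```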

-

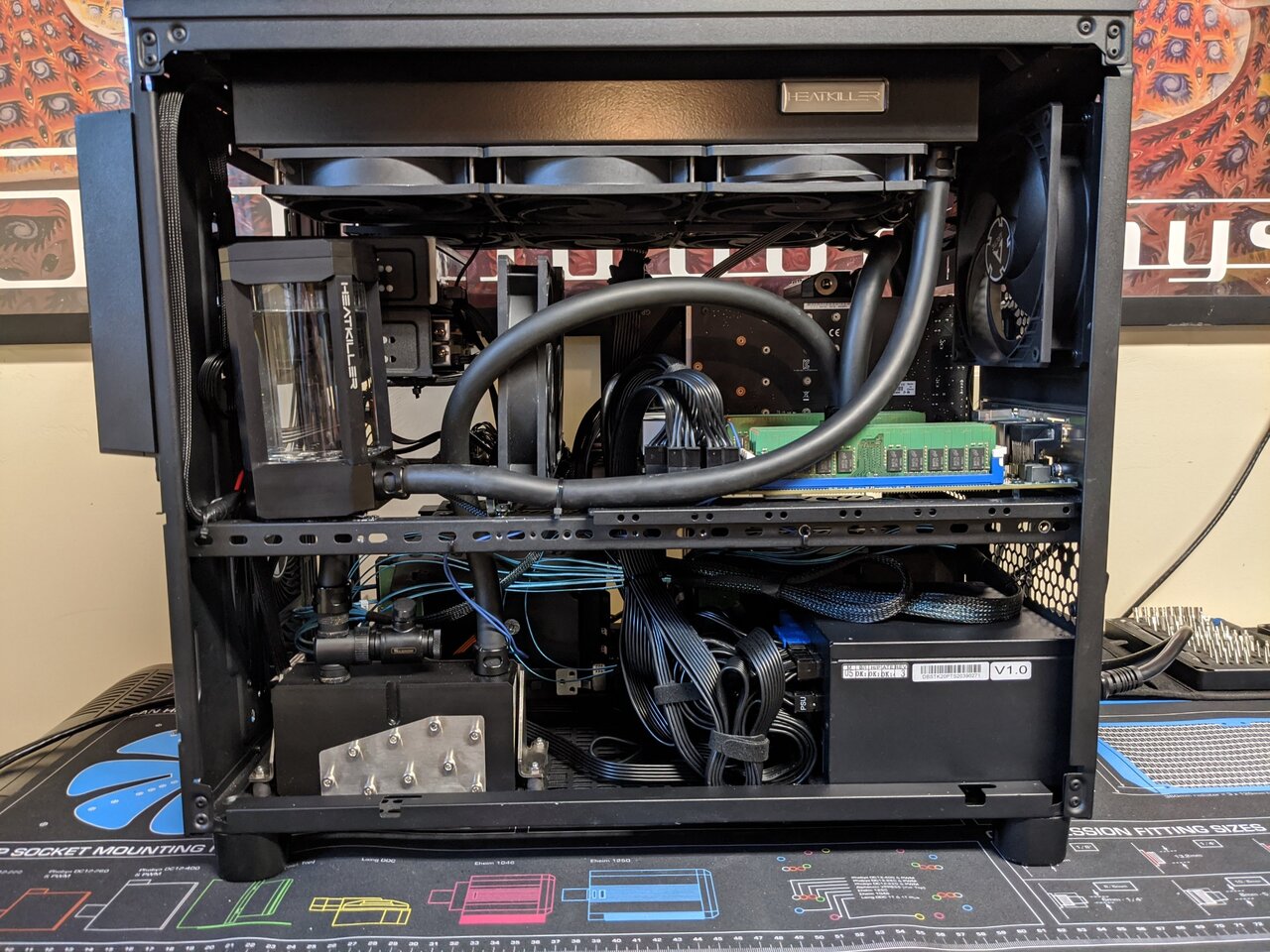

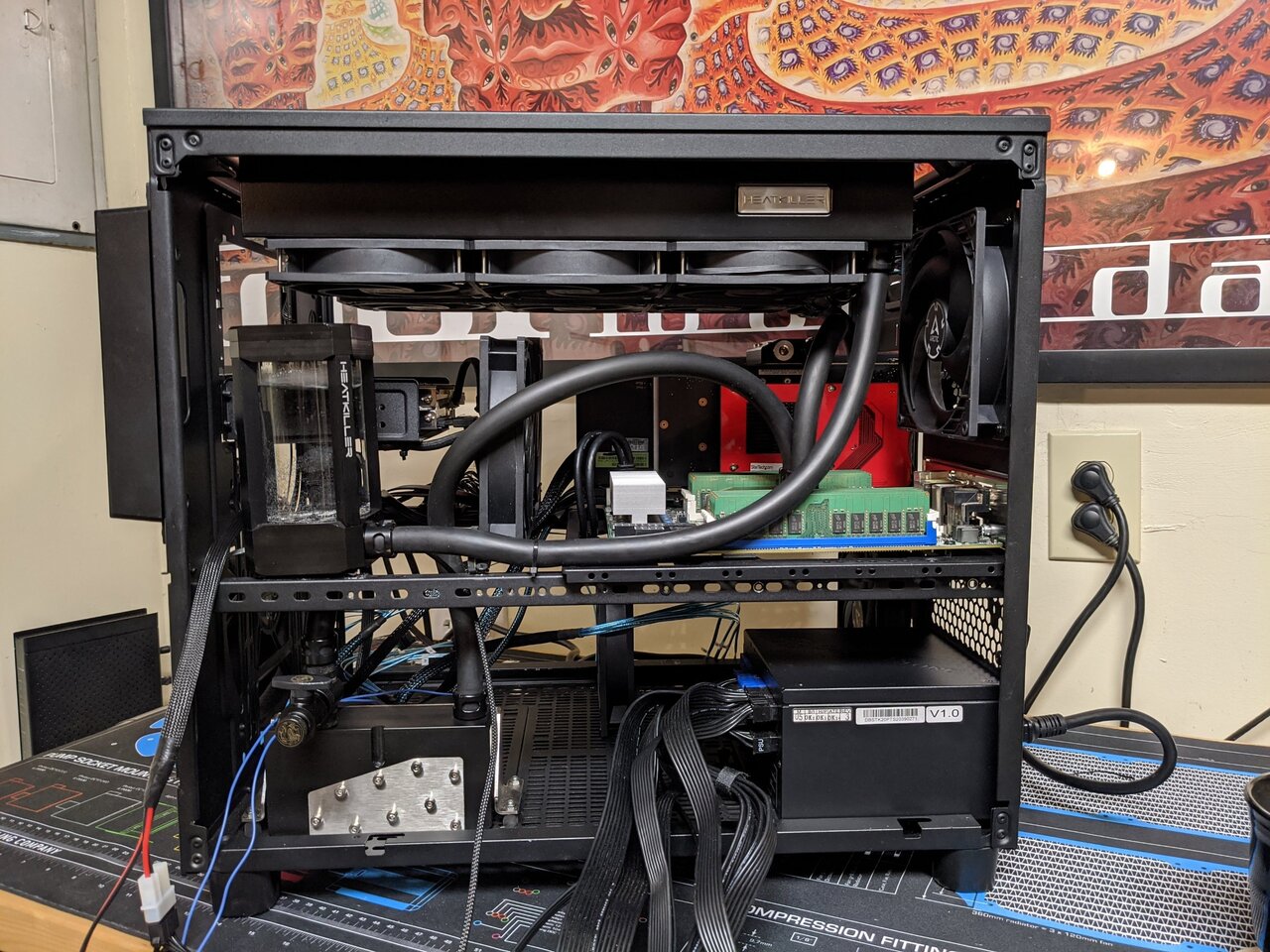

The never ending build log has been resurrected. This is without a doubt the longest I have ever spent on a build without really changing much from the initial config. Leak test done, and currently bleeding the air out of the system.

-

Had some hours of downtime today due to an extended power outage, but all the things are back up and folding now.

-

By default the AppData folder is hidden. Is it just the FAHClient folder that is missing, or can you not see your AppData folder at all? You can also get there by pressing Win+R and typing %APPDATA% in the Run box, which opens the AppData\Roaming folder where the FAHClient folder lives.
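%APPDATA% expands to the Roaming folder, and hidden folders are still visible to scripts. This small sketch assumes a Windows install and the default `FAHClient` folder name mentioned in this thread; the `env` parameter is only there so the function can be exercised off Windows, and `fahclient_dir` is a hypothetical helper name.

```python
import os
from pathlib import Path

def fahclient_dir(env=None):
    """Return the expected FAHClient folder under %APPDATA%, or None if APPDATA is unset."""
    env = os.environ if env is None else env
    appdata = env.get("APPDATA")
    if not appdata:
        return None
    return Path(appdata) / "FAHClient"

folder = fahclient_dir()
if folder is None:
    print("APPDATA is not set (not running on Windows?)")
else:
    print(folder, "exists" if folder.is_dir() else "is missing")
```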

-

I have a bunch of the CPC QDs and they work great, without a drop spilled or any issues in the 4 years (maybe longer??) that I've been using them with the external radiators for my main workstation. There was a time when the pricing on those was really good. I think that all ended when EK started selling them stand-alone and with their Predator kits.

-

No worries on any extra work, it's pretty much just a one liner from the log file. The client stores all the logs locally, and you shouldn't need HFM. I haven't folded on Windows in something like 8 years, but unless something has changed, the logs should be in the FAHClient data folder at: C:\Users\{username}\AppData\Roaming\FAHClient
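To gather the logs for grepping, something like the sketch below collects the current log and any older ones. It assumes the v7 client layout of a `log.txt` in the data folder plus rotated logs in a `logs` subfolder; adjust it if your install differs. `collect_logs` is a hypothetical helper name.

```python
from pathlib import Path

def collect_logs(data_dir):
    """Return log files in the FAHClient data folder: rotated logs first, then the live log.txt."""
    data_dir = Path(data_dir)
    # Assumed subfolder for rotated logs, sorted by file name.
    rotated = sorted((data_dir / "logs").glob("*.txt"))
    current = data_dir / "log.txt"
    return rotated + ([current] if current.is_file() else [])

# Example on Windows (substitute your own user name):
# for path in collect_logs(r"C:\Users\{username}\AppData\Roaming\FAHClient"):
#     print(path)
```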

-

It should be pretty easy to sort out. Just parse the logs from the start of this month to when you removed the passkey, add up the points for the slot with your 2080 Ti, and then subtract that from your total. The credit estimate in the logs is usually really close to the awarded credit. @Supercrumpet if you want to attach your logs, I can give them a little grep-fu. -EDIT- If you're running Linux, here's an easy one-liner. Just substitute in the correct slot number: `journalctl --utc --since "2022-04-03" -g 'FS00.*points'`
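The same idea in Python, for when you want the sum rather than just the matching lines. It assumes v7-style log lines of the form `...:WU00:FS01:...:Final credit estimate, 1234.00 points`, so check a line from your own log before trusting the regex. The sample lines and the reported total below are made up.

```python
import re

# Assumed v7 log format; adjust the pattern if your log lines differ.
CREDIT_RE = re.compile(r"FS(\d+):.*Final credit estimate, ([0-9.]+) points")

def slot_points(lines, slot):
    """Sum the final credit estimates logged for one folding slot."""
    total = 0.0
    for line in lines:
        m = CREDIT_RE.search(line)
        if m and int(m.group(1)) == slot:
            total += float(m.group(2))
    return total

sample = [
    "12:00:01:WU00:FS01:0x22:Final credit estimate, 1500.50 points",
    "13:10:44:WU01:FS00:0xa8:Final credit estimate, 200.00 points",
    "14:22:09:WU02:FS01:0x22:Final credit estimate, 499.50 points",
    "14:22:10:WU02:FS01:Sending unit results",
]
removed = slot_points(sample, 1)
print(removed)  # 2000.0

reported_total = 10_000.0  # made-up figure for illustration
print(reported_total - removed)  # 8000.0
```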

-

@axipher What's the status on your 750 Ti? Things have finally slowed down for me, so I'll be around the forums a bit more now.

-

I DIY'd one for testing before the Kickstarter was funded, and it worked really well.

-