
Posts: 2,211

Days Won: 96

Feedback: 0%


Everything posted by J7SC_Orion

-

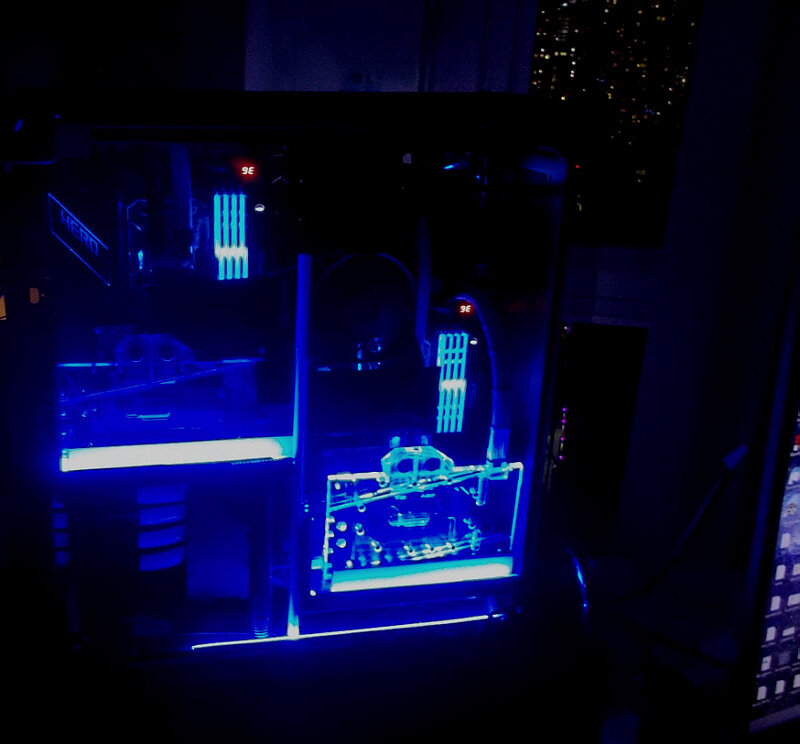

I seem to have fewer driver problems with my 6900XT (left in pic below) than you, perhaps because I tend to run older 'proven by moi' drivers - but clearly, the 4090 (right in pic below) is a far better match for 4K/8K resolutions. FYI, both GPUs are connected to the OLED...

-

I really like the looks of the Matrix, but underneath it just seems to be a Strix OC (worse things could happen). FYI, DerBauer mentioned before on YT that he was working with Asus on a liquid metal solution. That said, the 4090's biggest challenge is voltage limitation, not temps (I water-cool everything anyways). While Asus talks about 'highest boost clocks' in their media release and on their web site, so far they actually have not published any clocks, even under 'technical features'... I also wonder whether the Matrix has the original AD 102-300 (1.1V max) or the more recent AD 102-301 (1.07V max) core NVidia quietly introduced. A final point re. the Matrix is that it only has one 12VHPWR connector, unlike the Galax HoF which has two 12VHPWR...a lot of things have to go right with a single 12VHPWR connector juicing 600 W or more; two 12VHPWR connectors would be a better option for the Matrix to underscore its top of the line claim... Speaking of 12VHPWR connectors, get a load of this...

-

...thanks for the reminder on the carpet chair mat. I bought a new one but had it in the back of the SUV for almost a year; finally brought it up yesterday and replaced the old one (which was the clear plastic type)...

-

...many eons ago, I often drove the 2nd gen Dodge Viper a friend owned at the time - quite a handful on the twisties... Meanwhile, now back at the farm, 3rd week after car wash - still looks good from a distance

-

...quick business lunch at Earls > my fav there > Cajun blackened chicken with salad and warm potatoes

-

...you'll never guess ...MOAR FS2020. I could see this region with a perfect dusk from our place, so a quick flight was in order. Switched back to the Galax vbios and using only a very mild OC and a PL of 80% out of 121%. Per top left, still managed to exceed the 4K/120 monitor max

-

A glimpse at future GPUs...but will they play Crysis ?

J7SC_Orion replied to J7SC_Orion's topic in Workstation

...It has been a while for this thread, but the vid below is extremely interesting, if a bit tedious at times. @Sir Beregond will appreciate the AMD vs NVidia wafer cost differentials, and @bonami2 might find his next graphics card in there somewhere... The long and the short of it is that AMD is about to fire a major shot at NVidia re. AI hardware, though NVidia's ecosystem on related software still rules. In any event, enthusiasts and gamers will just get the bread crumbs that fall off the AI table

-

Replacing Desktop With Laptop, Recommendations?

J7SC_Orion replied to ENTERPRISE's topic in Laptops/Tablets & Phones

...a point to remember is that what they call a 4090 in laptops is actually a bit less than a desktop 4080, and it goes down the GPU stack like that. I would make sure that the laptop has very capable GPU power for connecting to and driving a wall-mounted OLED TV / monitor at 4K/120 to go with that couch for when you are home and relaxing (~ vegging out). Dell/Alienware, MSI, Gigabyte, Asus, Acer etc. all have good models around the 17 inch +- OLED screen-size; just bring your wallet...

-

...good for 'floating' in OLED + Atmos space

-

...I might be utterly (get it: utter ) wrong, but DNS services might be one place to examine site performance issues...

-

I time-stamped YT, this is also used for sound-checking home theaters and sound bars. The graphics are neat, too. While I have a sound bar, it is not even connected to the LG 48 inch OLED...for gaming and YT, I sit quite close (~ 1.5 ft) and as such, the 'native' sound is superb, IMO. Have fun:

-

First carwash since last fall's first snows ...then: ...now: The protective crust of dirt that had built up protected the paint perfectly...

-

...^this is not an isolated incident, IMO. Of the last 14 GPUs I bought for work + play, the majority had badly applied thermal paste and high deltas for hotspot temps. My thermal pantry box is full of various thermal pastes, thermal pads and thermal putty (the latter vacuum-sealed and in the fridge for future builds). Only the 2x Gigabyte 2080 Ti WF factory full waterblock cards (from late 2018) have/had no issues and have never been apart. I water-cool most GPUs anyway which is how I also know about factory thermal paste disasters, even if they are not immediately obvious...My standard GPU cooling-mod approach is: Gelid GC Extreme for GPU die paste; thermal putty for the VRAM plus select VRM and back of die; decent thermal pads for the rest of the VRM; extra big heatsink for the backplates.

-

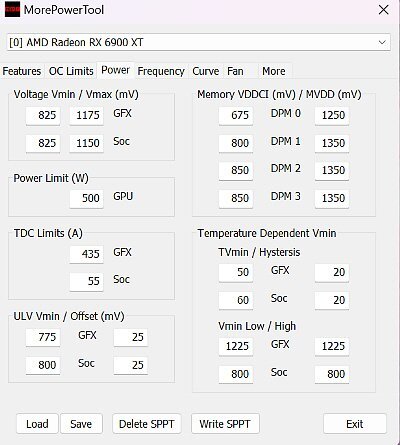

...yeah, but... one can increase the power limit of the AMD, and reduce it on the NVidia (I do both, coz, reasons) ...but seriously - NVidia segmenting the market to death with GPUs that have as little as a 128-bit memory bus is getting pretty silly. While AMD could take advantage of the situation re. a new and really competitive price-performance lead in this US$ 400 segment, they probably won't. Hopefully, I'm wrong on that.

-

...Good idea - and I have enough fans for it and also a good breeze ~30 floors up >>> but the condo strata council & city safety engineers would get too annoying if I hang that outside the windows...

-

...another new eye-candy release and great viewing for OLED; personal bonus, as this one actually has a few shots of our neighborhood in it, including the street we live on (I did a double-take - hey, I know that intersection)...

-

The built-in YouTube of the LG is a bit of a pain to maneuver around in, even with voice commands, compared to 'regular desktop YT', but it can eliminate both the PC-GPU and the cable as a potential problem. FYI, I just ran the vid below on the 6900XT switched to the 48 inch OLED and 4K HDR and 8K HDR worked well - though the 8K HDR stream is a bit jerkier on the AMD w/ 16 GB VRAM compared to the 4090 w/ 24 GB of VRAM (not due to my connection - same for both, which is 1 Gbps up/down); could be codecs ...still, 4K HDR especially is stunning on both GPUs and the OLED; for 8K streams, I prefer the 4090.

-

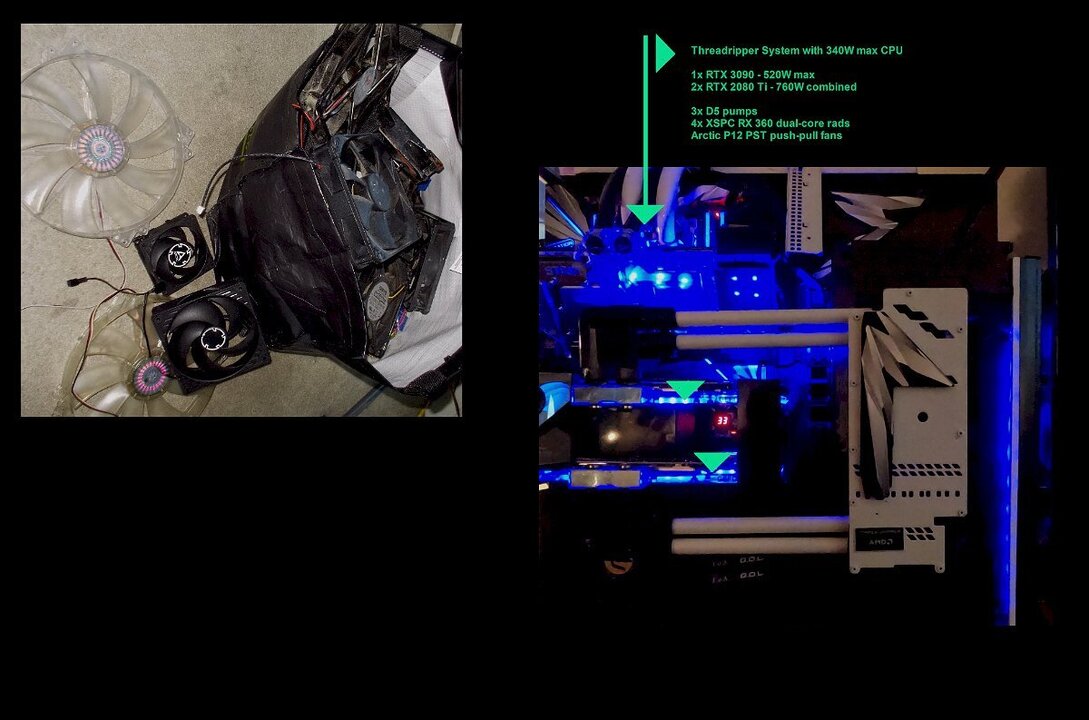

...I would love to see some additional upcycling ideas here, re. fans and 'other'...pic on the left is just one of three bags of old fans (OEM, custom, 80 mm - 200 mm) and the fans need to find some gainful employment instead of just taking up space / dust! Pic on the right is an upcycling solution of sorts. This thing with its three GPUs and TR normally gets used for some light ML and as a rendering backup machine. However, we had some window closing / locking issues right at the coldest time mid-winter, so in the evenings, I fired that thing up and ran a bunch of Octane benches which maxed all three GPUs at once; within 5 min, room temp had risen by 3 C (and more when running it longer). Nice and cozy space-heater!

-

I had a similar issue with a 2080 Ti on LG 55 IPS HDR some years back - at the time, I was using a 'good' HDMI 2.0 cable but it would only do 30 Hz at 4K no matter what. However, when using a DP-to-HDMI connector with the DP port on the GPU and then the same HDMI 2.0 cable as before, I got 60 Hz and far less stuttering, and in my mind anyway, a sharper pic. FYI, I just tried the vid above at 4K and 8K on my 6900XT > 40 inch Philips VA and it is nice and sharp with both DP and HDMI 2.1 ...will try the LG OLED / 4090 / HDMI 2.1 combo after work. Have you tried watching the same vid on the LG 48 OLED internal 'native' YouTube (if connected to a network)?

-

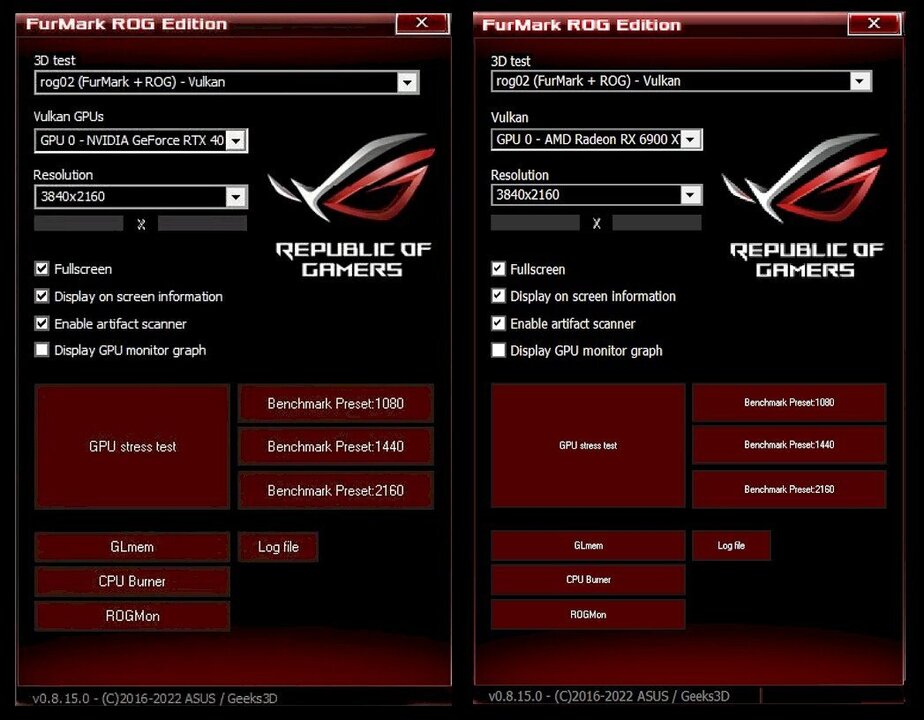

...kudos, really good result for ECC ...I'll try to find some older comparisons I did with ECC on vs off at the same MHz using memtest_vulkan...regular GDDR6X actually already has 'some' error correction built in anyhow, but 'full on ECC' is an unnecessary anvil to drag behind. ... @Avacado @Bastiaan_NL and I had some extensive discussions when this first arose...some HWBot elite league folks felt upstaged by 'regular' newbies who posted results which clearly had some artifacts. Then HWBot did a knee-jerk reaction and required only RTX 4K cards to run with ECC on. My HWBot elite league years are behind me anyway, but I argued that this rule change only applying to one model range is ludicrous and a failure of HWBot management....they introduced a huge bias with that even though a bit of sleuthing can expose artifact runs anyways. All that said, I don't like it either when 3DM has results that clearly involved artifacts but as mentioned, you can usually tell by looking at extra details and fps curves. ...a couple of my runs below from up to ~ March '23 for both Port Royal and Speedway involved artifacts - but I didn't post those. I also have newer runs, but not handy. Anyways, 'the cure' is worse than 'the disease', IMO. Addendum: Also per earlier discussions, there are some up-to-date, quick tests to check and scan for artifacts (i.e. below)...something like that should be built into 3DM SystemInfo (which takes forever these days anyways) for any card and model to make it a level playing field...
