

Everything posted by J7SC_Orion

-

...I am talking about efficiency - when you have two cards that both run at 400 W but one produces much higher results with that 400 W (score, fps), it is more efficient in that it has less heat loss. Dumping the same 400 W into something does NOT automatically create the same external heat output. As a somewhat distant but akin example, when cars were first turbocharged en masse, there was a lot of energy loss due to heat (less of the fuel energy went into actual work, more into waste heat). Then they started coating the headers etc., and finally moved to what is called a 'hot-V' layout in a V6 or V8.

But @Sir Beregond and @pioneerisloud, I was really just trying to help the OP re. his question on power connections for the 4090, and also compared it directly to my 3090 (up to 520 W) in the same loop w/ everything else the same...
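To put a number on the 'same wattage, higher results' comparison, here is a minimal sketch of a performance-per-watt calculation. The fps figures are hypothetical placeholders, not measurements from this thread, and `perf_per_watt` is just an illustrative helper name.

```python
def perf_per_watt(fps: float, watts: float) -> float:
    """Frames per second delivered per watt of board power."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return fps / watts


# Hypothetical numbers for illustration only (assumed, not measured).
card_a = perf_per_watt(fps=60.0, watts=400.0)   # older card at 400 W
card_b = perf_per_watt(fps=100.0, watts=400.0)  # newer card at 400 W

# Relative gain in work done per watt at identical power draw.
relative_gain = card_b / card_a - 1.0

print(f"card A: {card_a:.3f} fps/W")
print(f"card B: {card_b:.3f} fps/W")
print(f"gain:   {relative_gain:.0%}")
```

Swapping in your own benchmark scores and logged board power gives a like-for-like figure for any two cards.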

-

...obviously, no need for coals to Newcastle as 'Watt' is used to quantify the rate of energy transfer. If you read my previous post carefully, I wrote the card is running cooler at the same wattage...for whatever reason, the 4090s are more efficient at the same wattage than the previous gen, and less warm metal in a case probably also helps with that efficiency. Overall, the 4090 gen seems to have fewer heat losses > higher efficiency.

-

...I thought @Sir Beregond was talking about heat in his case when he wrote '...sometimes this 3080 Ti with its 350W really is dumping out way too much heat'. On that, my point was that at the same wattage, my 4090 runs cooler than my 3090 in an identical cooling and case setup, including the area around the mobo, PCH etc. Not only is it a different node (4N), but the card's PCB and water-block are MUCH smaller and more concentrated than the 3090 (and 6900XT) blocks - less overall warm metal in your case to heat things up. It's kind of funny if you strip a 4090 of its giant stock air-cooler and are left with just the PCB and water-block.

-

Oddly enough, the 4090 runs about the same temp to slightly cooler than the 3090 in exactly the same (extensive) loop. The 4090s do like higher VRAM temps than I am running though, but I can always turn up the thermostat for the central heat if I want to bench.

-

A glimpse at future GPUs...but will they play Crysis ?

J7SC_Orion replied to J7SC_Orion's topic in Workstation

...some more OP-related head-spinning future tech for GPUs...somewhat speculative of course, but interesting nonetheless...

-

Per @iamjanco 's comment above, I got my Gigabyte Gaming OC RTX 4090 dual-bios model over a month ago...a local outlet of a national chain had about 30 or so for the equivalent unit price of US$ 1,619 but since those early few days, everything on the 4090 front (unlike the 4080s) seems to be sold out. In some tasks, the 4090s are almost twice as powerful as a well-running 3090....

Re. power consumption, yes they can get to 600W (more w/ transient spikes, though those seem better-controlled in this gen), depending on the model. Typically though, they run very efficiently with far less power than RTX2K and RTX3K...for most games such as Cyberpunk 2077 and FS 2020, I use about 380W-400W with a mild oc, and only hard benching will get me to 600W per a few adjustments in MSI Afterburner.

The 4-into-1 adapters (4x PCIe 8-pin to 1x 12VHPWR) that come with the cards in their boxes do work, though great care has to be taken that they are not twisted and are also FULLY inserted (no gap). Apart from the aforementioned 4-into-1 dongle, I also got a single cable from Cablemod (pic below) that uses 4x PCIe 8 (=6+2) pin connections at the PSU end. In addition, Seasonic was kind enough to send another 12VHPWR cable for free (for a recently purchased Seasonic PX1300W unit) and that cable 'only' has 2x PCIe 8 pin connections at the PSU end but is also rated for 600W (stamped right on it; wire gauge also plays a big role in all this). I haven't tried that one yet but have seen other folks report that it works fine at 600W.

The 12VHPWR cables are actually 12 + 4 pins (the latter 4 are sense pins / wires re. PSU capabilities). I do think you would have issues reaching full power with just 2x 6 pin PCIe - or even powering up at all. In any case, Cablemod has a nice configurator > here - perhaps your model is still part of their database, re. additional info, even if you don't buy from them.
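As a rough sanity check on the connector math above, the sketch below adds up nominal connector ratings. It assumes the standard PCIe card-side limits of 75 W per 6-pin and 150 W per 8-pin; `cable_budget_w` and the config names are hypothetical, and this is a simplification rather than a statement of what any particular adapter will negotiate via its sense pins.

```python
# Nominal per-connector limits for PCIe auxiliary power (card-side connectors).
PCIE_6PIN_W = 75
PCIE_8PIN_W = 150
TARGET_W = 600  # full 12VHPWR rating mentioned in the post


def cable_budget_w(n_8pin: int = 0, n_6pin: int = 0) -> int:
    """Sum of nominal connector ratings feeding a 12VHPWR adapter."""
    return n_8pin * PCIE_8PIN_W + n_6pin * PCIE_6PIN_W


configs = {
    "4x 8-pin adapter": cable_budget_w(n_8pin=4),
    "2x 6-pin only": cable_budget_w(n_6pin=2),
}

for name, watts in configs.items():
    verdict = "covers" if watts >= TARGET_W else "falls short of"
    print(f"{name}: {watts} W nominal, {verdict} {TARGET_W} W")
```

Note that these figures describe the PCIe-style plugs on an adapter. PSU-side modular connectors are vendor-specific, which is presumably how a native cable with only two PSU-end plugs, like the Seasonic one, can still carry a 600W rating.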

-

...why not go all out for your next build ?

-

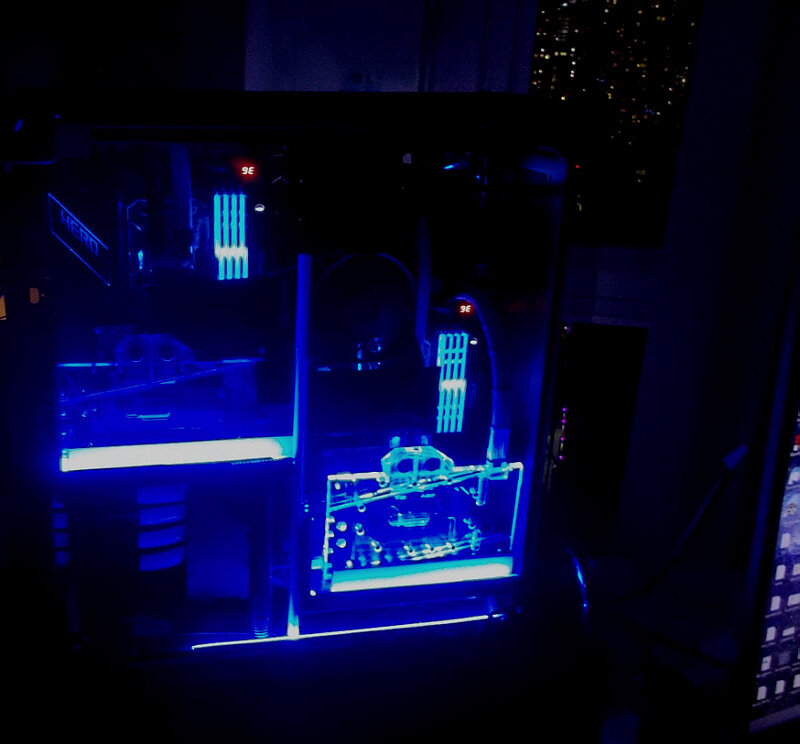

...some minor modding of the appearance; finally dealt with some smaller details that were bugging me for a while...better pics soon

-

A glimpse at future GPUs...but will they play Crysis ?

J7SC_Orion replied to J7SC_Orion's topic in Workstation

...they are probably waiting to make a few $ billion first from the HPC / Big Data / AI corporate folks. However, if you're in a hurry and own your own power station (for that ~4 kW), you can try out this today...

-

A glimpse at future GPUs...but will they play Crysis ?

J7SC_Orion replied to J7SC_Orion's topic in Workstation

mGPUs via tiles are supposed to appear as a single GPU to the OS and drivers...but there might be latency issues on the gaming desktop front, at least initially...

-

...hope you've got enough flux capacitors on hand

-

...All too often, gamers think that they get to buy the 'latest and greatest' in terms of graphics processors, but the real money for vendors, and the real innovation by them, is in HPC and enterprise solutions (AI and other). What is happening in that market (where a single GPU accelerator can cost close to $20k and doesn't even come with HDMI or DP I/O) can sometimes foreshadow what gamers get as cut-down hand-me-downs in future desktop and even workstation GPUs.

With that in mind, below is a brief screen-shot summary of the latest by AMD, Intel and NVidia - the information and pics are straight from their respective corporate sites, so some of the performance numbers should be taken with a grain of salt (in addition to some numbers being doubled up via two physical 'cards' being used). Also, this is by no means an in-depth look, just a brief fly-over.

Still, it is clear that multiple processing cores or 'tiles' per single card are here to stay. In addition, these enterprise HPC/AI offerings are highly scalable, both horizontally (including but not limited to adding more of the same accelerators, often w/ VRAM pooling) as well as vertically (integrating them into the system RAM pools). That in turn also underscores another 'trend': All three vendors want to max their revenues and minimize their clients' developer time by offering turn-key solutions.

AMD has been making huge inroads into the server market over the past few years on the 'CPU front', and AMD Epyc Genoa with up to 96 cores/192 threads, 12 channel DDR5 and 128 PCIe 5.0 lanes looks set to extend those inroads. Intel though is pushing a new combo ('Max' series) of both CPU and GPU that are highly integrated via Intel's oneAPI for heterogeneous computing, with AMD releasing its own new software packages to go after the current leader, NVidia. NVidia's problem is that its attempt at purchasing ARM (RISC) failed, though they certainly have the licenses to design their own RISC 'CPUs' to round out their impressive 'accelerator' offerings.

Eagle-eyed readers will note that Intel's latest offerings include the 12VHPWR connectors (at least on the PCIe versions) and looking at the power consumption figures, some of the 'cards' are rated at well over 500W.

So enjoy some 'nerdy' numbers such as 128 GB of HBM+ VRAM, massive memory bus widths, 100 billion XTOR GPUs and such... Will they play Crysis ? No idea... But when the hand-me-down consumer / desktop versions of these monsters make it to market, we get to try.

In alphabetical order, images and tables sourced from AMD, Intel and NVidia respectively:

-

Flight Simulator 2020 at 4K is my fav app and it typically gets the biggest chunk of my game time budget. FS2020 used to be horrible on CPU optimization (it would hammer one thread almost continuously at near 100%) but a big pile of patches later, it started to behave better. My 5950X still regularly maxes at 5050 to 5075 MHz on one core, but the latest patches combined with the improved DX12 beta for FS2020 and DLSS3 / Frame Insertion have literally transformed the whole thing !

...At 4K max everything (including detail on 400/400) w/ DLSS3 and F.I., with DLSS on 'quality', it gets close to a consistent 120 fps even in trickier scenery such as flying low over a metro area. FS2020 with DLSS3 and Frame Insertion was one of the big 'launch partner' apps for the 4090s, and it really does work, even in tricky situations such as flying deep through high-rise canyons in a big metro area in a snow storm at night where you need instant response.

My 3090 on FS2020 was entirely playable but the big challenge in FS 2020 isn't really the GPU but the CPU optimization (the CPU does a lot of the detail / ground pre-rendering). The key with DLSS3 is that it offloads from the CPU to the GPU. Re. frame insertion, that normally would introduce extra latency but the RTX4K has additional hardware to counter that. I now get to enjoy the C1 OLED at a full 120 fps in FS2020 with 4K max everything...

-

...on average, I only play a handful of titles, most of which are newer and demanding...of those, FS2020 and Cyberpunk 2077 get over half of my time budgeted for gaming. I also play F1 2021, Team 4 (motorcycles) and Forza H5, though the latter is not that 'captivating' (apart from the full-on graphics). A few older ones I've had for years such as NFS: Most Wanted run superbly well at 4K on all the GPUs I have in active use. The 4090 is for the heavy-weights. DLSS3 / F.I. has done wondrous things for FS2020 - which was not very well optimized - by offloading from the CPU. Now it is buttery-smooth.

-

...depends what you mean by 'need' - with GSync, the 3090 did quite well but once you start seeing 100 to 120 fps on the OLED 4K/120 with 4K ultra/DLSS3 and Frame Insertion, you want it all the time ...a ratchet effect of sorts.

-

guru3d GPU sales at lowest point in a decade

J7SC_Orion replied to UltraMega's topic in Hardware News

I've got a similar problem trying to locate an additional TR 2950X or TR 2970X for an extra mobo we have, as we are about to switch to a newer gen - so the backup board for the previous gen can go into light-server duty now. New old stock pricing for those isn't quite as bad as with your 9900K, but still, it's annoying to pay more than the original purchase price. I also noticed that my X570 Asus DarkHero mobo has gone up by about 15% in one year.

-

guru3d GPU sales at lowest point in a decade

J7SC_Orion replied to UltraMega's topic in Hardware News

...per below, I just checked RTX 4090 and RTX 4080 pricing and availability at caseking.de as of December 1 (European retailer; pls note that EU prices include a sizable sales tax). No 4090 models at all in stock, but six different vendors' 4080s, some of which are getting their first discount. The GPU market is heavily segmented, and pricing depends on competition in each segment, as well as buyer 'demographics' (i.e. per above, the 4090 has multiple demand curves). ...in a few months, we should see some more 'true' pricing, with AMD's next gen entering the market. Intel might just turn out to be the great blue hope for mid-range to upper mid-range.

-

....I saw that yesterday. I think it is entirely possible that AMD will spring a 'surprise' performance monster such as the '7990XT/X' as part of a refresh after the initial release. Good 6900XTs are already around 2900 MHz on ambient cooling in some apps, so the clocks for the 7900/7990 after a die shrink could indeed be up there per the vid. My '4N' 4090 has already peaked at 3210 MHz (light 3D load), though since I also use it for work, I haven't gone completely crazy with it.

...the AMD desktop card monster I would like to see is a consumer version of the Instinct MI250 dual-core server card (below, current price around $8,000)...give it a few years and we might see a similar RDNA3x dual-core version w/o HBM.

-

...nope, no Canadian (direct) sales either, or even warranty if you get one via international sales channels. For the RTX3K generation, Galax brought out several HoF 3090 versions that were clocked differently depending on the country (!). The HoF OCL on the other hand is not s.th. you can normally buy - it goes to select LN2 overclockers, though some of them do sell them used once they are 'done with them'.

-

...some Cyberpunk 2077 (4K max / RTX Ultra / DLSS3)

...above the local snow storm (FS2020 / 4K Ultra / DLSS3/F.I.)

...and below it

-

@Bastiaan_NL ...hadn't run Fire Strike Ultra in years but gave it a shot just to check s.th. - managed to get 14th in 3DM HoF so far with the 'old' 5950X...but VRAM temps were too low for this older DX11 bench when compared to the also shown DX12 games with RTX/DLSS3/F.I.

...ambient was 24 C for these samples; since I can't do much about the VRAM temps right now until I 'operate', I should probably do another Fire Strike Ultra run but at 18 C or so ambient to stop the core from downclocking as it did once it got past 43 C.

...some gaming fun with DLSS3 / RTX Ultra

...above the snow storm at sunrise

...and below the snow storm

-

guru3d GPU sales at lowest point in a decade

J7SC_Orion replied to UltraMega's topic in Hardware News

I guess it is all relative, also per your post > here . Anyway, I happen to make my income with computers so I probably have a somewhat different approach to hardware costs. Be that as it may, a couple of quick points:

1.) The 'cabling issue' has had multiple threads here (too many, IMO), most of which would degenerate into a continuous-repeat-tirade about 4090 pricing in particular and NVidia's market and pricing practices in general (pls see my comments above on not defending NVidia on that). Fact though is that the cabling issue actually only affected 0.05% of users, and most of those cases were traced back to user error, perhaps with some help from quality control on some connectors you had to be more forceful with. Never mind that the new cable is part of Intel's new ATX 3.0 standard and PSU producers are starting to switch over to it, also in part because of environmental regulations....

2.) On card pricing, the custom AIB 4090 (dual bios, 600W) was actually a touch cheaper per MSRP than the custom AIB 3090 (dual bios, 475W) I have, and about the same as the custom AIB dual-bios 6900XT. The latter two were purchased well before the crazy price bulge - shortly after, said 3090 model was offered by various scalpers for as much as US$ 5k on eBay...and even the non-scalper regular MSRP went up significantly. So the 4090 at MSRP was cheaper at about twice the performance of the 3090 at (original) MSRP...probably why the 4090 is mostly sold out right now.

Then there is the whole issue of both general prices as well as average annual incomes moving up...quick example would be the Honda Civic...entry level MSRP in 1992 was ~ $ 11,900...in 2012 ~ $ 16,000...and in 2022 ~ $ 25,000. It is not just NVidia...

Overall, everybody is entitled to their own opinion - about NVidia and everything else. However, if it starts to harp on the same issues already discussed ad nauseam a gazillion times before, it becomes disruptive and betrays the true motivations, IMO.

-

guru3d GPU sales at lowest point in a decade

J7SC_Orion replied to UltraMega's topic in Hardware News

...you better be careful 'admitting to owning' a 4090 RTX ... @ENTERPRISE : for some reason(s), 4090s in particular have seen the most 'unusual' responses here at ExtremeHW.net in several threads; I can't help but wonder why. Nobody here has to buy a NVidia product if they don't want to - it just so happens that I run both AMD and NVidia concurrently. My advice to folks is to vote with your wallet, subject to both your use-case and budget. Also, I am not a spokesperson for a particular POV on NVidia's pricing policies, nor do I enjoy those, as I at least tried to underscore already at the beginning of my previous post (apparently to no avail). So I'm not representing either side of a pro/con debate...

...instead, I am simply pointing out that the 090 series has an additional market beyond enthusiasts, gamers and benchers, and that is a given segment in the workstation realm. 24 GB of VRAM is certainly a lot better than 16 GB for those uses (among them video production / graphics and AI, to name just a few). Current top professional / enterprise cards beyond that workstation segment go up to 80 GB even before VRAM pooling, and Intel just announced the 'Max' series for that market with up to 128 GB of VRAM. So the amount of VRAM is indeed a HUGE differentiator for parts of the professional market, a segment of which also happily draws on the 090 series now - not really that hard to understand, IMO.

Then there is NVidia-specific productivity software...back when the Turing architecture was introduced, NVidia enabled it for the 2080 Ti and Titan RTX, but not other Turing cards. The workstation / pro_sumer market segment is important to vendors even if they prefer to upsell you the enterprise models. After the Titan RTX, NVidia switched to the 090 series as their top model outside the enterprise ones. Perhaps one day, they'll bring the 'Titan' label back, who knows....but for now, professional users consider the 090s 'Titan class' for very obvious reasons.
Being in the software-related business for decades+, I just have to look at our own hardware configurations, or those of companies in similar market segments - typically, between 2x and 4x RTX 090s for their workstations, some with more (> sample pic below). This includes some university labs as well. YouTube has numerous workstation builds concerning the 090 series (2x, 3x, 4x and more 090s), apart from the YouTube example of workstation builds I included earlier.

The fact that 4090s are also a big step up over the previous gen and a treat to run for private amusement such as gaming is a whole other chapter for future reference...suffice it to say that they're a blast and in some apps give you a whole new experience compared to the previous gen...I am now seeing some apps beyond 120 fps for 4K Ultra RTX max everything - my 4K 120 OLED is getting a real workout now.

This brings me to the RTX4K series, and why 'we' call the 4090 a Titan class card... the $400 MSRP difference between the 4090 and 4080 isn't even worth mentioning to any business or professional user - and apparently to many folks in the personal use space, judging by what is not available and what is left on the shelves at the time of writing:

RTX 4090: 76.3 billion transistors -- 16,384 cores -- 512 TMUs -- 176 ROPs -- 24 GB GDDR6X / 384 bit
RTX 4080: 45.9 billion transistors -- 9,728 cores -- 304 TMUs -- 112 ROPs -- 16 GB GDDR6X / 256 bit

The gap between the 4090 spec and 4080 spec is obviously big enough to drive a train through, and we'll surely see a 4080 Ti and the like. While the latest 'rumours' have the 4090 Ti delayed, if/when they release that, private and business users will jump on that, too. But remember, you don't have to buy anything from NVidia if you don't want to !
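For a quick feel of how wide that spec gap is, here is a small sketch that divides each 4090 figure by the matching 4080 figure. The numbers are the ones quoted in the post; the dictionary layout and key names are just illustrative choices.

```python
# Spec figures as quoted in the post above.
specs = {
    "RTX 4090": {"transistors_bn": 76.3, "cores": 16384, "tmus": 512,
                 "rops": 176, "vram_gb": 24, "bus_bits": 384},
    "RTX 4080": {"transistors_bn": 45.9, "cores": 9728, "tmus": 304,
                 "rops": 112, "vram_gb": 16, "bus_bits": 256},
}

# Ratio of 4090 to 4080 for every listed spec.
ratios = {
    key: specs["RTX 4090"][key] / specs["RTX 4080"][key]
    for key in specs["RTX 4090"]
}

for key, ratio in ratios.items():
    print(f"{key:>15}: {ratio:.2f}x")
```

Raw unit counts are only a proxy, of course; real-world scaling also depends on clocks, memory bandwidth and the workload.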
