Welcome to ExtremeHW

- Posts: 2,211
- Days Won: 96
- Feedback: 0%

Everything posted by J7SC_Orion

-

GAME: Ban the Above User for a Reason - EHW Edition

J7SC_Orion replied to Simmons's topic in Chit Chat General

banned for not using a rotary phone to dial up email...also note it says 'Enterprise' computer...now the truth @ENTERPRISE comes out, after all these years!

GAME: Ban the Above User for a Reason - EHW Edition

J7SC_Orion replied to Simmons's topic in Chit Chat General

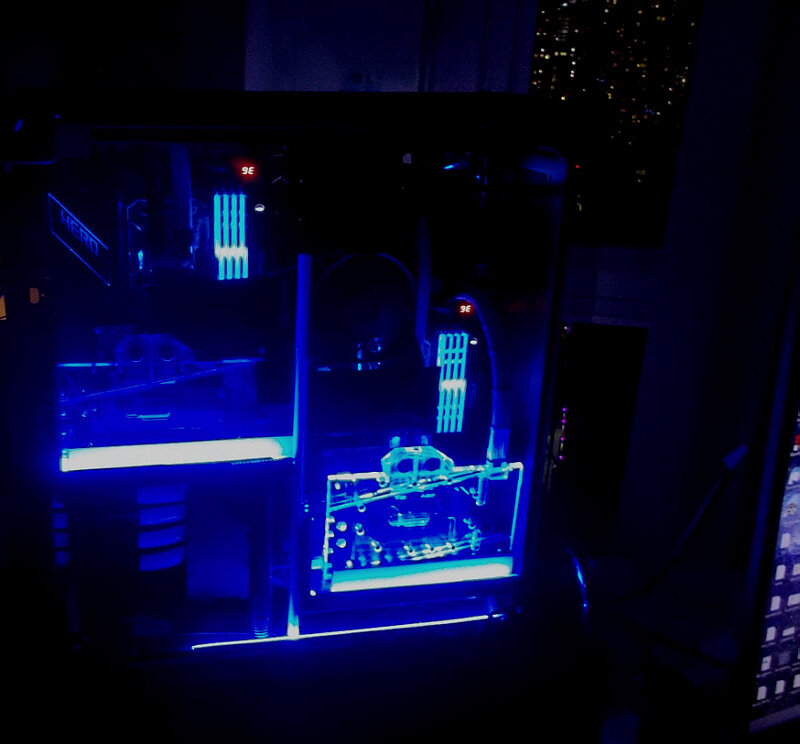

banned coz still working on the retro rig (just locked in final config 20min ago); gotta do something about them there hideous RGB and colour 'issues'...

GAME: Ban the Above User for a Reason - EHW Edition

J7SC_Orion replied to Simmons's topic in Chit Chat General

banned coz...now you know I adore Leo_Gus, but as far as the EVGA Classifieds, you'll likely 'go postal' [CA/US; am I right, or am I right?] peekaboo

GAME: Ban the Above User for a Reason - EHW Edition

J7SC_Orion replied to Simmons's topic in Chit Chat General

banned coz..."leaving NC"

GAME: Ban the Above User for a Reason - EHW Edition

J7SC_Orion replied to Simmons's topic in Chit Chat General

banned coz...Uh huh, 'big dumb doggies' convention?

Old HWBot warhorses makeover - fixing bent pins and other past sins

J7SC_Orion replied to J7SC_Orion's topic in Intel General

...did I read that there's some sort of inlay available now to block the pins / pads for the Z170 <> 9900K mod, or is it still clear-tape-and-cover DIY? In any event, are you going to stream your mod @ EHW?

Old HWBot warhorses makeover - fixing bent pins and other past sins

J7SC_Orion replied to J7SC_Orion's topic in Intel General

Tx much, I will follow up on that...now that I have quite a few extra functional mobos fresh out of the operating room, one of those can do NUC duties and the Z170 SOC Force (22 phase VRM set up for w-cooling <> lots of headroom) can enjoy a nice oc'ed 9900 coffee lake mod.

Old HWBot warhorses makeover - fixing bent pins and other past sins

J7SC_Orion replied to J7SC_Orion's topic in Intel General

...debating that per posts here

GAME: Ban the Above User for a Reason - EHW Edition

J7SC_Orion replied to Simmons's topic in Chit Chat General

...what's wrong with cloudflare's attire? ban

Old HWBot warhorses makeover - fixing bent pins and other past sins

J7SC_Orion replied to J7SC_Orion's topic in Intel General

Thanks gents.

Re. the elastic bands, I found a perfect supply - ordering take-out 'butter chicken' from my fav restaurant. Their take-out has those elastic bands around the containers, and those elastic bands are tough - I have frozen them, accidentally washed them and even heated them up...they last. In any case, as a bonus, they allow for infinite height adjustment for both fans individually, i.e. for DRAM clearance. Besides, fresh supplies are only half a block away...their butter chicken (extra hot) is superb, too.

Re. the top Asus boards, they used to be my automatic go-to choice, though these days, I also really like Gigabyte/Aorus and MSI. The Asus X99 OC pin stuff was a bit flaky, or at least the accompanying bios...early versions would load up 1.4+v on 'auto VCCSA' in some circumstances and there were other issues, though most got fixed later.

Re. the equipment being so old that it was covered in dust - well, six years or so for some of it is not short and dust-free. However, the Vaseline coating inside the socket (applied w/ a hair dryer and used to guard against condensation during freezing) made it much worse - it attracts and accumulates the dust. Once the top cover of the box was removed for whatever accidental reason and not replaced, time & dust started their relentless march...

A long time ago, in a galaxy far, far away...I did a fair amount of extreme oc @ HWBot w/ LN2, DICE and so forth. I haven't done that now in years, but the sin bin, the island of broken toys, the old warhorse barn of various GPUs, CPUs, mobos and so forth used in XOC was sitting on the bottom shelf in one of our store rooms - seemingly waiting for a second life, after some 'necessary operations' I had put off...and off. Then MrRetro '486' and I started talking about some older equipment, and before long, it was finally time to fix the retro stuff.

A quick disclaimer: per usual, if you decide to try any of the tips and methods I describe here, you do so at your own risk.

A quick pic of the materials I used (apart from the usual screwdrivers, pincers, pliers etc) for all the steps below. Btw, sorry about the quality of some of the pics, I was too busy to get my better camera out of the car in the garage 30 storeys down. Below are most of the special items I used: a magnifying glass, a bottle of 'isopropyl alcohol 99', a toothbrush with SOFT (!!) bristles, and a very fine stitching needle, along with a carpet knife that has a long thin razor blade (not shown). In addition, a conductive pen, such as the 'circuitscribe' pictured, is also useful for certain steps.

I already mentioned the Gigabyte Z170 SOC Force in other threads. It wasn't really broken, but after a long pause, I gave it a thorough cleaning (removing LET, art eraser, Vaseline and other insulation materials applied before for LN2). It's now running a nice low-voltage 6700K 'ES' and DDR4000 - but only at 4.7 as I still have to mount a water-cooling setup...and yes, the elastic bands on the two fans on the air cooler are a nice touch, but not permanent (they work great, though).

Next came the X99 Asus Rampage V Extreme...it was full of LET and art eraser insulation, had several bent pins (when a 5960X ES slipped out of my hands the last time I used it) and as a result was only running one of four RAM channels. The 2011 v3 socket itself had Vaseline in it from subzero prep over five years ago, and of course now dust and dirt had settled on top...in short, it was the biggest mess, the biggest challenge...

First, several liberal applications of isopropyl into the socket were in order, then turning it upside down and letting the resulting gunk drip out overnight. Then another round of isopropyl, but this time !CAREFULLY! brushed across all 2011 pins with the soft-bristle toothbrush. Finally, I could see which pins needed attention...Normally, I would enjoy a glass or two of my fav Malbec or CabMerlot when doing this after hours - but not for these next steps...

Using good lighting and the magnifying glass, you can quickly identify which pins need the most attention. My method is to very !CAREFULLY! place the thin razor blade of the carpet knife between the pin in question and the one closest to it (others may have a different method and in any case: please see the above disclaimer). I actually fix the handle of the carpet knife with a piece of duct tape onto the desk lamp so that I have two free hands - one holds the magnifying glass, the other a very fine needle - and I use the tip of the needle to VERY VERY !CAREFULLY! straighten out the pins in question...it is better to do it a little bit at a time instead of trying to get it all done in one go...those pins can break off easily. In the past, I have also used very thin surgical pincers to straighten pins, but not this time, given that the pins were not only bent but folded over.

Pre-operation of the Asus Rampage V Extreme:

...all said and done, it took two days of this...and of course repeated testing. After the cleaning, it still only had one out of four RAM channels working, then I got it to two after several tries fixing some of the bent pins, then some more quick testing with the CPU installed...and I got to three channels after some additional pin operations, and things were looking up! Finally, I got the last one fixed, and voila - Quad Channel happy days are here again for that board and CPU, per pic of the monitor below (running an old Win 8 pro bench drive).

...then I tried all the onboard USBs, the LAN and also mounted two old 780 Ti Classifieds to test out the PCIe channels...everything worked. I almost blew it though as the two GPUs were used for extreme oc before I put them aside...so years later when I re-mounted their air-coolers, I forgot to switch the GPUs' bios back from that 300% KingPin XOC & EVBot bios. Fortunately, I noticed the orange instead of the green LEDs lighting up...

The temp air cooler is actually an Arctic Threadripper model (good one, btw) and it will be replaced by a nice w-cooling setup in the near future...Now, these days, a 5960X @ 4.5 giggles is nothing to write home about, but it is 8c/16t and more importantly HEDT (quad channel, more PCIe lanes). Also, the 32GB of DDR4 Samsung-B I ended up mounting is already at 3333 MHz w/o any errors - I might get it to a higher speed and tighter timings once I get the cooling updated.

The next resident of the barn of old warhorses is a Z97 Maximus VII Formula...it has a great 'sought-after batch' 4790K that ran in the mid-5s w/o much effort or excessive volts at mild (-40C) sub-ambient back in the day...it really just needed cleaning. Per above, Vaseline in the socket and the same removal process. No bent pins - those only ever happened to me with 2011 / v3 sockets. For some strange reason, the Z97 Maximus VII Formula still doesn't like the XMP setting for the TridentZ DDR3 / 2666 MHz RAM, but I have chased down a lot of RAM tuning rabbit holes before, so I set everything up nice and tight at 2666 MHz manually. I still need to put the mobo cladding back on along with its built-in VRM w-cooling.

I'll skip over other mobos that just needed cleaning off from the gunk of XOC years back but otherwise required no major operations, such as a Rampage IV Extreme that now carries a (lousy-clocker) 4930K and 64GB of Ripjaws RAM for some office server back-up duty (i.e. during regular upgrades of front-line equipment).

There was however one final problem child, and it was one of my favourite CPU-and-mobo combos from the early days: a 3770K in a Maximus V Extreme mobo (early Thunderbolt onboard, plus ROG OC Key, Wifi, bluetooth, Intel 1GB Lan etc). This was really my first 'serious oc' mobo and the good news is that the mobo is fine - it just needed a thorough cleaning. However, the 3770K, capable of 5GHz at 1.32v on water and with a strong IMC, had lost one of its 1155 little gold pads underneath the CPU (picture forthcoming)...subsequently, it got continuously stuck during the boot cycle.

To fix that, other experienced OCers had recommended a few methods, among them using the above-pictured 'circuitscribe' conductive pen. After cleaning the underside of the 3770K CPU with isopropyl alcohol, I used clear tape to mask off the contact pads right around the missing one, making sure that only the missing pad area was exposed, and then applied the conductive pen. To my great relief, it worked and here is the mobo, awaiting deployment as a fun thing somewhere in my home-office...

Well, that's about it for the old warhorses finally brought back for a better life on a nice digital pasture. Perhaps I'll follow this up one day with 'how I baked my GPU' to reflow soldering and lived to tell about it...

-

...very nice board indeed, but we never had that type back in the day so it has to sit at another table than the one of my old heroes...for multi-socket server stuff, it's usually those ugly green Supermicro mobos in the even uglier cream-yellow cases (the ones that fade over time into the 'hospital wall colour'). I should get some more Rustoleum paint...

-

..tx for the link, will check it out

-

...Tx for the tip on bios modders / will seek that out...there's one bios update from 2015 I have downloaded but not installed yet (below). I should ask to have that modded if possible. As mentioned, there's a slightly less-featured (non-E) WS version which does have an updated Bios for Spectre & Co... that might be helpful input for bios modders for the 'E' model...

-

...I just kept this one since new, even after adding other more modern systems. The Gigabyte Z170 SOC Force and Asus X99-E WS are in that same 'special reserve'...when the X399 Creation workstation gets updated, there's a seat on that table for it, too.

...unlike later Intel gens, the X79-E WS was as fast if not faster than its Rampage Extr counterparts, especially on m-GPU. The only limitations are: a.) Asus has still not gotten around (at last check) to updating the bios re. side-channel stuff even though they did for a lesser version of this board, and b.) the bios does not do memory settings over 2400 MHz (unlike the Rampage), even if the CPU does, yet will go much higher via FSB tuning. It also takes decent amounts of memory tightening on secondary timings.

-

I've had the Asus X79-E WS board in use since late 2013...in fact, I'm writing this post on it as I just updated it (re. storage and OS) for some development server duty, along with an X99-E WS. IMO the X79-E WS mobo has superb build quality, a very strong VRM, dual Intel 1GB and of course a pile of x16 PCIe. I'm using 32GB of TridentZ 2400 on it w/o any issues. If you can find a good used one (w/o bent pins etc) it might be worth it.

-

GAME: Ban the Above User for a Reason - EHW Edition

J7SC_Orion replied to Simmons's topic in Chit Chat General

banned for cat discrimination

...time for some additional workstation builds...these will be based on older workstation mobos as they won't do 'front-line' database serving, just host some basic development work. Re. cooling fans for the rads, there are the Sunon genuine server fans but they are loud - maybe I'll stick with the GentleTyphoon AP29s for now...

-

...yeah white PCBs rock, especially if there's a Galax 2080 Ti HoF OCL on top of it! I do love my Aorus 2080 Tis, but they're a bit gaudy on the outside...fortunately, the black-white-silver theme of the workstation build they ended up in hides it all well.

...I'm still thinking about plugging a 9900K/S into my fav 'non-HEDT mobo', the Gigglebyte Z170 SOC Force (after I get rid of its orange theme with some Rustoleum white). I got this setup way back in '15, along with the first DDR4 3866 kit and a great 6700K ES which runs that RAM at 4000 / stock voltage. This combo has been serving as a testbench, and in various other functions...now I'm trying to decide whether to just make it a NUC, what with 3x M.2 on the mobo, a pile of Sata (+ a USB-C) connections and perhaps with a 4x M.2 PCIe card <> or instead mod it for the 9900K/S, per above posts...obviously, a 9900K/S wouldn't make much sense in a NUC.

-

GAME: Ban the Above User for a Reason - EHW Edition

J7SC_Orion replied to Simmons's topic in Chit Chat General

banned coz...I think Midget the cat might have eaten Mr. / Mrs. Toadie...Midget looks suspiciously guilty...Leo prefers chikinz, especially for his birthday tomorrow! Giant, juicy chikinz...

RAM is 'native' 3866 GSkill TridentZ GTZR for 'Z370'...I have 4 sets of that Sammy-B model which wander between machines, depending on the specific work tasks. It was not even on the QVL list for the mobo, but that turned out to be a good thing...not using XMP but did it manually with lots and lots of testing, and the 3466 end result was awfully close to the Ryzen Dram Calculator 'fast' settings. I can get error-free 3600 (so far) but only at 16-16-16 and looser secondaries etc...3466 at 14-14-14 is just a better, faster setting overall, as you suggest re. significantly tighter timings with only 133 MHz loss. Weirdly, 3533 is WORSE than 3600 - I guess the bios writers didn't really finalize that particular setting ?!
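For anyone wanting to sanity-check the 3466 CL14 vs 3600 CL16 trade-off, here is a rough sketch of the usual first-word CAS latency arithmetic (CAS cycles divided by the memory clock, which is half the DDR data rate). It only looks at the primary CAS value; the function name is made up for illustration, and secondary timings, which matter too, are ignored.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """Approximate first-word CAS latency in nanoseconds.

    DDR moves two transfers per clock, so the clock in MHz is
    data_rate / 2 and one cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_cycles * 2000.0 / data_rate_mts


# The two settings compared in the post (primary CAS timing only).
settings = {"DDR4-3466 CL14": (3466, 14), "DDR4-3600 CL16": (3600, 16)}

for name, (rate, cl) in settings.items():
    print(f"{name}: {cas_latency_ns(rate, cl):.2f} ns")
```

By this simple measure the CL14 setting comes out roughly 0.8 ns ahead, which fits the observation that 3466 at 14-14-14 feels like the faster overall setting.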

-

...Finally had some time to play around with additional DDR4 speed settings (went to 32GB from 64GB for the tests, Aida pre-set in the bios, and 1.38v for the Samsung B-die for all settings). This TR2 2950X does have a very good IMC, so it will run quad-channel DDR4 3600 without drama, but the slightly looser primary timings negated any gains over the standard DDR4 3466 daily settings - basically, it's a tie. Also, this mobo / bios just doesn't seem to like DDR4 3533...at all...ever... Here are the Aida 'read' results for all three settings ...and some Aida64 'memory copy' results for 3466 and 3600:
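As a back-of-the-envelope check on why 3600 vs 3466 ends up as a tie, here is a small sketch of the theoretical peak bandwidth for quad-channel DDR4. These are paper ceilings (data rate x 8 bytes per 64-bit channel x number of channels), not the Aida results from the screenshots, and the helper name is just for illustration.

```python
BYTES_PER_TRANSFER = 8  # one DDR4 channel is 64 bits wide


def peak_bandwidth_gbs(data_rate_mts: float, channels: int = 4) -> float:
    """Theoretical peak bandwidth in GB/s (decimal) for a DDR4 setup."""
    return data_rate_mts * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


bw_3466 = peak_bandwidth_gbs(3466)
bw_3600 = peak_bandwidth_gbs(3600)
gain_pct = (bw_3600 / bw_3466 - 1) * 100

print(f"DDR4-3466 quad-channel: {bw_3466:.1f} GB/s")
print(f"DDR4-3600 quad-channel: {bw_3600:.1f} GB/s")
print(f"Paper gain from 3466 to 3600: {gain_pct:.1f}%")
```

The ceiling only moves by a few percent going from 3466 to 3600, so it is plausible that looser primary timings eat up the difference in practice.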

-

AMD Allegedly Delaying Ryzen 4000 Series ‘Zen 3’ CPUs To 2021

J7SC_Orion replied to Andrew's topic in Rumour Mill

Yeah, I guess the 'silly season' on rumours is shifting to high gear. Some '''reliable sources''' claim a delay of Ryzen4K to 2021, while others quote AMD as saying 'not true'. In any case, Ryzen3K 'XT' may have come about as yields are getting quite good on the 7nm process, so a quick relabel, a price increase, and voila, extra revenue for AMD. And just in case Ryzen4K really is delayed, the XT refresh will help to bridge