

Everything posted by J7SC_Orion

-

While I am enjoying the latest eye-candy in Cyberpunk 2077, Forza Horizon 5, Ride 4 and of course MSFS 2020, I still - after about a decade - also immensely enjoy roaming free in Unigine's Valley DX11 benchmark. Instead of 'just benchmarking', you can press 'F4' and some commands pop up in the lower right of the screen that let you walk and fly around the endless landscape, glide up trees or hover in the air above a valley, or go for a hike (or a flight) up a snowy mountain peak set to some simple, gentle music. It will run at the 'system' resolution setting, so 4K or more works, and modern GPUs will have no problem running extreme settings, which makes it even nicer. There are few modern games (apart from MSFS 2020) which are that relaxing...or just use it for fun. FYI, even modern GPUs will heat up with this so check temps... Unigine Valley is a free download! Speaking of Unigine, its more recent benchmark - Superposition (up to 8K) - has similar options, albeit more confined to the study-room...still, throwing levers on the gravity vortex and so forth is also fun, at least for a bit...Unigine Superposition is also a free download.

-

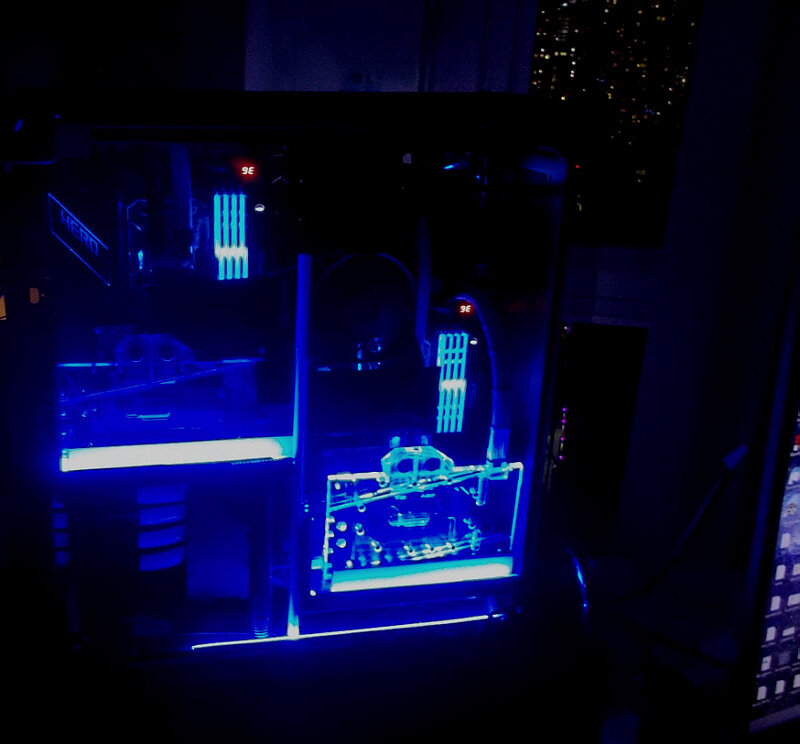

Speaking of mental health...as I have an extensive 'home office' where everything is water-cooled and whisper-quiet - while our near-by 'commercial office' is mostly servers (with plenty of 8k - 10k rpm fans) - I make sure to: a.) do the daily forest thing, per above (after all, this is British Columbia) and b.) always have relaxing music (often J S Bach) playing on one of my systems while I work on the other...

-

...btw, if anyone runs across DDR5 Dual_Rank 7200 32GB A-die or higher per stick, please post a link here. I realize that the 7950X3D's IF may not go that high, but I usually settle on just one type of RAM per RAM gen (not AMD or Intel specific) as I like to 'mix and match' down the line re. AMD and Intel workstations as well.

-

...rent a small office near-by ? I honestly think at least a weekly (I prefer daily) change of scenery / 'wallpaper' is important for the mental health.

-

- I didn't know about the back hatch with the Halo Pelican - have to try that!
- I've considered VR, but the few times I have tried it at friends' places, I get a weird migraine...but those were older setups. 4090 in particular should have enough oomph for VR. I understand that there are new OLED VR sets out there; I'll have to try one of those.
- The DLSS glass cockpit thing on DLSS RTX 2K clearly is an issue - if you check the Pelican cockpit above (DLSS3/FI/NVReflex) it doesn't seem to have that problem, but I will check on my other system.

-

Cyberpunk 2077 HD Reworked Project

J7SC_Orion replied to bonami2's topic in Journalism & Entertainment

"30 fps", "and may be crazy or getting old idk" ...could be that you are, but I'll also get there soon enough. CP 2077 was designed with the 'Bladerunner' look in mind, not to be photo-realistic. I also don't see the handlebar 'problem', noting that these pics were taken with both HDR and RTX Psycho on, which makes getting good stills a bit tougher. By comparison, the road in the vid you posted above looks a blurry mess, the plants are jaggy, as are some of the lines of the Range Rover and the houses. That said, when I feel like photorealism, I play > this which I got last year...Unreal 4 engine. From the demos I have seen about Unreal 5, we're in for a treat!

Cyberpunk 2077 HD Reworked Project

J7SC_Orion replied to bonami2's topic in Journalism & Entertainment

...at what resolution are you playing? At 4K ultra / everything maxed, I find it super-crisp, at least on my NV cards (AMD not quite). Still, if this improves it even more, I am going to try it. Also hoping to get some decent titles with Unreal 5 engine soon...Unreal 4 is already quite something.

-

Nice - though exceeding the speed of sound in a 737 may not go down well with the FAA & Co; busted! ...yeah, MSFS 2020 has been getting a lot of patches and also additional 3rd party marketplace content lately. That's good to see as they're still investing in it. The DLSS3/FI/NVReflex RTX4K update made me very happy, considering how MSFS 2020 started out - always full of promise, but the early iterations had some 'coding issues'. Some of those are still lurking under the surface, but have far less impact. Even the DLSS2 / RTX3K secondary setup I sometimes use is a lot more playable, IMO. Below, the 'weird' Halo Pelican leaving Lukla for Mt. Everest... Some Pitts stomach-twisting over our place... ...and a quick jaunt over to Iceland for some night flying...

-

...your front yard looks a bit warmer though

-

...I like roaming around in winter weather, do an hour or so in the forest and take some pics if the conditions are right...

-

50% gains in Cyberpunk 2077 at 4K with DLSS3, FI & Co

J7SC_Orion replied to J7SC_Orion's topic in Hardware News

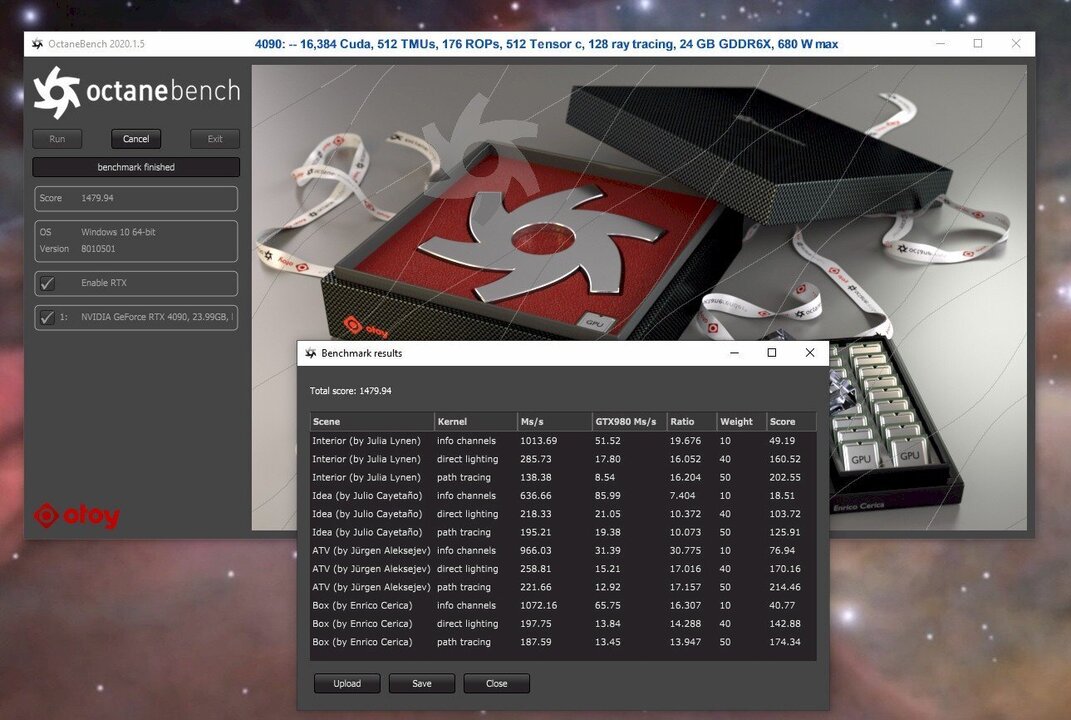

Point well taken; volume at highest margin is what an economist would recommend to maximize profits (not revenue). In any case, as important as we might think we are as gamers and 'fan-boys-and-girls', the real money for the big GPU manufacturers is in the workstation and enterprise markets. With the huge growth of AI, (primarily) Nvidia has been sitting pretty. It is also known to ride roughshod over some of its board partners by keeping them on a short leash re. designs and cost-plus pricing (see 'EVGA', compared to Palit; the latter owns all kinds of brands including Galax and is the largest GPU vendor). AMD and Intel are desperately trying to catch up to NVidia in the lucrative workstation and enterprise markets with their latest multi-tile offerings. Still, for now, NVidia rules with the Ada Lovelace and Hopper designs, and well-developed related software with years of a head-start.

Some permutations and combinations from those will hit the 'high-end' consumer market...see other threads about a potential 4090 Ti/Titan with 48 GB of VRAM; if/when it hits, it will be priced between the 4090 and RTX 6000 Ada...from that perspective, the US$ 1,619 I paid for my 4090 is outright 'cheap'. Put differently, those are not upgrades of GPU chips from the gamer market, but downgrades from the workstation and enterprise markets... Who knows what the future will bring? Multi-tile HD GPUs with tiles disabled for consumer models? How about paying a subscription fee to unlock some tiles on the GPU you already own? It boggles the mind...

So DLSS3 for RTX4K was easier for NVidia with its massive AI prowess compared to their competitors. All I can say is that the 4090 with its 76.3 billion transistors is a monster when compared to the 3090 at 28.3 billion and a 2080 Ti at 18.6 billion. DLSS3 / FrameIns / NVReflex is hugely impressive from my vantage point re. not only speed in fps and frame-times, but also visual quality...I use a C1 48 OLED 4K120 for comparison, and at that size and resolution, imperfections would show more dramatically.

A final point concerns the sheer gain in efficiency. Below are screenies from a workstation bench program called Octanebench, and while the oc'ed 3090 + 2x 2080 Ti setup just nudges ahead of a single 4090, I could try to oc the 4090 a bit more - but they are certainly close. HOWEVER, the 4090 used around 580W for that while the 3xGPU combo was at about 1,260W...at the end of the day, DLSS3/FI/NVR is an incredible technology; I just wish it would also be available on my 3090 Strix, at least 'partially', given some additional hardware requirements with DLSS3. At least we can hope that some of it is used to improve DLSS2 some more.
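To put the power numbers in that post into perspective, here is a rough back-of-the-envelope sketch. It uses only the two wattage figures quoted above (about 580W and about 1,260W) and assumes, as a simplification, that both setups score roughly the same in Octanebench, since the post only says they are 'certainly close'. The variable and function names are made up for illustration.

```python
# Approximate power draws quoted in the post (watts).
SINGLE_4090_W = 580.0
TRIPLE_GPU_W = 1260.0  # oc'ed 3090 + 2x 2080 Ti


def power_ratio(high_w: float, low_w: float) -> float:
    """How many times more power the hungrier setup draws."""
    return high_w / low_w


def power_saving(high_w: float, low_w: float) -> float:
    """Fraction of power saved by the leaner setup (0.0 to 1.0)."""
    return 1.0 - low_w / high_w


ratio = power_ratio(TRIPLE_GPU_W, SINGLE_4090_W)
saving = power_saving(TRIPLE_GPU_W, SINGLE_4090_W)

# ASSUMPTION: with near-equal scores, the power ratio is also
# roughly the performance-per-watt advantage of the single card.
print(f"3-GPU combo draws about {ratio:.2f}x the power of the 4090")
print(f"4090 saves about {saving:.0%} of the power for a similar score")
```

Under that equal-score assumption, the single card works out to a bit more than twice the performance per watt; the actual Octanebench scores would be needed to pin it down properly.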

50% gains in Cyberpunk 2077 at 4K with DLSS3, FI & Co

J7SC_Orion replied to J7SC_Orion's topic in Hardware News

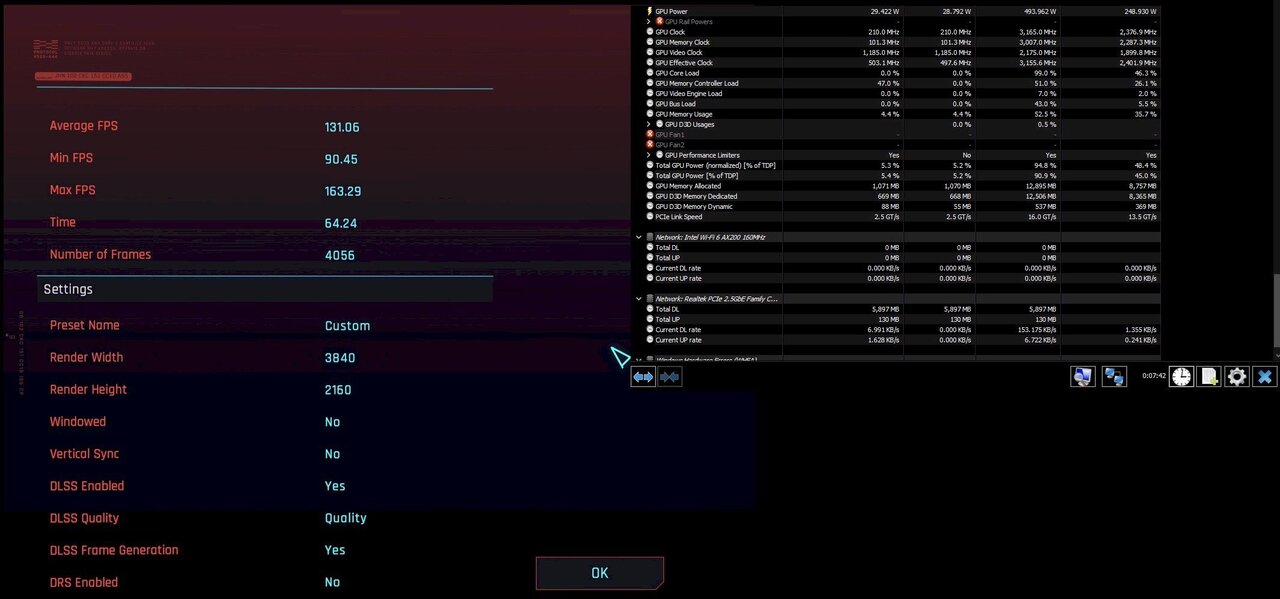

After experiencing DLSS3, Frame Insertion and NVidia Reflex on my RTX 4090 in MSFS 2020 as a flawless suite of tools that rejuvenated the 5950X setup and with 4K ultra settings hitting the limit of 120 fps on the 4K120 OLED in Flight Simulator, my expectations were already raised re. what the long-promised DLSS3/FI/NVReflex update would do for Cyberpunk 2077...answer: a heck of a lot. With virtually no stuttering or visual oddness, the same CPU/mobo/4090 oc setup went from an already decent 90 fps in the CP2077 internal benchmark (DLSS2, 'Quality') to over 130 fps (min fps are even more impressive) in DLSS3, 'Quality'. I didn't even oc to what the card is capable of because with the C1 OLED at max 120, it makes no difference...and I didn't think that I would be able to exceed the 4K120 level so soon. FYI, the same system with the same settings managed a respectable 45 - 50 fps (DLSS2, 'Quality') with an overclocked 3090 Strix at close to 2200 MHz. That card is now hooked up to an older IPS HDR panel at the other end of our place and that is a happy combo - be it for work or play - in its own right. For certain work tasks, the 3090 is paired with 2x 2080 Ti (all water-cooled). Still, I recall the early days of DLSS 1 - leaving much to be desired on the visuals. DLSS 3 / Frame Insertion / NV Reflex really are game changers, IMO, in the visual fidelity department as well. The irony is that the RTX4K series, especially the 4090, is probably the card that least required such boosts though apparently, there is also some extra hardware trickery involved on Ada Lovelace compared to Ampere.
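For anyone who wants to check the uplift from the figures in that post, a quick sketch follows. It plugs in 90 fps (DLSS2) and 130 fps (DLSS3) as round numbers; the post says 'over 130', so the real gain is somewhat higher than what this prints, and the thread title's 50% would correspond to 135 fps. The function name is made up for illustration.

```python
def percent_gain(before_fps: float, after_fps: float) -> float:
    """Relative fps gain in percent, going from before_fps to after_fps."""
    return (after_fps - before_fps) / before_fps * 100.0


# Figures from the post: CP2077 internal benchmark, 4K, 'Quality'.
# ASSUMPTION: 130 is used as a floor, since the post says "over 130 fps".
dlss2_fps = 90.0
dlss3_fps = 130.0

gain = percent_gain(dlss2_fps, dlss3_fps)
print(f"DLSS2 -> DLSS3 on the 4090: at least {gain:.1f}% more fps")

# fps needed to reach a 50% gain over the DLSS2 result
fps_for_50_percent = dlss2_fps * 1.5
print(f"A 50% gain would need {fps_for_50_percent:.0f} fps")
```

The same function works for the 3090 comparison (45 - 50 fps with DLSS2), where the jump to 130 fps is a much larger multiple.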

-

....I initially thought it was some leftover Christmas fruitcake

-

For my use cases, dual ethernet is a must - I only recently 'returned' from exclusively building HEDT with an eye on work/productivity apps (HEDT market is sort of dead - for now). But yeah, pricing has gone 'silly'. My Crosshair X570 Dark Hero is currently C$ 120 more than what I paid for it at the same retailer. I wonder what Gigabyte will charge for the new B650E Tachyon - it competes with the X670E Crosshair Gene but should be a bit more affordable. It has some nifty features re. DDR5 oc'ing and VRM.

-

...well, caution is advisable because I like pizza with pineapple, anchovies and Canadian back bacon ...I am far from having done any serious research on X670E, but for performance-benching-gaming only, I fancy the Asus Crosshair Gene, or something equivalent from MSI or Gigabyte. That said, I am more likely going to go with a semi-productivity mobo with decent oc capabilities. Like my current X570 Dark Hero, it should also come with dual ethernet - maybe something like the Asus X670E ProArt, which has 10 Gb and 2.5 Gb ethernet, DynamicOC and a few other tricks and bits.

-

Both Intel and AMD released their financial results recently. Nothing to write home about for either...in fact, rather bad news on many fronts for both. I picked YouTube / Coretek's summaries below -- Coretek is an acquired taste, much like certain kinds of strong European cheeses, but he does make some good points, especially about some accounting gymnastics at Intel, and also their share dividend apparently being based on additional debt. I will update this thread when NVidia releases financial results, though NVidia's position is expected to be stronger as they are well positioned in AI-related hardware (and they got my 4090 money).

-

On paper, asymmetric cores such as P + E make sense, but in practice, there will always be the scheduler issues with some apps, given all the legacy baggage Windows carries. I prefer symmetric setups because of that. Also, I use systems for both work and play and the 7950X3D makes huge sense to me at the price (compared to for example a 7/5995 Threadripper Pro). For gaming and some benchies, I would simply disable one or the other CCX in the 7950X3D and thus end up with a symmetric setup if needed.

-

For me, the choice is a bit easier since I skipped LGA1700 altogether (and so far, AM5). It is just too late to get into LGA1700 at this point and either way, I will get into DDR5 with the next upgrade. All that said, I first want to see lots of reviews on how the OS schedulers deal with the 7950X3D and 7900X3D. Also, I am still thrilled with my 5950X AM4 at 4K, so there is a bit of extra time to make an informed choice on a new mobo, DDR5 etc.

-

Noice ! I love the 'older' stuff...and for K/Ubuntu, it really doesn't matter anyways.
